The future of AI is now: Meet OpenAI's GPT-4

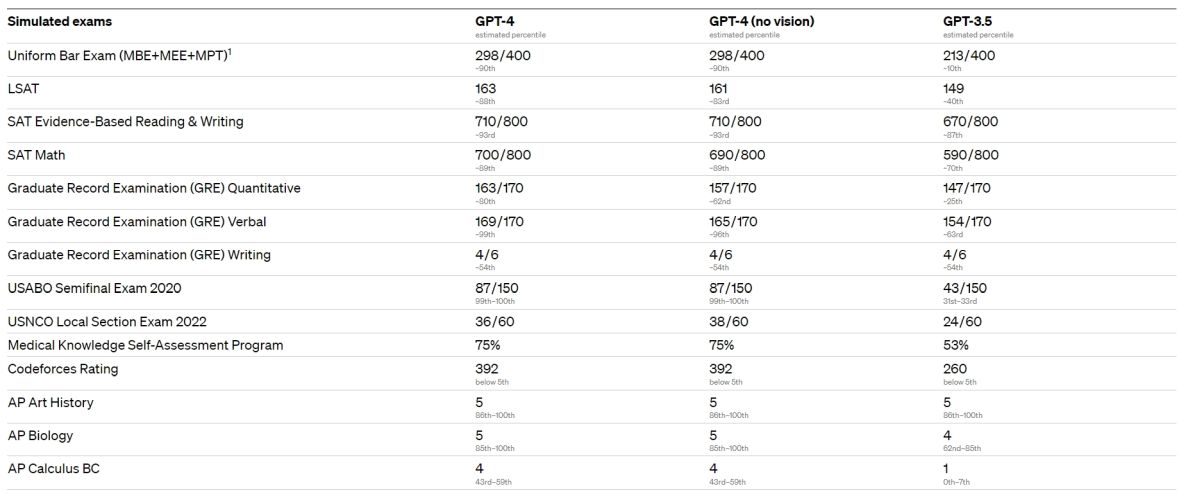

The wait is over: OpenAI has launched GPT-4, its new multimodal large language model (LLM), which outperforms GPT-3.5 in many ways. The company announced it on Twitter yesterday and showcased some of its abilities in a developer livestream.

OpenAI has captivated the world with technology it spent years building. ChatGPT's success got people wondering about the future, and millions have been watching for what comes next. Yesterday, OpenAI introduced GPT-4, its new language model and, by the company's account, its most capable and aligned one yet. CEO Sam Altman said GPT-4 is available to ChatGPT Plus subscribers and through OpenAI's API via a waitlist.

Announcing GPT-4, a large multimodal model, with our best-ever results on capabilities and alignment: https://t.co/TwLFssyALF pic.twitter.com/lYWwPjZbSg

— OpenAI (@OpenAI) March 14, 2023

Last week, Microsoft Germany's CTO said the new language model would be released this week. He also revealed that OpenAI was moving beyond a text-only chatbot toward a model that can describe the details of an image you upload.

"It is still flawed, still limited, and it still seems more impressive on first use than it does after you spend more time with it," says Altman. Before the release, he had also urged people to lower their expectations so that no one would be disappointed.

What can GPT-4 do?

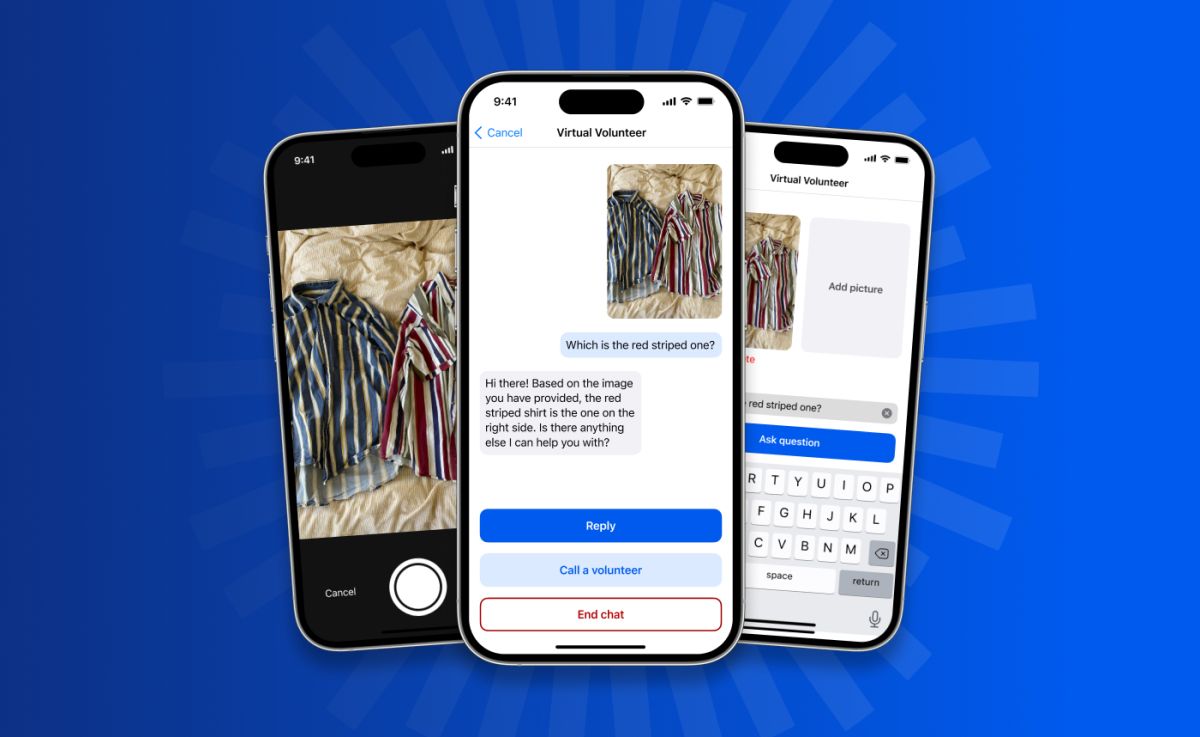

GPT-4 can do everything GPT-3.5 can. Beyond that, OpenAI built it to be more than a text-only chatbot: it can also understand visual inputs and respond to them. It can describe what an image shows, which can be very helpful in certain areas. For instance, it can tell color-blind people the color of a shirt, as the Be My Eyes example shows. GPT-4 can also read a map, identify a plant, translate a label, and handle many other tasks.

OpenAI described GPT-3.5 as a "test run" of its new training system, and the lessons learned there helped make GPT-4's training run stable. According to OpenAI, the new model is also harder to trick, better at refusing malicious prompts, and more "human-like" in how it responds to a variety of prompts.

OpenAI also tested GPT-4 on multiple-choice questions translated into 26 languages, and it outperformed GPT-3.5's English-language results in 24 of them. It still performs best in Romance and Germanic languages, but the other languages improved as well. GPT-4 also has a longer memory than its predecessor, with a context length of 8,192 tokens.
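Because the 8,192-token context has to hold both your prompt and the model's reply, it helps to budget for it. The sketch below assumes you already know the prompt's token count (for example, from a tokenizer); the function name `max_completion_tokens` is hypothetical and only illustrates the arithmetic.

```python
# Hypothetical helper: the prompt and the reply share one context window.
GPT4_CONTEXT_WINDOW = 8_192  # tokens, the figure quoted above


def max_completion_tokens(prompt_tokens: int, context_window: int = GPT4_CONTEXT_WINDOW) -> int:
    """Return how many tokens are left for the model's reply.

    Assumes prompt_tokens was counted with the model's own tokenizer.
    Real chat requests add a few tokens of per-message overhead,
    which this sketch ignores.
    """
    if prompt_tokens < 0:
        raise ValueError("prompt_tokens must be non-negative")
    # Never return a negative budget: an oversized prompt leaves no room at all.
    return max(context_window - prompt_tokens, 0)


if __name__ == "__main__":
    print(max_completion_tokens(6_000))  # 2192
    print(max_completion_tokens(9_000))  # 0
```

In other words, a 6,000-token prompt leaves roughly 2,000 tokens for the answer, so long documents and long answers compete for the same space.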

How to get access to GPT-4?

GPT-4 is currently available to the general public through ChatGPT Plus, which costs $20 per month. However, OpenAI has capped usage. "We will adjust the exact usage cap depending on demand and system performance in practice, but we expect to be severely capacity constrained (though we will scale up and optimize over upcoming months)," says the company. It also said that, depending on traffic patterns, it may introduce a new subscription level for higher-volume GPT-4 usage.

The second option is to join the waitlist for the GPT-4 API. Once you are granted access, you can make only text requests for now. Pricing is $0.03 per 1K prompt tokens and $0.06 per 1K completion tokens, and the default rate limits are 40K tokens per minute and 200 requests per minute.
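To see what those numbers mean in practice, here is a rough estimator built only from the launch prices and default limits quoted above. The function names are hypothetical, and the rate-limit helper assumes each request uses a fixed number of tokens against the per-minute budget; actual billing and limits may differ.

```python
from decimal import Decimal

# Launch prices quoted above, in US dollars per 1,000 tokens.
PROMPT_PRICE_PER_1K = Decimal("0.03")
COMPLETION_PRICE_PER_1K = Decimal("0.06")

# Default rate limits quoted above.
TOKENS_PER_MINUTE = 40_000
REQUESTS_PER_MINUTE = 200


def request_cost(prompt_tokens: int, completion_tokens: int) -> Decimal:
    """Estimated dollar cost of one request (Decimal avoids float rounding)."""
    prompt_cost = Decimal(prompt_tokens) * PROMPT_PRICE_PER_1K / 1000
    completion_cost = Decimal(completion_tokens) * COMPLETION_PRICE_PER_1K / 1000
    return prompt_cost + completion_cost


def max_requests_per_minute(tokens_per_request: int) -> int:
    """Requests per minute allowed by whichever default limit is hit first."""
    if tokens_per_request <= 0:
        raise ValueError("tokens_per_request must be positive")
    by_tokens = TOKENS_PER_MINUTE // tokens_per_request
    return min(by_tokens, REQUESTS_PER_MINUTE)


if __name__ == "__main__":
    # 1,500 prompt tokens + 500 completion tokens.
    print(f"${request_cost(1_500, 500):.3f}")  # $0.075
    # Large 2,000-token requests: the token limit binds first.
    print(max_requests_per_minute(2_000))  # 20
    # Small 100-token requests: the request limit binds first.
    print(max_requests_per_minute(100))  # 200
```

Note that completion tokens cost twice as much as prompt tokens, and that for large requests the 40K tokens-per-minute limit, not the 200-request limit, is usually what caps throughput.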

For the technical details, see the GPT-4 technical report published by OpenAI.
