MiniGPT-4 is as efficient and accessible as it gets

The AI community has been buzzing about OpenAI's latest Large Language Model, GPT-4, which has proven to be a game-changer in the field of Natural Language Understanding. Unlike its predecessors, GPT-4 is multimodal, which lets it perform complex vision-language tasks such as generating detailed image descriptions, building websites from handwritten instructions, and even creating video games and Chrome extensions. The reasons behind GPT-4's exceptional performance are not fully understood, but some experts attribute it to the use of a more advanced Large Language Model.

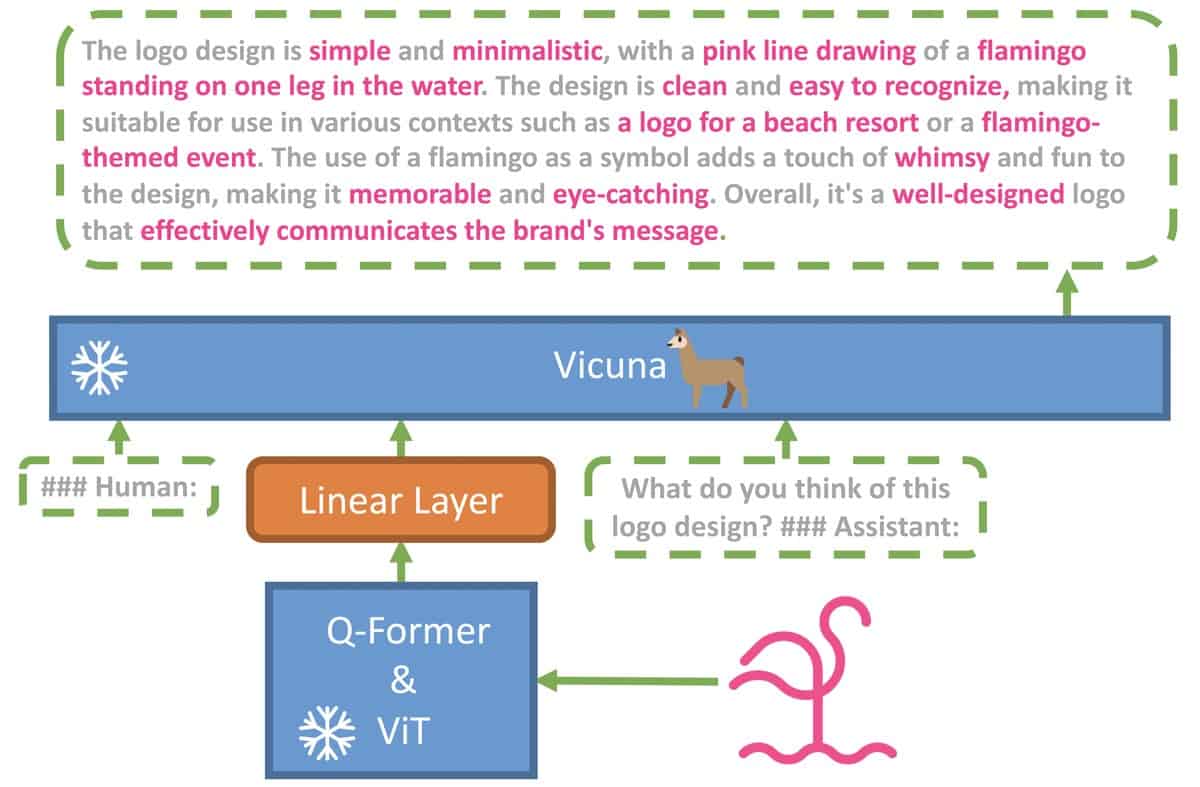

To explore this hypothesis, a team of Ph.D. students from King Abdullah University of Science and Technology, Saudi Arabia, has developed MiniGPT-4, an open-source model that performs many of the same complex vision-language tasks as GPT-4. MiniGPT-4 uses an advanced LLM called Vicuna as its language decoder. Vicuna is built upon LLaMA and is reported to achieve 90% of ChatGPT's quality as evaluated by GPT-4.

Additionally, MiniGPT-4 uses the pre-trained vision component of BLIP-2 and has added a single projection layer to align the encoded visual features with the Vicuna language model.
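To make the idea of a single projection layer concrete, here is a minimal sketch in plain Python. It is not the team's code: the function names, the toy dimensions, and the random initialization are all assumptions chosen for illustration. It only shows the general technique, a linear map that turns each visual feature vector from the vision encoder into a vector of the size the language model expects.

```python
import random


def make_projection(in_dim, out_dim, seed=0):
    """Create a hypothetical linear layer: an out_dim x in_dim weight matrix and a bias."""
    rng = random.Random(seed)
    scale = 1.0 / in_dim ** 0.5
    weight = [[rng.uniform(-scale, scale) for _ in range(in_dim)] for _ in range(out_dim)]
    bias = [0.0] * out_dim
    return weight, bias


def project(visual_tokens, weight, bias):
    """Apply y = W x + b to every visual token (one list of floats per token)."""
    return [
        [sum(w * x for w, x in zip(row, token)) + b for row, b in zip(weight, bias)]
        for token in visual_tokens
    ]


# Toy sizes, assumed for illustration only; the real models use much larger dimensions.
VISION_DIM = 8          # width of the vision encoder's output features
LLM_DIM = 16            # width of the language model's input embeddings
NUM_VISUAL_TOKENS = 4   # number of feature vectors produced for one image

weight, bias = make_projection(VISION_DIM, LLM_DIM)
visual_tokens = [[0.1 * (i + j) for j in range(VISION_DIM)] for i in range(NUM_VISUAL_TOKENS)]
projected = project(visual_tokens, weight, bias)

print(len(projected), len(projected[0]))  # 4 16
```

In a setup like this, only `weight` and `bias` would be learned, while the vision encoder and the language model stay frozen. That is one reason a single projection layer keeps training cheap.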

Don't be fooled by the name

MiniGPT-4 has shown great results in identifying problems from picture input. In one example, a user uploaded a photo of a diseased plant and asked what was wrong with it, and the model diagnosed the issue and suggested a solution.

It has even demonstrated the ability to generate detailed recipes by observing delicious food photos, writing product advertisements, and coming up with rap songs inspired by images. However, the team mentioned that training MiniGPT-4 using raw image-text pairs from public datasets can result in repeated phrases or fragmented sentences. To overcome this limitation, MiniGPT-4 needs to be trained using a high-quality, well-aligned dataset.

One of the most promising aspects of MiniGPT-4 is its computational efficiency: training the projection layer requires only approximately 5 million aligned image-text pairs. Furthermore, MiniGPT-4 only needs to be trained for approximately 10 hours on 4 A100 GPUs, making it a highly efficient and accessible AI model.
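For a sense of scale, the training budget quoted above can be turned into GPU-hours with simple arithmetic. The helper below is a hypothetical illustration, not part of the MiniGPT-4 code, and it uses only the approximate figures given in this article.

```python
def gpu_hours(num_gpus, wall_clock_hours):
    """Total compute budget: GPUs running in parallel times hours of training."""
    return num_gpus * wall_clock_hours


# Approximate figures from the article: 4 A100 GPUs for about 10 hours.
total = gpu_hours(num_gpus=4, wall_clock_hours=10)
print(total)  # 40
```

That comes to roughly 40 A100 GPU-hours for the training run described here.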

The code, pre-trained model, and collected dataset are all available, making MiniGPT-4 a valuable addition to the open-source AI community. You may try out MiniGPT-4 here.
