Rescuezilla: open source backup, restore and recovery environment

Rescuezilla is a free, open source disk imaging solution that supports data backup, restore and recovery operations. The application is operating system agnostic, as it runs from an optical disc or a USB drive rather than from an installed operating system. One benefit of this is that you can access the application at any time, even if the PC no longer boots.

Rescuezilla is fully compatible with Clonezilla, a disk imaging solution that is also open source. One of the main differences between the two solutions is that Rescuezilla has a graphical user interface, which should make it easier to use for some users.

The process of creating a working copy of Rescuezilla is straightforward:

- Download the latest version of the backup program from the official project website. Version 2.4, which we used for testing, is about 1 gigabyte in size.

- Use a USB writer program such as balenaEtcher to write the image to the USB drive. If you want to use a DVD instead, use a DVD burning application.

- Boot from the USB drive or the optical disc to launch the application.
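At its core, what a USB writer does in the second step is copy the image byte-for-byte onto the stick and then read it back to check the result; balenaEtcher offers a similar validation step. The Python sketch below illustrates that idea only and is not how balenaEtcher is implemented. `target_path` is a hypothetical placeholder: on Linux it would be a raw device such as `/dev/sdX`, which wipes that drive, so only try it against an ordinary file. A real tool would also bypass the operating system's cache when reading back.

```python
import hashlib
import os


def write_image(image_path: str, target_path: str, chunk_size: int = 4 * 1024 * 1024) -> str:
    """Copy an image byte-for-byte to target_path, then read it back to verify.

    target_path is a hypothetical placeholder: a raw device such as /dev/sdX on
    Linux (which destroys that drive's contents) or, for testing, an ordinary file.
    Returns the SHA-256 hex digest of the image.
    """
    image_hash = hashlib.sha256()
    with open(image_path, "rb") as src, open(target_path, "wb") as dst:
        while chunk := src.read(chunk_size):
            image_hash.update(chunk)
            dst.write(chunk)
        dst.flush()
        os.fsync(dst.fileno())  # push the data out to the device before verifying

    # Verification pass: re-read exactly as many bytes as the image holds,
    # because a USB stick is normally larger than the image written to it.
    remaining = os.path.getsize(image_path)
    target_hash = hashlib.sha256()
    with open(target_path, "rb") as dst:
        while remaining > 0:
            chunk = dst.read(min(chunk_size, remaining))
            if not chunk:
                break  # target ended early; the comparison below will fail
            target_hash.update(chunk)
            remaining -= len(chunk)

    if target_hash.hexdigest() != image_hash.hexdigest():
        raise OSError("verification failed: target does not match the image")
    return image_hash.hexdigest()
```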

Rescuezilla is based on Ubuntu Linux.

Once it has booted, you get easy options to create backups, restore previously created backups, clone a disk, verify images, or use the built-in image explorer.

When you select Backup, which you may do on the first start, you get a list of all connected drives with their capacity, drive model, and the partitions they contain. From there, you may choose to back up all partitions of the selected drive or only some of them.
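Under the hood, a Linux-based tool like Rescuezilla has to gather this drive and partition list from the kernel. The following is a minimal sketch of one way to do that, assuming the standard Linux `/proc/partitions` format (sizes given in 1 KiB blocks). It is not Rescuezilla's actual code, and grouping partitions under drives by name prefix is a simplifying heuristic; real tools query `/sys/block` or `lsblk`.

```python
def parse_proc_partitions(text: str) -> dict:
    """Group the entries of /proc/partitions into drives and their partitions.

    Returns {drive: {"size": bytes, "partitions": [(name, bytes), ...]}}.
    """
    entries = []
    for line in text.splitlines():
        fields = line.split()
        # Data rows have four fields starting with a numeric major number;
        # this skips the header row and blank lines.
        if len(fields) != 4 or not fields[0].isdigit():
            continue
        _major, _minor, blocks, name = fields
        entries.append((name, int(blocks) * 1024))  # #blocks counts 1 KiB units

    names = [name for name, _ in entries]
    # Heuristic: an entry whose name does not extend any other entry's name is a
    # drive (sda, nvme0n1); everything else is a partition (sda1, nvme0n1p1).
    drives = {}
    for name, size in entries:
        if not any(other != name and name.startswith(other) for other in names):
            drives[name] = {"size": size, "partitions": []}
    for name, size in entries:
        if name not in drives:
            parent = max((d for d in drives if name.startswith(d)), key=len)
            drives[parent]["partitions"].append((name, size))
    return drives
```

On a Linux system you would feed it the live file, e.g. `parse_proc_partitions(open("/proc/partitions").read())`.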

Backups may be stored on a destination drive connected directly to the computer, e.g., an external hard drive, or on a network share.

The restore option becomes available once the first backup has been completed. It is a simple process to restore an entire partition or all partitions of a drive.

Rescuezilla is compatible with virtual machine images, including those created by VirtualBox, VMware, Hyper-V and QEMU. It also supports raw image formats and may be used to clone disks.

Image Explorer is a beta feature of the open source application to browse files that are found inside backups.

Rescuezilla has a handful of extra features that may prove useful at some point. The solution includes a working web browser, which may be handy for quickly downloading drivers or updates required to repair a system. The tool also supports partition management, e.g., resizing partitions, which may likewise prove useful to some users.

Closing Words

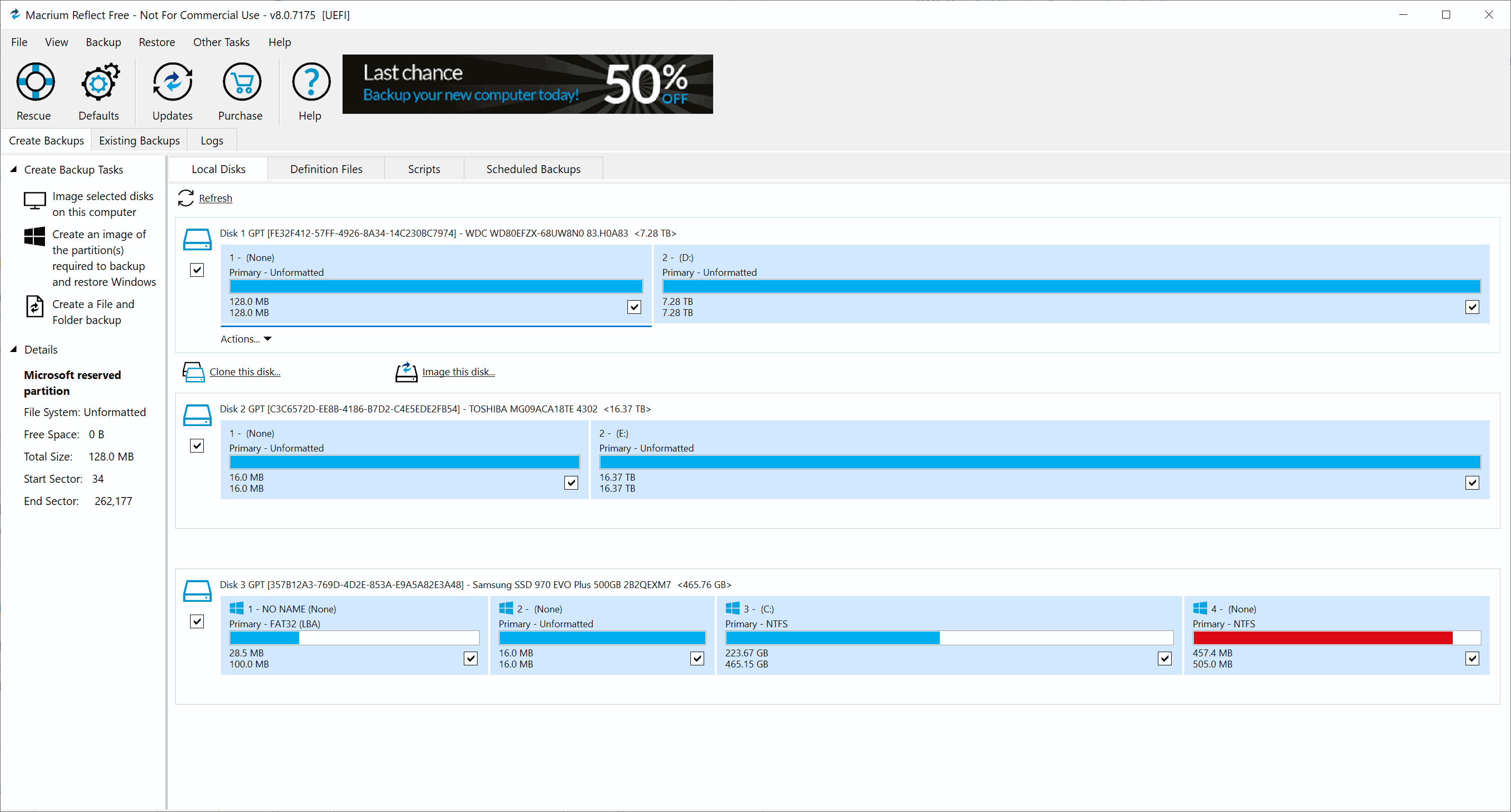

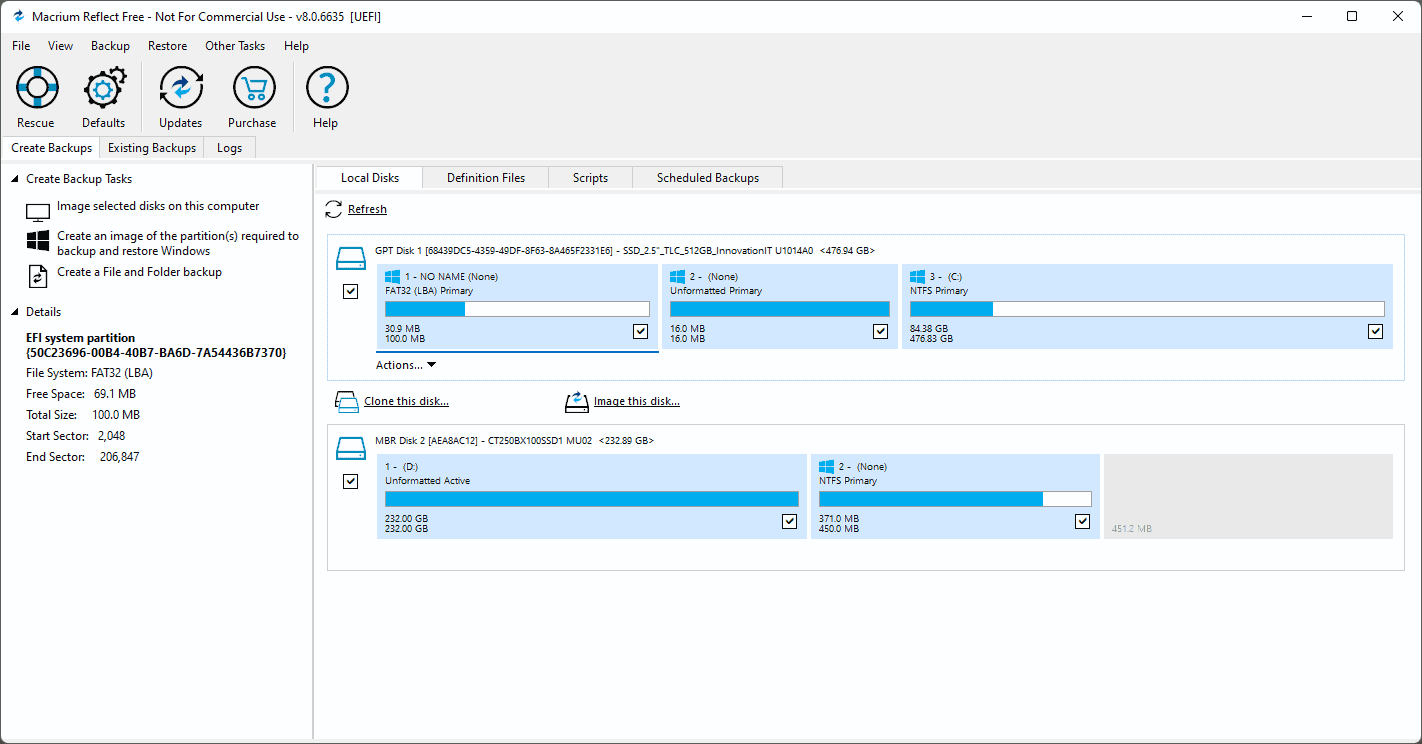

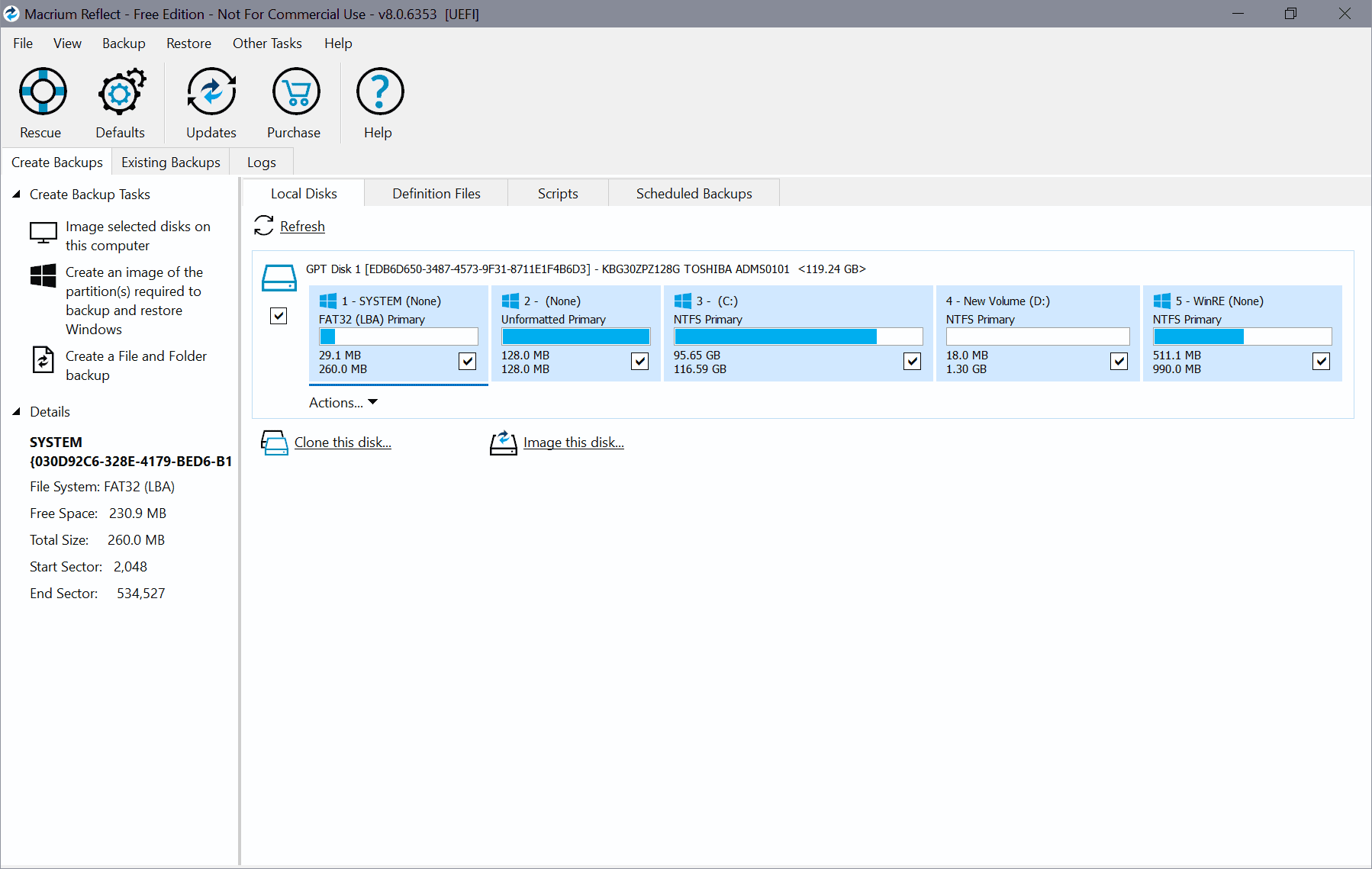

Rescuezilla is a powerful, operating system agnostic backup solution. Its user interface may look dated to some, but it is easy to use for the most part. The program offers features that many regular backup solutions do not, such as being operating system agnostic. On the other hand, it lacks support for scheduled backup jobs and some other features that backup programs such as Paragon Backup Recovery Free or Macrium Reflect 8 offer.

Now You: which backup solution do you use? (via Deskmodder)

This is probably a dumb question but, here goes:

Given that Rescuezilla runs from a USB source, can a single RZ instance service 2, or maybe 3 (2 Windows, 1 Linux) PCs, or is an individual USB required for each disk clone/system/data backup?

I too have been using Macrium Reflect with mixed levels of confidence (in particular, working out which image to restore from), and Acronis (I’m not a fan of having to reboot to perform a backup; I like the Macrium auto schedule). So, with the Macrium announcement, a rethink of backup convenience and safety is now due.

So I’ll be interested in seeing responses to my self-confessed dumbness.

AOMEI Backupper seems to have stopped offering “Explore Image” for its free version. So, it’s time to look for another system backup program.

Macrium Reflect is still my go-to backup software. I’m going to try MiniTool ShadowMaker as a second option.

https://www.runtime.org/driveimage_faq.htm

“Can I restore the image to a smaller partition?

No, you can only restore your data to a partition that is precisely the same size or larger, regardless of the data size.”

And presumably likewise for creating the clone from source to destination…?

This is the sort of issue that keeps me with Reflect. Not that Reflect has been trouble-free on Windows 10 (I never had an issue on Windows 7, and I successfully did at least one restore by swapping in a cloned SSD after a little “accident”). I had to upgrade to v8 after v6 and v7 gave an unspecified error and a cyclic redundancy check (CRC) failure (IIRC). A clone done under v8 looks good.

For me, it looks best to explore Clonezilla/Rescuezilla further when time permits, looking for workarounds for the “destination smaller than source” issue, and/or to hope this gets addressed, in Rescuezilla at least (as the developer seems to be contemplating).

For backing up and cloning (a partition, in my case) I use AOMEI Backupper. It does incremental clones, and that saves a tremendous amount of time. I use a multiple-backup system, but I mainly depend on a secondary internal hard drive as well as massive thumb drives and an external hard drive. I loathe compressed backups. If you don’t like that, too bad; not liking them works for me. To each their own.

DriveImage XML (https://www.runtime.org/dixml.htm) for Windows works on a live system and can restore individual folders/files. It’s a small utility that can be run from a live Windows restore session.

For Linux, Timeshift (rsync front-end) works perfectly.

Just had a look. It has no real help, only a forum. This is a deal-breaker for such a program. Highly technical, and far too many things could go wrong. Quality of help is one of my top criteria to select a program.

@ Peterc

> FreeFileSync’s RealTimeSync module

I’ve always stopped at that. The RealTimeSync module is impenetrable to me. Why don’t they put such a needed feature into the program itself instead of adding a “module”?

Regarding your setup, Macrium can do file and folder backups as well as imaging and cloning. So why do you prefer FreeFileSync, which adds a second program?

@Clairvaux:

To be fair, FreeFileSync *does* in fact offer an online manual. It’s *very* succinct, but it’s a good starting point. Additionally, there is an online tutorials section.

I don’t know why FFS’s author made RealTimeSync a separate module, but I can see how doing so *might* help idiot-proof the program. Carelessly designed FreeFileSync batch jobs can wreak *havoc*. Forcing users to create separate RealTimeSync tasks “encourages” them to thoroughly vet their batch jobs by running compares in the GUI and seeing what the jobs will actually do. If users could simply enable a RealTimeSync option in FFS’s GUI, a lot more bad batch jobs could end up running automatically and causing cumulative damage until the user realized what was going on. (Of course, this problem would never affect you or me, because we’re just *too darn smart and careful*, and we’re *never* tired or distracted! ;-)

I don’t recall how well RealTimeSync was explained in the online documentation, but (from memory) it’s pretty straightforward. You launch RealTimeSync; you “open” a FreeFileSync batch file from it; it imports all of the folders in the batch job; you specify a triggering delay (from the time a change in one of the folders is detected to the time the associated batch job is launched); and you save the new RealTimeSync task. Then, when the RealTimeSync task is running (in background), it monitors all of the “imported” folders for changes, and it launches the associated FreeFileSync batch job X seconds after a change is detected.
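The monitoring loop described above can be approximated with simple polling. This is a hypothetical sketch, not RealTimeSync's implementation (which presumably relies on operating-system change notifications rather than rescanning folders); `run_batch_job` stands in for launching the FreeFileSync batch job, and `delay_seconds` plays the role of the triggering delay.

```python
import os
import time


def snapshot(folders):
    """Map every file under the monitored folders to its last-modified time."""
    state = {}
    for folder in folders:
        for root, _dirs, files in os.walk(folder):
            for name in files:
                path = os.path.join(root, name)
                try:
                    state[path] = os.stat(path).st_mtime_ns
                except FileNotFoundError:
                    pass  # the file vanished between listing and stat
    return state


def changed_paths(before, after):
    """Files added, removed, or modified between two snapshots."""
    return {p for p in before.keys() | after.keys() if before.get(p) != after.get(p)}


def watch(folders, run_batch_job, delay_seconds=10, poll_seconds=2):
    """Poll forever; launch the job delay_seconds after a change is detected."""
    last = snapshot(folders)
    while True:
        time.sleep(poll_seconds)
        current = snapshot(folders)
        if changed_paths(last, current):
            time.sleep(delay_seconds)    # the user-configured triggering delay
            run_batch_job()              # hypothetical stand-in for the FFS batch job
            current = snapshot(folders)  # re-baseline; changes made while the job ran go unnoticed
        last = current
```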

It’s not perfect. For example, RealTimeSync doesn’t import exclusion filters from FreeFileSync batch jobs, and there’s no way to add them manually. FreeFileSync runs faster and captures more files by default if you specify a parent folder pair and use exclusion filters to get rid of a small number of problematic/unwanted subfolders than if you list all of the non-problematic subfolder pairs one by one.

By using exclusion filters for Roaming AppData subfolders in my personal “Config” batch job, I got the total number of folder pairs in the job down from over 100 to around 30, *plus*, I back up user profiles for any new programs I might install without having to manually add their subfolder pairs to the FFS job. But my filtered Config batch job is *not* RealTimeSync-able. If I were to import it into RealTimeSync as-is, it would monitor all of the subfolders I’d filtered out of the FFS job and launch the job any time one of those filtered-out subfolders changed. Nothing from those filtered-out subfolders would actually get *copied*, but the FreeFileSync batch job would launch, run, and consume CPU cycles, RAM, and storage bandwidth nonetheless. And since a lot of browsers have files in their Roaming AppData subfolders that are continuously changing, well, RTS would cause my filtered Config FFS job to launch and run ALL OF THE TIME whenever one of those browsers is running. [Plus, with SSDs, you’d have the additional problem of prematurely “wearing out” the drive.] Nope.

What you’d have to do is manually create a RealTimeSync task, replacing the FFS job’s parent-folder-pair-plus-exclusions with a full listing of all of the non-problematic subfolder pairs. And then you would *absolutely* have to manually add new subfolder pairs to the RTS task for any new programs whose user profiles you want RTS to automatically back up.

Conceptually, it would be nice if, when RTS detected a “changed folder,” it asked “Are the *only* changes in this folder covered by exclusion filters? If so, then *don’t run* the associated FFS batch job.” Not being a programmer, however, I have no idea what kind of resource overhead this additional step might require.
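The check proposed above (“Are the *only* changes covered by exclusion filters?”) is cheap once you have the list of changed paths: one pattern match per changed path, which is small next to launching a full batch job. A minimal sketch, assuming shell-style glob patterns via Python's `fnmatch`; FreeFileSync's own filter syntax may differ, and the function names and filter list are hypothetical.

```python
from fnmatch import fnmatch


def is_excluded(path, exclusion_patterns):
    """True if the path matches any exclusion pattern (shell-style globs)."""
    return any(fnmatch(path, pattern) for pattern in exclusion_patterns)


def should_run_job(changed, exclusion_patterns):
    """Launch the batch job only if some change is NOT covered by an exclusion filter."""
    return any(not is_excluded(path, exclusion_patterns) for path in changed)


filters = ["AppData/Roaming/Mozilla/*"]  # hypothetical filter list
print(should_run_job(["AppData/Roaming/Mozilla/Firefox/cookies.sqlite"], filters))  # → False
print(should_run_job(["AppData/Roaming/GIMP/gimprc"], filters))  # → True
```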

I’ve never looked at Macrium for file and folder backups. One thing I appreciate about FreeFileSync is its relatively fine-tuned control over versioned backups. If Macrium Reflect offers similar control, then it might be an option. But can Macrium do real-time syncing, or would I have to manually create a bunch of scheduled tasks? And finally, FreeFileSync is available for Linux, which I plan to start using again “sometime soon” (once I have two big internal SSDs in my laptop). Macrium Reflect isn’t. In the past, when I was running both Windows and Linux, FFS’s cross-platform support was pretty convenient, because I could take at least *some* of my batch jobs from one system (“data” jobs, “big data” jobs, and, to a lesser extent, some of the “config” jobs), open them as XML files in a text editor, do global search-and-replaces to change paths (and backslashes/forward slashes), and save them as batch jobs for the other system, ready to be vetted. It saved me a fair amount of work.
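The global search-and-replace step can be scripted. A minimal sketch that treats the batch file as plain text: the prefix mapping and the sample XML below are hypothetical and not FreeFileSync's actual schema, and, as above, the converted jobs would still need vetting. Matching is case-insensitive because Windows paths are.

```python
import re


def convert_batch_paths(text, prefix_map):
    """Rewrite Windows path prefixes (and their backslashes) to Linux paths.

    prefix_map maps Windows prefixes to Linux prefixes, e.g.
    {"C:\\Users\\peter": "/home/peter"} (hypothetical paths).
    """
    # Longest prefixes first, so a more specific mapping wins.
    for win_prefix in sorted(prefix_map, key=len, reverse=True):
        linux_prefix = prefix_map[win_prefix]
        # The prefix must end at a path boundary; then consume the rest of the
        # path up to the next XML delimiter or line break.
        pattern = re.compile(
            re.escape(win_prefix) + r'(?=[\\<>"\r\n]|$)[^<>"\r\n]*', re.IGNORECASE
        )

        def to_linux(match, win_prefix=win_prefix, linux_prefix=linux_prefix):
            rest = match.group(0)[len(win_prefix):]
            return linux_prefix + rest.replace("\\", "/")

        text = pattern.sub(to_linux, text)
    return text


sample = "<Left>C:\\Users\\peter\\Documents</Left>"
print(convert_batch_paths(sample, {"C:\\Users\\peter": "/home/peter"}))
# → <Left>/home/peter/Documents</Left>
```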

Anyway, I’m very familiar with FreeFileSync; I’m passably competent at using it; I like that it’s fully cross-platform with Linux; and I actually *like* having a second program for file backups (as it avoids a single point of failure). That said, I’m aware of a lot of its shortcomings. It doesn’t do delta-copying; RTS doesn’t import exclusion filters (or *inclusion filters*, for that matter); RTS tasks have a “blind spot” while their associated FFS batch jobs are running (they don’t detect *new* changes that occur when the job has been previously triggered and is still running); and I’ve run into significant batch-job-parsing delays in FFS’s GUI when Windows has been up and running for a long time (either Windows 10 or FFS is apparently not doing a very good job of “garbage collection”).

There’s no getting around FFS’s missing delta-copy functionality for big files (at least not without using an entirely different program); RTS tasks can be manually hacked to accommodate “filtered” batch jobs, though it’s a PITA; RTS blind spots can be reduced in duration (if not eliminated) by using multiple smaller batch jobs with separate RTS tasks in lieu of one huge batch job triggered by a single RTS task; and, well, I’m still trying to find a way around that parsing-delay problem short of rebooting. It’s possible I might be better served by a different program, like Syncthing (which also seems to adhere to the open-source ethos more faithfully than FFS does), but I’m not looking forward to learning how to use it to good effect.

(I’ve been a full-time, round-the-clock family caregiver to a seriously ill and disabled relative for a few days shy of a year, and I began those duties just as I was beginning to recover from a year and a half with long-haul COVID. I’m *exhausted*. Just having the time to post to gHacks a few times in the past few days has been a real luxury. Mastering a new, *very different* syncing program requires a lot of attention to detail, and I’m not sure I’m capable of doing it right now!)
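The blind spot, and why splitting one huge job into smaller ones shortens it, can be illustrated with a toy model. The timing rules here are assumptions made for illustration, not documented RealTimeSync behavior: a detected change starts the trigger delay, changes during that countdown are covered by the upcoming run, and changes that occur while the job is running go unnoticed.

```python
def simulate_rts(change_times, delay, job_duration):
    """Return (launch_times, missed_changes) under a simplified, assumed RTS model."""
    launches, missed = [], []
    job_start = float("-inf")  # when the pending or current job starts
    job_end = float("-inf")    # when that job finishes
    for t in sorted(change_times):
        if t < job_start:
            continue           # countdown pending: the upcoming run will cover it
        if t < job_end:
            missed.append(t)   # job running: the blind spot
            continue
        job_start = t + delay  # idle: this change starts a new countdown
        job_end = job_start + job_duration
        launches.append(job_start)
    return launches, missed


changes = [0, 5, 12, 30, 100]  # made-up change timestamps, in seconds
print(simulate_rts(changes, delay=10, job_duration=60))  # → ([10, 110], [12, 30])
print(simulate_rts(changes, delay=10, job_duration=5))   # → ([10, 40, 110], [12])
```

With the shorter job, the window in which changes slip past shrinks, which matches the idea of using several smaller batch jobs with separate RTS tasks.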

Having lived (whether vicariously, through friends and family, or personally, first-hand) through the IBM “Deathstar” hard-drive fiasco (vicariously), the WD “click of death” hard-drive fiasco (vicariously), several post-2014 system-borking-Windows-update fiascos (vicariously), one system-borking Wireshark-update fiasco (personally), and one system-borking malware infection (vicariously), I’m borderline compulsive about maintaining up-to-date clones or images of my system drive and discrete backups of all of my data files and a lot of my configuration files.

Back when I had 32-bit Windows XP on an MBR drive on a desktop computer with an old-school BIOS, xxclone performed a combination clone/backup quickly and reliably in a single operation. (xxclone was even thoughtful enough to leave the target drive’s original volume label intact.) With the advent of 64-bit Windows, UEFI, and GPT drives, I had to switch to something different, and I ended up using Macrium Reflect for the cloning and imaging and FreeFileSync for the backing up.

My ideal solution (in Windows) is to have a second internal drive that I periodically clone the system drive to — typically, just before I’m about to install Patch Tuesday updates, but also if I’ve installed a new program and done a significant amount of configuration work on it. Then, between clones, I keep all of the data files and select configuration files on the clone drive up to date by automatically copying them over in close to “real time” using FreeFileSync’s RealTimeSync module. (Some folders don’t lend themselves to real-time syncing, like browser profiles, which are continuously changing, and LibreOffice user profiles, which crash RealTimeSync, so those copy jobs I’d run manually from time to time, when the program in question isn’t running. I expect I could also run them as “scheduled tasks,” triggered by their respective programs’ exit, but then I’d have to learn how to do something *new*. ;-) With this approach, if something borks my system drive, I can just switch to my clone drive and have ALL of my latest data-file changes and … well, a *lot* of my latest configuration changes … already onboard without having to take any special steps. The system worked magnificently well on my previous computers, with the added benefit that using FreeFileSync allowed me to maintain a depth of versioned backups from whatever folders I chose (typically, those containing reasonably sized data files).

My “new” laptop has only one internal drive, so for now, I’m stuck writing system-drive images to an external drive (and backing up *that* drive on other external drives), and maintaining a separate structure of file backups on an external drive (ditto). It works fine, but recovering from a borked system drive is going to take a couple orders of magnitude longer. I’m looking forward to the day I can install two big SSDs in my laptop and return to my old, heavily automated, tried-and-true method.

There’s one big thing I value in cloning/imaging utilities, and one big thing I value in backup/syncing utilities:

For the cloning/imaging utilities, it’s the ability to accurately and reliably clone a *running system*. On a good day, it takes Macrium Reflect over 3½ hours to image my laptop’s system drive, and I appreciate that I can continue using the laptop once I have got the imaging job launched and running. I don’t know whether any other cloning/imaging utilities for Windows support that, but I’m pretty sure that no Linux utilities do. (I believe that for a Linux system partition to be “perfectly”/completely cloned or imaged, it has to be unmounted.)

For the backup/syncing utilities, it’s whether the utility supports delta-copying/delta-syncing. If you’ve ever re-tagged a big collection of MP4s, or backed up virtual machines (without versioning), you’ll understand why. (And if you haven’t, it’s because these files are *big*, the changes to them may be quite small, and without delta-copying the *entire file* has to be copied from scratch. New backups take *hours* instead of minutes.) I’m reasonably accomplished at using FreeFileSync, but it doesn’t support delta-copying so I am about to investigate using Syncthing instead, at least for videos and virtual machines. There’s an old-dog/new-tricks problem with that, but on the other hand, old dogs don’t like waiting forever for backups to complete either.
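Delta-copying boils down to comparing the old and new versions block by block and writing only what changed. A simplified sketch with hypothetical function names: it compares fixed-offset blocks, which helps when edits overwrite bytes in place but not when data is inserted and everything after it shifts; rsync handles that case with a rolling checksum. In a real tool, the backup's block hashes would be computed on the destination side, so only hashes and changed blocks need to travel.

```python
import hashlib


def block_digests(data, block_size):
    """SHA-256 digest of each fixed-size block of data."""
    return [hashlib.sha256(data[i:i + block_size]).digest()
            for i in range(0, len(data), block_size)]


def delta_update(backup, source, block_size=4096):
    """Bring the bytearray `backup` in line with `source`, rewriting only differing blocks.

    Returns the number of bytes actually written.
    """
    old = block_digests(bytes(backup), block_size)
    written = 0
    for index, digest in enumerate(block_digests(source, block_size)):
        if index < len(old) and old[index] == digest:
            continue  # unchanged block: skip it
        start = index * block_size
        block = source[start:start + block_size]
        backup[start:start + len(block)] = block
        written += len(block)
    del backup[len(source):]  # truncate if the file got shorter
    return written
```

Flipping a single byte in a 64 KiB file, for example, costs one 4 KiB block write instead of a full 64 KiB copy.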

And finally (YES!), if I once again end up with a computer that doesn’t have at least one Windows boot on it (and is therefore not amenable to cloning/imaging using Macrium), I will definitely take a look at Rescuezilla (and, why not, FoxClone, too).

In my initial post, I carelessly wrote “New backups take *hours* instead of minutes.” What I should have written was “UPDATED backups take *hours* instead of minutes.” Delta-copying doesn’t come into play with “new” (initial, original) backups, and the initial backup of a big file is going to take a long time regardless.

In other “updated” news, I’ve learned that Syncthing (like Resilio Sync) works only between different devices, not between different drives on the same device, so if I wanted to use it for backing up, I’d have to connect my “external drive rig” to a different computer.

I’ve also read up a little on the SyncBack family of utilities from 2BrightSparks. I remember using one or two utilities from 2BrightSparks back in the days of XP and thinking they were pretty good, but when I read about SyncBack, I noticed that to get real-time syncing you have to pay for the SyncBackSE or SyncBackPro edition (doable if they’re worth it), and further, that for real-time syncing to work, both ends of the sync have to be running Windows. (That eliminates real-time syncing between Windows and Linux computers, as well as between a Windows computer and most NAS devices, since most NAS devices don’t run Windows.) At the time I tried to test real-time syncing (and manual syncing!) directly between a Windows and a Linux computer using FreeFileSync, Samba was working *HORRENDOUSLY POORLY* in the distros I was using, so I never got a chance to find out how well FreeFileSync worked for that application, as I could *not* get a stable network path.

Anyway, for now I’m still using Macrium Reflect for imaging and FreeFileSync for backups … and grumbling a little about FFS’s lack of delta-copy functionality. I *did* in fact re-tag a bunch of videos on my main “always-connected” 2.5″ backup/video-archive drive a couple of weeks ago. I previously mirrored that drive to my other “always-connected” 2.5″ backup drives and have forgotten exactly how long that took (other than that it took a *LONG* time). But I’m doing a monthly mirror to my two 3.5″ backup drives right now. My command script launched the first mirror job over 24 hours ago, and FreeFileSync tells me the second mirror job still has 3½ hours to go. I’m guessing that with delta-copy functionality, the total time required for the two mirrors would have been cut by a good 22-23 hours. So: grumble, grumble, grumble. ;-)

> FreeFileSync

App Notes (source: https://portableapps.com/apps/utilities/freefilesync-portable)

Later Versions Broken and Pay-For-Portable: All versions of FreeFileSync after 6.2 are broken and can’t be run from other apps. This prevents packaging, use of the base portable version with menus like the PortableApps.com Platform, use of the local version with a scheduler, use of the local version with an alternative start menu, etc. Details are in this bug report from March 7, 2014 which the publisher did not address. The current version of FreeFileSync can not be used portably without paying a fee, is no longer fully open source, and may be in violation of the GPL.

@owl:

Using much more recent versions of FreeFileSync than 6.x, I’m not sure whether I’ve run into any of these problems or not. I’ve been able to launch FreeFileSync’s and RealTimeSync’s GUI from Open-Shell’s menu; I’ve been able to schedule RealTimeSync tasks to start on user sign-in using Windows’ Task Scheduler; and I’ve been able to run at least one sequence of batch jobs using a Windows command script. On the other hand, I recently made a longer command script using exactly the same approach/structure as the first script but targeting a different drive and containing considerably more batch jobs (all of which run just fine in the GUI), and *that* script just launches a command window, displays the first command (to run the first batch job), sits there for 10 seconds or so doing nothing, and closes without even running my error subroutine. I’m not finding any obviously relevant error logs in Event Viewer and I’m at a bit of a loss to figure out how to debug it … but I’ll find a way eventually.

As for potential GPL violations, well, I’ve never studied open-source software licensing, but my impression is that the author actively develops and debugs the program and (understandably) wants to get paid for his work. The free edition is crippleware (being limited to single-threading for at least some operations), and if you want full multi-threading or the portable version, you have to pay (subscribe). Whether or not he’s technically in violation of whatever license he chose to release the package under, I’m guessing *that’s* why he was in no hurry to address whatever was causing the PortableApps problem. Anyway, while he similarly seems to be in no hurry to add new requested features that would require a lot of work and add complexity, he *does* generally respond to user feedback and he *does* fix bugs, and that alone puts him in the top half of “free and open-source” developers.

What benefit does an uncompressed 1:1 clone have over a 1:1 full-disk image with XZ compression, other than saving the <15-minute restore time? The write amplification alone is crazy.

@Frankel:

“[T]he <15 minute restore time . . .”

Really? That's amazing! I haven't had to restore from an image yet (and hopefully never will), but my boot/system-drive images are around 140GB in size and they take around 1½ hours to copy from one external USB 3.0 hard drive (with UASP) to another identical drive. By what magic would I be able to restore them to my laptop's internal SSD in under 15 minutes? Color me skeptical. Are you suggesting that it would be under 15 minutes if I imaged to a second internal NVMe SSD (which I don't yet have, and which on my current laptop would be limited to two PCIe lanes)? Or to an external SSD (which I don't yet have) via Thunderbolt 3?
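The skepticism is easy to quantify. Assuming decimal gigabytes and a single sequential pass, here is the average sustained throughput each time budget implies for a 140 GB image:

```python
def required_throughput_mb_s(size_gb: float, minutes: float) -> float:
    """Average rate in MB/s (decimal units) needed to move size_gb gigabytes in the given time."""
    return size_gb * 1000 / (minutes * 60)


print(f"{required_throughput_mb_s(140, 90):.1f} MB/s")  # observed USB-to-USB copy → 25.9 MB/s
print(f"{required_throughput_mb_s(140, 15):.1f} MB/s")  # a 15-minute restore → 155.6 MB/s
```

In other words, a 15-minute restore needs roughly six times the rate observed when copying the images between the two external drives.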

With a cloned drive, if you have a properly functioning motherboard/UEFI, all you have to do to recover from a catastrophic system-drive failure is select the clone as the "new" boot drive. (On a friend's computer that I administered, I ran into a motherboard/UEFI whose boot-drive selection feature didn't work. You had to physically swap drives and "power-cycle" the computer's power supply for the new boot drive to be recognized. He had a tower computer with mobile drive racks, so instead of taking 1½ minutes to recover from a borked system — which happened to him at least three times that I can recall — it took him more like 3 minutes. And because I'd set up real-time updating of all of his data files on the cloned drive, he never suffered any data loss and he never had to restore any data files from a separate backup. It was truly 3 minutes to full recovery, with nothing additional to do. Well … apart from cloning the clone back onto the borked system drive, in background.)

Another advantage of clones over images is that you can *test* a cloned boot drive simply by booting to it. I suppose you could *tentatively* test a Macrium Reflect image by loading it in a hypervisor, but my understanding is that a hypervisor is not a perfect substitute test for a bare-metal install. (I'm happy to be corrected, with specific examples of hypervisors that *are* perfect substitutes.)

Finally, with a 1:1 clone, if your system drive suffers *physical/mechanical failure*, you're good to go and can keep using your computer while you're shopping for a replacement drive. If you've imaged instead of cloning, you have to wait until your new drive is installed before you can do *anything*.

Anyway, given my current hardware limitations, I'm having a hard time imagining how I could restore my system drive from an image in under 15 minutes.

@Peterc:

I’m not reading all that, but I store and restore from a Ryzen 3700X with NVMe to a /home/partimage mount point: a restore takes 15 minutes and compression takes under 25 minutes. Weaker machines could use pigz or another parallel zlib implementation, at some loss of compression efficiency. But any compression is better than none for software.

Of course, people often don’t understand data separation and try to compress nearly incompressible data. Hopefully no tech-literate person is storing movies on their C:\ drive.

It works if you compress the right data with the right algorithm. For media, I have other, dedicated drives and use robocopy.
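The point about matching compression to the data is easy to demonstrate with Python's built-in `lzma` module, which produces the same XZ format: repetitive data shrinks dramatically, while random bytes, standing in for already-compressed media such as movies, do not shrink at all. The sample data is made up for illustration.

```python
import lzma
import os


def compression_ratio(data: bytes, preset: int = 6) -> float:
    """Compressed size divided by original size (lower is better)."""
    return len(lzma.compress(data, preset=preset)) / len(data)


text_like = b"C:\\Windows\\System32\\drivers\\etc\\hosts\n" * 20_000  # highly repetitive
media_like = os.urandom(len(text_like))  # random bytes stand in for already-compressed video

print(f"repetitive data: {compression_ratio(text_like):.3f}")
print(f"random data:     {compression_ratio(media_like):.3f}")
```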

>Are you suggesting that it would be under 15 minutes if I imaged to a second internal NVMe SSD

Even less with parallel ZIP, provided your CPU can handle it. Ryzen or bust.

Addendum: Again, I only use parallel XZ. But a backup and restore with parallel zip can be less than 10 mins from SSD to SSD.
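Parallel compressors get their speed by splitting the input into chunks and compressing them at the same time. The minimal sketch below shows that idea. Because each chunk starts with an empty dictionary, this simple approach gives up a little compression. It relies on the fact that concatenated .xz streams are still valid input for `lzma.decompress`. Real tools such as pigz and multithreaded xz are more refined; for instance, they produce a single stream. Threads are used here for simplicity, and a process pool would sidestep any GIL concerns.

```python
import lzma
import os
from concurrent.futures import ThreadPoolExecutor


def parallel_xz(data: bytes, chunk_size: int = 8 * 1024 * 1024, workers=None) -> bytes:
    """Compress fixed-size chunks concurrently and concatenate the .xz streams."""
    if not data:
        return lzma.compress(data)
    chunks = [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]
    with ThreadPoolExecutor(max_workers=workers or os.cpu_count()) as pool:
        # map() returns results in input order, so the streams stay in sequence.
        return b"".join(pool.map(lzma.compress, chunks))
```

Restoring is just `lzma.decompress(packed)`, which reads the concatenated streams back as one.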

Can you restore individual files from the backup easily, or just the whole image?

>Now You: which backup solution do you use?

I have recently started using the Foxclone backup, restore, and cloning utility, a neat tool created by a Linux Mint user.

It is similarly cross-platform: you write it to a USB stick and boot from that. It seems to work well.

The comprehensive website explains everything and includes a download link for the user manual.

“Rescuezilla does NOT yet automatically shrink partitions to restore to disks smaller than original. This feature will be added in future version.”

https://github.com/rescuezilla/rescuezilla

From the above, it’s not clear to me, without actually doing a trial imaging operation, whether a source drive can be successfully imaged if it is, say, 0.3 GB bigger than the destination drive (but the source drive is only, e.g., half full).

Related to that: unless I’ve missed it, the page doesn’t say whether intelligent copying is used when imaging a disk, i.e., whether unused/blank sectors are skipped (as in Macrium Reflect, etc.).
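“Intelligent” copying means consulting the filesystem's allocation bitmap and storing only the blocks marked as in use; as far as I know, Clonezilla does this through partclone. A toy sketch of the idea, with made-up block data and a hypothetical bitmap; real tools read the bitmap from the filesystem itself.

```python
def image_used_blocks(device: bytes, bitmap, block_size: int) -> dict:
    """Keep only blocks the filesystem marks as allocated; free blocks are skipped."""
    return {
        index: device[index * block_size:(index + 1) * block_size]
        for index, in_use in enumerate(bitmap)
        if in_use
    }


def restore_used_blocks(image: dict, total_blocks: int, block_size: int) -> bytes:
    """Rebuild a device of total_blocks blocks; skipped blocks come back as zeros."""
    device = bytearray(total_blocks * block_size)
    for index, block in image.items():
        device[index * block_size:index * block_size + len(block)] = block
    return bytes(device)
```

Note that the restored blocks go back to their original offsets, so the target still has to span the full original block range unless the filesystem is shrunk first.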

Clonezilla has this feature:

[https://clonezilla.org/clonezilla-live/doc/02_Restore_disk_image/advanced/09-advanced-param.php]

German:

[https://www.thomas-krenn.com/de/wiki/Wiederherstellung_auf_einen_kleineren_Zieldatentr%C3%A4ger_mit_Clonezilla#Restore_des_neuen_Disk-Images_auf_kleinerem_Zieldatentr.C3.A4ger]

As chance would have it, before coming back to this page I had just deployed the following via a Ventoy multiboot USB drive:

clonezilla-live-3.0.1-8-amd64.iso

and, being a beginner, selected beginner mode to clone the internal SSD to an external SSD.

After selecting relevant options I started the clone and it stopped with an error: destination drive smaller than source:

source: 468877312 sectors

destination: 468862128 sectors

So the fact that Clonezilla says it does not copy unoccupied sectors makes no difference in beginner mode: it just fails if the source device is even marginally larger than the destination device, even if the source is only, e.g., 1/3 full.
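The numbers in that error message are worth working out. Assuming 512-byte logical sectors (common, but check your drives), the destination falls short by only a few megabytes, even though the data itself would fit easily. The helper names are hypothetical.

```python
def shortfall_bytes(source_sectors: int, dest_sectors: int, sector_size: int = 512) -> int:
    """Bytes by which the destination is too small for a sector-for-sector layout (0 if it fits)."""
    return max(0, (source_sectors - dest_sectors) * sector_size)


def data_would_fit(used_bytes: int, dest_sectors: int, sector_size: int = 512) -> bool:
    """Whether the used data alone fits, i.e. whether shrinking the partition first could help."""
    return used_bytes <= dest_sectors * sector_size


source, dest = 468877312, 468862128  # sector counts from the error message above
gap = shortfall_bytes(source, dest)
print(source - dest, gap, f"{gap / 2**20:.2f} MiB")  # → 15184 7774208 7.41 MiB
print(data_would_fit(source * 512 // 3, dest))  # source one-third full → True
```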

I see that link relates to advanced options for restore. When I have more time, I may look for advanced options for clone; a quick search did not locate them. Meanwhile, it’s back to Macrium Reflect (upgraded to v.7* as v.6 had started failing with an unspecified error due to some Microsoft issue…)

Yes Please Indeed!

OS-agnostic disk cloning? Yes please!!