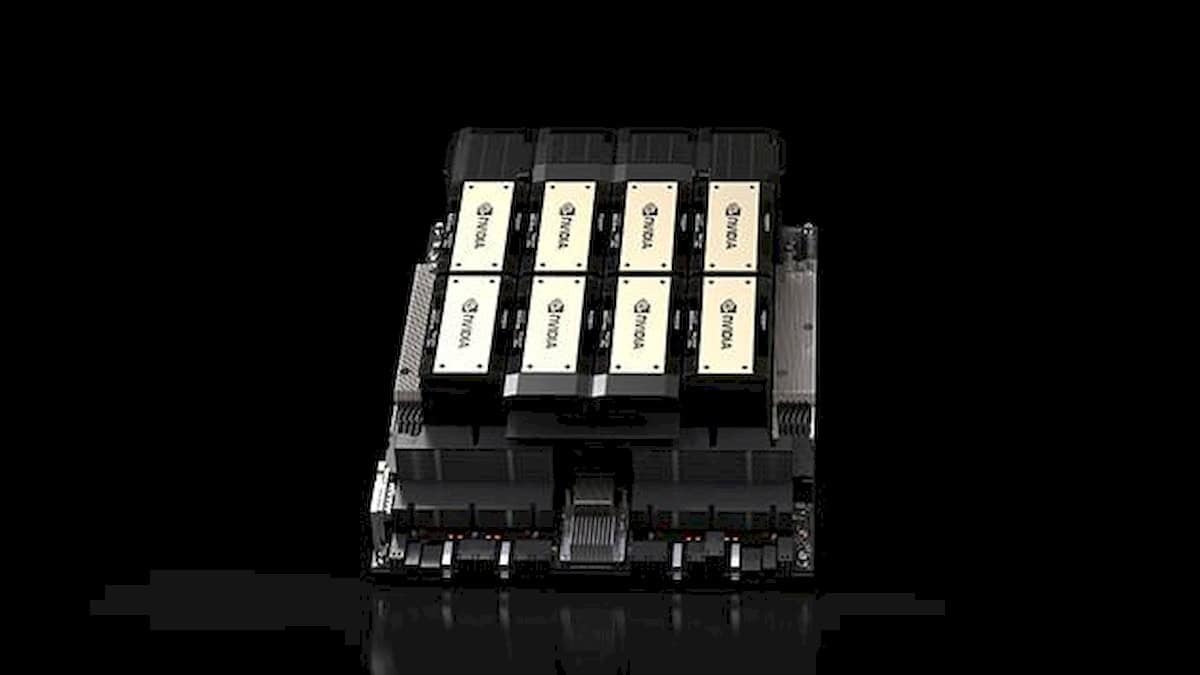

NVIDIA GH200 superchip will soon reinvent AI

NVIDIA's GH200 Grace Hopper superchip is in the works and is set to change how artificial intelligence (AI) and high-performance computing (HPC) workloads are run.

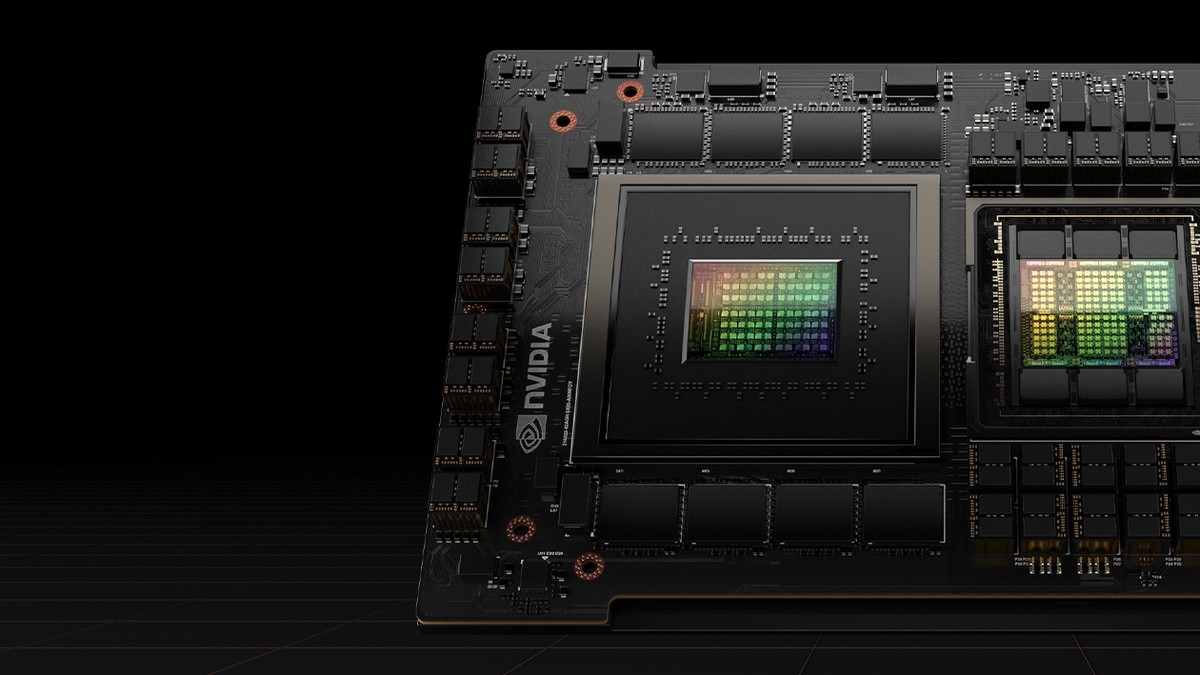

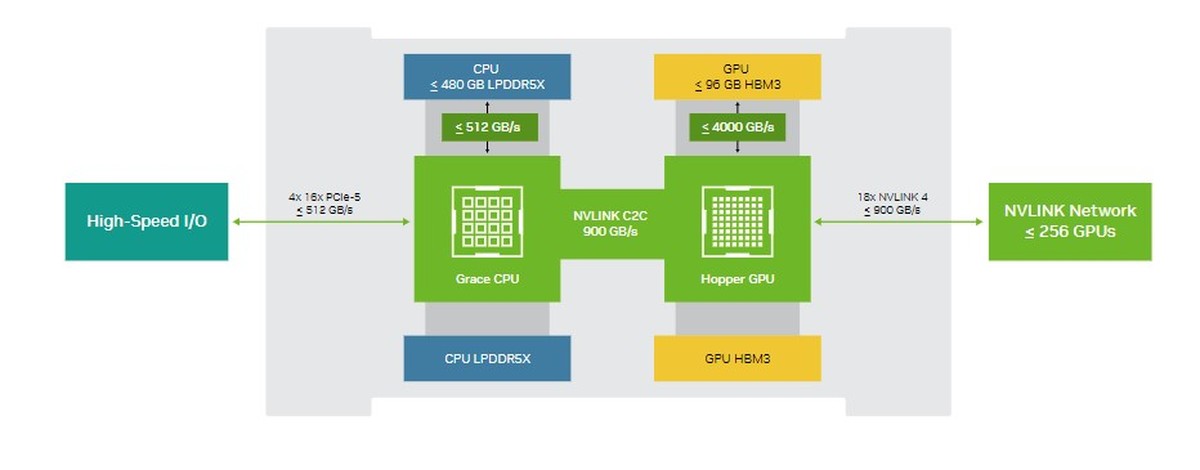

This chip, equipped with HBM3e memory, marks a significant step forward in AI capability. By pairing an Arm-based Grace CPU with a Hopper GPU in a single module, the GH200 targets demanding generative AI workloads.

NVIDIA CEO Jensen Huang has emphasized the GH200's potential to meet the rapidly growing demands of generative AI workloads. Its improved memory technology, higher bandwidth, and updated server design position the GH200 as a catalyst for AI innovation.

Huang sees the GH200 as a way to close the gap between fast-growing AI requirements and the computing resources needed to meet them.

NVIDIA GH200 superchip will include HBM3e memory

NVIDIA's recent announcement of an updated version of the GH200 superchip has drawn considerable interest in the tech community. The chip was unveiled at SIGGRAPH, where its enhanced features, particularly its use of HBM3e memory, took center stage.

The new memory is intended to substantially boost the GH200's performance by giving the GPU more memory capacity and bandwidth, which makes the chip a strong contender for generative AI tasks.

An ambitious name in the competitive HPC market

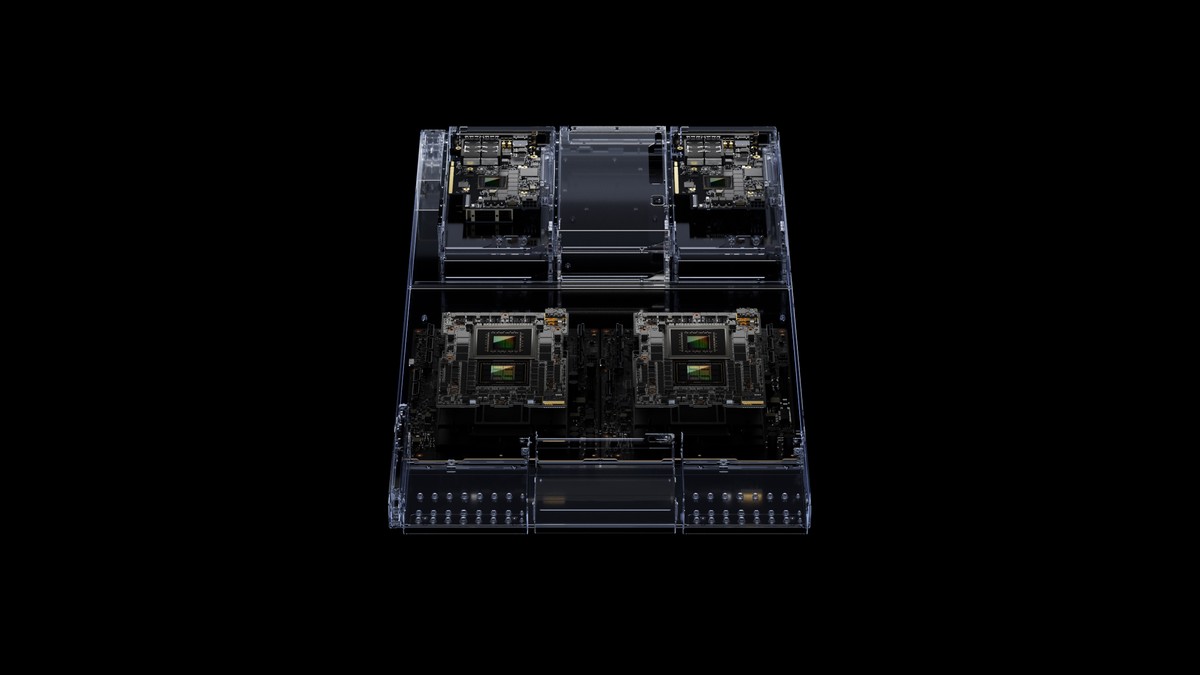

NVIDIA is positioning the GH200 to lead the competitive HPC market. By combining the strengths of its GPU and CPU components, the platform offers a single, integrated answer to the complex computing requirements of modern AI workloads.

This integrated design makes the GH200 a versatile platform for a wide range of computational challenges.

What is GH200 capable of?

The GH200 comes with an impressive set of specifications. On the CPU side, it has 72 Arm Neoverse V2 cores and up to 480GB of LPDDR5X memory with ECC. On the GPU side, it has 132 Streaming Multiprocessors (SMs) and 528 Tensor Cores, underlining the chip's strength in AI processing.

Notably, physical GPU memory grows from 96GB with HBM3 to 144GB in the version that uses HBM3e.
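The specifications above lend themselves to some quick arithmetic. The Python sketch below derives a few ratios from them. The `GH200Spec` class and its field names are hypothetical, and the sketch assumes (the article does not say so) that both memory variants share the same CPU core count, LPDDR5X capacity, SM count, and Tensor Core count, differing only in GPU memory.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GH200Spec:
    """Hypothetical container for the GH200 figures quoted in the article."""
    cpu_cores: int
    cpu_memory_gb: int   # LPDDR5X (ECC), the "up to" figure
    gpu_sms: int
    tensor_cores: int
    gpu_memory_gb: int   # physical HBM capacity

    def tensor_cores_per_sm(self) -> float:
        # Tensor Cores divided evenly across Streaming Multiprocessors
        return self.tensor_cores / self.gpu_sms

    def total_memory_gb(self) -> int:
        # Combined CPU (LPDDR5X) and GPU (HBM) memory on one superchip
        return self.cpu_memory_gb + self.gpu_memory_gb


# Assumption: only the GPU memory differs between the two variants.
hbm3 = GH200Spec(cpu_cores=72, cpu_memory_gb=480, gpu_sms=132,
                 tensor_cores=528, gpu_memory_gb=96)
hbm3e = GH200Spec(cpu_cores=72, cpu_memory_gb=480, gpu_sms=132,
                  tensor_cores=528, gpu_memory_gb=144)

print(hbm3.tensor_cores_per_sm())                        # 4.0
print(hbm3.total_memory_gb(), hbm3e.total_memory_gb())   # 576 624
print(hbm3e.gpu_memory_gb / hbm3.gpu_memory_gb)          # 1.5
```

Under those assumptions, the GH200 works out to four Tensor Cores per SM, and the HBM3e version offers 1.5x the GPU memory of the HBM3 version, or 624GB of combined CPU and GPU memory versus 576GB.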


Wait for it...

The GH200 with HBM3 memory is already in production, and NVIDIA is now preparing the version with HBM3e memory.

That version is scheduled for Q2 2024, reflecting NVIDIA's push to extend the limits of AI and HPC performance.

