NVIDIA H200 will elevate AI technologies to unimaginable heights

The NVIDIA H200 is a powerful new AI and high-performance computing (HPC) platform that offers significant advancements in performance, memory, and efficiency. Based on the NVIDIA Hopper architecture, the H200 is the first GPU to offer HBM3e memory, which gives it nearly double the memory capacity and 2.4 times the memory bandwidth of its predecessor, the NVIDIA A100.

This makes the H200 ideal for accelerating generative AI and large language models (LLMs), as well as scientific computing workloads.

What is the NVIDIA H200 set to enable? Ian Buck explains in the YouTube video on the NVIDIA channel below.

Breaking down the NVIDIA H200 features

The NVIDIA H200, built on the NVIDIA Hopper architecture, is the first GPU to offer 141 gigabytes of HBM3e memory, running at 4.8 terabytes per second. That is nearly double the capacity of the NVIDIA H100 Tensor Core GPU, with 1.4 times more memory bandwidth.
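To see where ratios like "nearly double" and "1.4X" come from, here is a small worked calculation. The H200 figures come from this article; the H100 SXM (80 GB, 3.35 TB/s) and A100 80GB SXM (80 GB, about 2.04 TB/s) figures are assumptions taken from NVIDIA's public spec sheets, and the `SPECS` table and `ratios` helper are hypothetical names used only for illustration.

```python
# Memory specs: capacity in GB, bandwidth in TB/s.
# H200 values are from the article; H100 SXM and A100 80GB SXM values are
# assumed from NVIDIA's public spec sheets (hypothetical table for illustration).
SPECS = {
    "H200": {"capacity_gb": 141, "bandwidth_tbs": 4.8},
    "H100 SXM": {"capacity_gb": 80, "bandwidth_tbs": 3.35},
    "A100 80GB SXM": {"capacity_gb": 80, "bandwidth_tbs": 2.039},
}


def ratios(new: str, old: str) -> tuple[float, float]:
    """Return (capacity ratio, bandwidth ratio) of GPU `new` relative to GPU `old`."""
    a, b = SPECS[new], SPECS[old]
    return (a["capacity_gb"] / b["capacity_gb"],
            a["bandwidth_tbs"] / b["bandwidth_tbs"])


for baseline in ("H100 SXM", "A100 80GB SXM"):
    cap, bw = ratios("H200", baseline)
    print(f"H200 vs {baseline}: {cap:.2f}x capacity, {bw:.2f}x bandwidth")
# Prints:
# H200 vs H100 SXM: 1.76x capacity, 1.43x bandwidth
# H200 vs A100 80GB SXM: 1.76x capacity, 2.35x bandwidth
```

Rounded to one decimal place, the bandwidth gains come out to roughly 1.4x over the H100 and 2.4x over the A100, while 1.76x capacity is what "nearly double" refers to.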

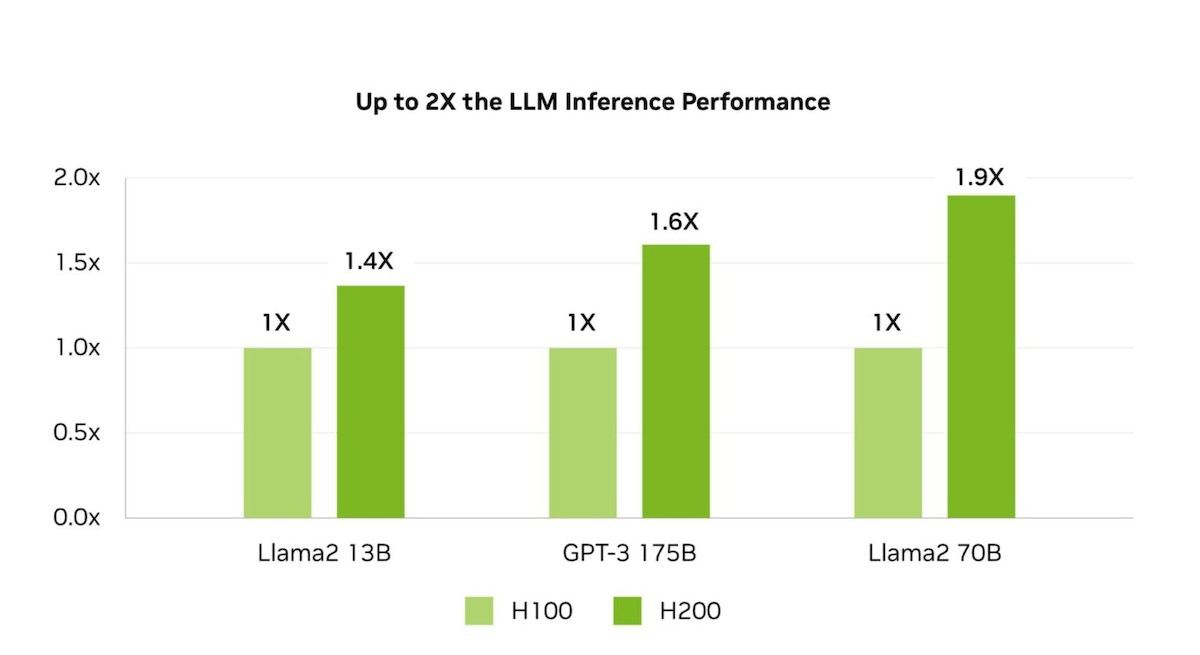

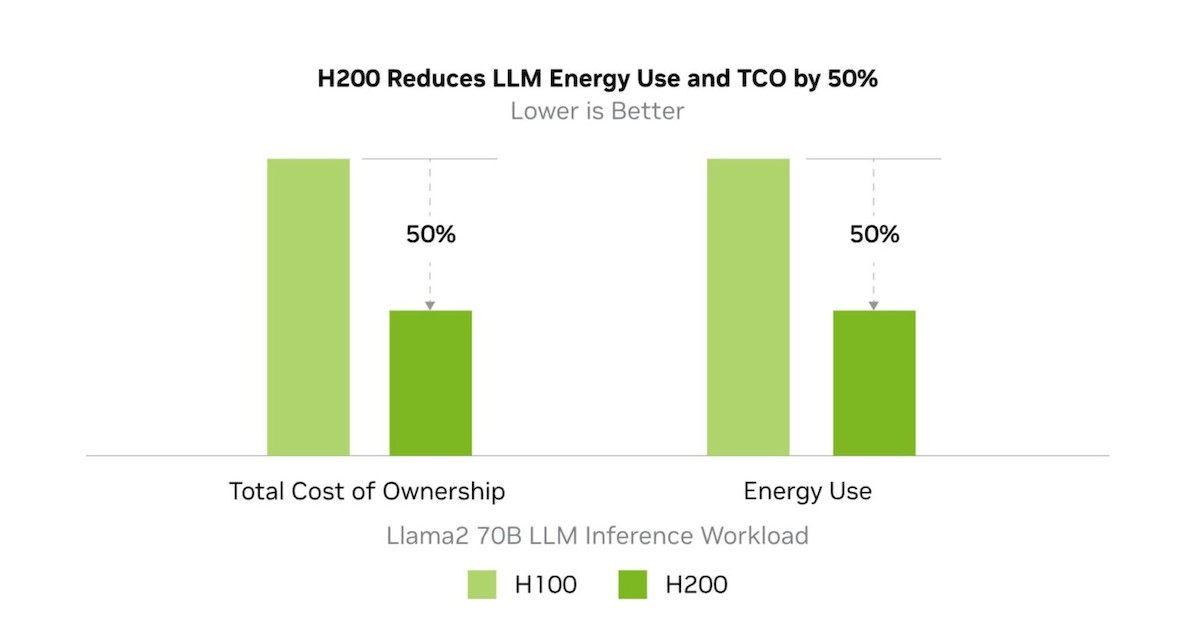

Businesses increasingly rely on LLMs for a wide range of inference workloads, and serving those models at scale calls for a dedicated AI inference accelerator. NVIDIA positions the H200 as offering the highest throughput at the lowest total cost of ownership (TCO), particularly when deployed for large user bases. According to NVIDIA, the H200 delivers up to twice the inference speed of the H100 on LLMs such as Llama 2.

The NVIDIA H200 is not just about memory size; it is also about how quickly and efficiently data can be moved. Memory bandwidth is crucial for memory-intensive workloads such as simulations, scientific research, and AI, because it determines how fast data reaches the GPU's compute units. NVIDIA says this faster data access lets the H200 deliver results up to 110 times faster than CPU-based systems on complex HPC tasks.
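A rough way to see why bandwidth matters: when a workload is memory-bound, such as generating LLM tokens one at a time at small batch sizes, each step has to stream roughly all of the model's weights from memory, so bandwidth caps how many steps can run per second. The sketch below assumes a hypothetical 70-billion-parameter model stored at 1 byte per parameter (FP8) and uses the H100 SXM bandwidth of 3.35 TB/s from NVIDIA's spec sheet as an assumption; `max_decode_steps_per_s` is a hypothetical helper, and the result is a back-of-the-envelope bound, not a benchmark.

```python
def max_decode_steps_per_s(params_billion: float, bytes_per_param: float,
                           bandwidth_tbs: float) -> float:
    """Upper bound on decode steps per second if every step reads all weights once.

    Assumes a purely memory-bound workload: ignores KV-cache reads, activation
    traffic, compute time, and on-chip caching (hypothetical helper).
    """
    weight_bytes = params_billion * 1e9 * bytes_per_param
    return bandwidth_tbs * 1e12 / weight_bytes


# Hypothetical model: 70B parameters stored in FP8 (1 byte per parameter).
for name, bw in (("H100 SXM", 3.35), ("H200", 4.8)):
    print(f"{name}: <= {max_decode_steps_per_s(70, 1, bw):.1f} steps/s")
# Prints:
# H100 SXM: <= 47.9 steps/s
# H200: <= 68.6 steps/s
```

In this simple model the ratio between the two bounds is just the bandwidth ratio (about 1.4x), so it does not by itself account for the up-to-2x inference figure NVIDIA quotes, which comes from NVIDIA's own benchmark conditions.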

The H200 also keeps the same power profile as the H100 while delivering significantly higher performance, so it does more work per watt. The result is a new generation of AI factories and supercomputing systems that are not only faster but also more energy-efficient.

Here are the key takeaways about the NVIDIA H200:

- Up to 141GB of HBM3e memory with 4.8TB/s bandwidth

- Up to 4x faster generative AI performance than the NVIDIA A100

- Up to 2x faster LLM inference performance than the NVIDIA H100 (on models such as Llama 2)

- Up to 110x faster scientific computing performance than CPUs

- Same power profile as the NVIDIA H100

Months to go

The NVIDIA H200 is expected to become available from global system manufacturers and cloud service providers starting in the second quarter of 2024. Amazon Web Services, Google Cloud, Microsoft Azure, and Oracle Cloud Infrastructure are set to be among the first cloud providers to offer H200-based instances.

The NVIDIA H200 is expected to have a transformative impact on AI. Its ability to handle massive datasets and accelerate AI model development and deployment will make it a valuable asset for businesses and research institutions alike.

Featured image credit: NVIDIA.
