Why you may want to enable Hardware Accelerated GPU Scheduling in Windows 10

Microsoft introduced a new graphics feature in Windows 10 version 2004; called Hardware Accelerated GPU Scheduling, it is designed to improve GPU scheduling and thus performance when running applications and games that use the graphics processing unit.

The new graphics feature has some caveats and limitations: it is only available in Windows 10 version 2004 or newer, requires a fairly recent GPU, and needs drivers that support the feature. The option becomes available only if the system meets all requirements.

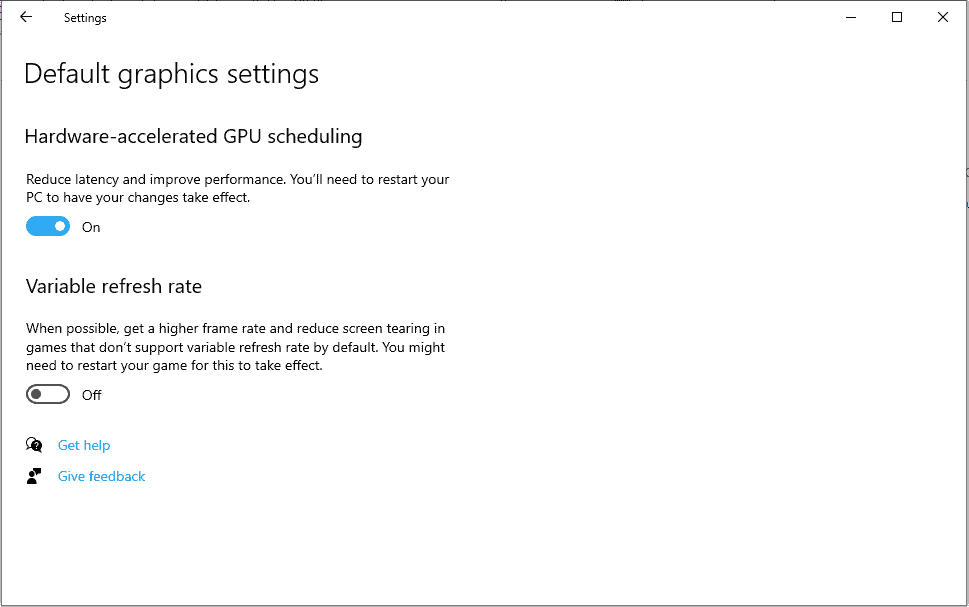

You may check the system's graphics settings in the following way to find out whether Hardware Accelerated GPU Scheduling is available on your device:

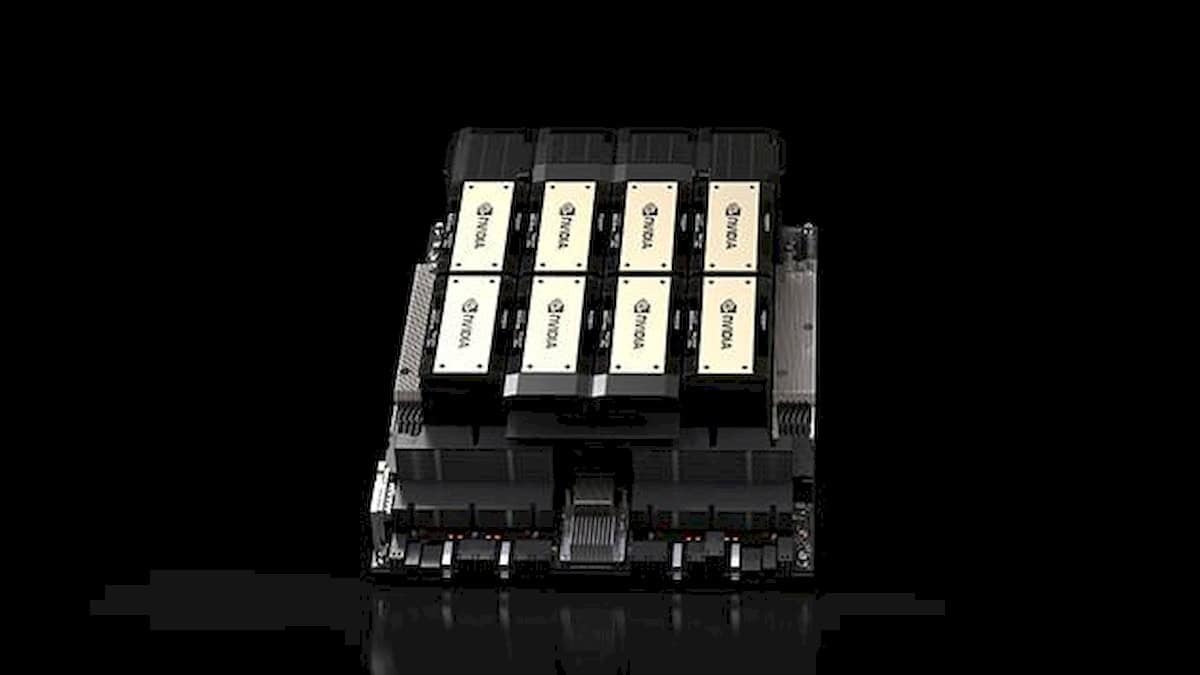

- Make sure you run graphics drivers that support the feature. For instance, Nvidia customers need GeForce driver 451.48 or newer, as the company introduced support for Hardware Accelerated GPU Scheduling in that version.

- Make sure you run Windows 10 version 2004 or newer, e.g. by opening Start, typing winver, and selecting the result.

- Open the Settings application on the Windows 10 system with the help of the shortcut Windows-I, or select Start > Settings.

- Go to System > Display > Graphics Settings.

- The Hardware-accelerated GPU scheduling option is displayed on the page that opens if both the GPU and the GPU driver support the feature.

- Use the switch to set the feature to On.
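The checks above boil down to a simple comparison. The following Python sketch is only an illustration: the function names are hypothetical, it assumes Windows 10 version 2004 corresponds to OS build 19041 (the build number winver shows), and it covers only the Nvidia minimum of 451.48 named above. Whether a given GPU qualifies is passed in as a flag, because no complete list of supported GPUs is given here.

```python
from __future__ import annotations

# Hypothetical helper encoding the requirements listed above.
# Assumption: Windows 10 version 2004 is OS build 19041; later releases have higher builds.
MIN_WINDOWS_BUILD = 19041
# From the article: Nvidia added support in GeForce driver 451.48.
MIN_NVIDIA_DRIVER = (451, 48)


def parse_driver_version(version: str) -> tuple[int, ...]:
    """Turn a GeForce-style version string such as '451.67' into (451, 67)."""
    return tuple(int(part) for part in version.split("."))


def hags_option_expected(os_build: int, nvidia_driver: str, gpu_supported: bool) -> bool:
    """True if the Settings toggle should appear, per the requirements above.

    gpu_supported is an input because this sketch has no list of supported GPUs.
    """
    return (
        os_build >= MIN_WINDOWS_BUILD
        and parse_driver_version(nvidia_driver) >= MIN_NVIDIA_DRIVER
        and gpu_supported
    )


print(hags_option_expected(19041, "451.67", gpu_supported=True))  # True
print(hags_option_expected(18363, "451.67", gpu_supported=True))  # False: build 18363 is version 1909
print(hags_option_expected(19041, "446.14", gpu_supported=True))  # False: driver older than 451.48
```

Tuples compare element by element in Python, so (451, 67) >= (451, 48) holds while (446, 14) does not.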

Microsoft reveals bits of the inner workings of Hardware Accelerated GPU Scheduling in a blog post on the Microsoft Dev blog. The company explains that the feature "is a significant and fundamental change to the driver model" and compares it to "rebuilding the foundation of a house while still living in it".

The company plans to monitor the performance of the feature and will continue to work on it.

Windows 10 users, especially those into gaming, may wonder whether it is worth enabling the feature now, or whether it is better to wait until it is more mature.

The German computer magazine PC Games Hardware ran the feature through a set of benchmarks on Nvidia hardware. The testers followed Nvidia's recommendation and picked one of the fastest video cards, an MSI GeForce RTX 2080 Ti Gaming Z, for the test (Nvidia revealed that the most powerful cards benefit the most from the feature).

Benchmarks were run on games such as Doom Eternal, Red Dead Redemption 2, and Star Wars Jedi: Fallen Order, with Hardware Accelerated GPU Scheduling set to on and off.

One of the core takeaways of the published benchmark results is that Hardware Accelerated GPU Scheduling improved performance in all tested cases. On average, the testers measured a gain of 1-2 frames per second when running the games at a resolution of 2560x1440.
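To put 1-2 frames per second into perspective, it helps to express the gain as a percentage and as frame time saved. The Python sketch below uses hypothetical baselines of 60 and 100 fps, since the article does not quote the absolute frame rates PCGH measured.

```python
def relative_gain_percent(fps_off: float, fps_on: float) -> float:
    """Percentage change in average frame rate when the feature is switched on."""
    return (fps_on - fps_off) / fps_off * 100.0


def frame_time_ms(fps: float) -> float:
    """Average time per frame in milliseconds."""
    return 1000.0 / fps


# Hypothetical baselines; PCGH's absolute frame rates are not quoted in the article.
for baseline in (60.0, 100.0):
    for gain in (1.0, 2.0):
        pct = relative_gain_percent(baseline, baseline + gain)
        saved = frame_time_ms(baseline) - frame_time_ms(baseline + gain)
        print(f"{baseline:.0f} -> {baseline + gain:.0f} fps: +{pct:.1f}% ({saved:.2f} ms less per frame)")
```

At 100 fps, a 2 fps gain is 2% and shortens each frame by about 0.2 ms, which is why small absolute gains can be hard to separate from run-to-run variation.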

It is unclear if the performance improvements will be less with less powerful hardware but Nvidia suggests that it may be the case.

Still, as a gamer, it may make sense to enable Hardware Accelerated GPU Scheduling provided that no drawbacks are observed on machines on which the feature is activated.

Now You: Which video card do you run on your devices?

Thank you so much for your information.

Good video on this subject; includes benchmarks:

Windows Hardware Accelerated GPU Scheduling Benchmarks (Frametimes & FPS)

https://www.youtube.com/watch?v=wlrWDb1pKXg

Thanks Yuliya. The end of that video pretty much sums up what the community at PCGH have likewise learned, which is that Hardware Accelerated GPU Scheduling is forward-looking tech that has little benefit to most users now.

…AND it causes some problems too; for example, Shadow of the Tomb Raider now crashes with the setting enabled on Nvidia driver 451.67.

This seems like such poor journalism. Literally, on the most powerful hardware, they got improvements of 1-2fps, which is well within the margin of error, and on weaker hardware there might not even be any difference. Not to mention that the test was done with only one hardware configuration. It should have tested multiple hardware.

@Anonymous

“should have tested multiple hardware?”

They did..

PCGH is a community. That PCGH article and test was just to get the ball rolling, with a request to their community to test it on their systems. The PCGH community has since tested this on various systems, and have overall not found much to get excited about yet.

So, given that you think that “this seems like such poor journalism” on the part of ghacks and PCGH, perhaps you simply need to examine the situation better.

Furthermore, the truth of this topic is newsworthy, mostly because many overzealous tech geeks have hyped this tech too much and spread a lot of misinformation.

That said, I thank ghacks and PCGH for looking into this in a rational way.

LOL, just tried it and it destroyed my performance… in The Division 2 I went from a constant 60fps in 4K ultra to 22-27 fps. Just rolled back (i9-9900K + 2080Ti). What the heck is that?!?!

@Oxee

“What the heck is that?”

It was a test you did, and it failed, so you rolled it back.

I see nothing funny or surprising about that, as Martin warned that this feature is new and may not work, yet may still be worth a try for some users.

What did you expect?

I am amazed that people actually use windows 10. I’m still on 7 and the next OS will be Linux. Can you relate?

Being that you’re “amazed that people actually use windows 10”, then that shows you’re likely akin to those who can’t “relate” to others who are different than themselves. Perhaps you suffer from some sort of OS xenophobia?

BTW, “amazed” is more or less a good thing. So if you want a stronger punch, next time I suggest you replace “amazed” with “shocked”, although “stupefied” may be more appropriate.

Regardless, I can relate to you, in at least that I used Windows 7 Pro until it was no longer officially supported, but I was on Windows 10 long before that on other PCs. Also, I have used various Linux distros as need be. I have no plans for what operating systems I will be using in the future, but I don’t expect much to change on these fronts.

That said, I have a solid history of successfully using what works best for me to simply get my work done and enjoy life, without having to hype my choices into some sort of high and mighty imperative.

Can you relate, yet?

…Okay??? No one asked, but good for you

“It is unclear if the performance improvements will be less with less powerful hardware but Nvidia suggests that it may be the case”

That would be pretty unfortunate, because if the improvement on high-performance hardware is just 1-2 FPS, it makes one think the feature is next to useless on weaker hardware.

@Martin or whomever

My system has this feature, but my card is lower-end, so I doubt I should have “Hardware Accelerated GPU Scheduling” enabled.

But what about that other option for “Variable refresh rate”?

I would, but Windows says my 2-year-old system isn’t ready for the update. It still didn’t get last year’s update either.

2 y.o.? Your system is obsolete, with the current M$ inOS you need to change your rig before it’s 6 m.o.!

I’m just curious whether this “feature” reduces the CPU/GPU load caused by dwm.exe while moving the mouse…

My Windows 10 Enterprise hasn’t decided to update to 2004 yet; it is currently on 1909. Anyone else not getting the option to update yet? I don’t care much, just curious.

I used that “target version” registry entry to stay on 1909, on Win 10 Pro.

This:

https://www.ghacks.net/2020/06/27/you-can-now-set-the-target-windows-10-release-in-professional-versions/

@Trey

Due to your PC’s configuration and make, I imagine there’s a whole host of reasons why Microsoft might delay 2004 for you.

For one, if you have disabled some Windows features with third-party software due to privacy concerns, then I imagine Microsoft may put you at the bottom of the update list for 2004, as they may not be able to detect all of your device’s configurations and such. Thus you may have to manually update to 2004?

As for me on Windows Pro, I have automatic updates on, and I have the recommended privacy settings turned on with O&O ShutUp10. I am still on 1909, with this message under my update settings:

“Feature update to Windows 10, version 2004. The Windows 10 May 2020 Update is on its way. Once it’s ready for your device, you’ll see the update available on this page.”

@Shake and Bake

Interesting and thanks for the info. I do have things tweaked with O&O and other similar apps.

I tried 2004 on an R5 2600 with an RX 5700, using the latest drivers and a 1440p 60 Hz monitor, and the highest resolution I could get was 1080p at 30 Hz, so I had to roll back to an image I had flashed before I tried this bug-ridden piece of crap called 2004.

Microsoft updates and upgrades are pure crap anymore, just the worst bug-ridden, virus-like mess they’ve ever released.

Does this feature work with the Standard driver, or do you have to use the unpopular DCH model? Also, 1-2 FPS is definitely within the margin of error, especially on a 2080Ti running over a hundred fps. BTW, if you’re looking for a better desktop experience, get a 144 Hz monitor and change your refresh rate from the standard 60 Hz.
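Whether a 1-2 fps difference is real or just noise depends on how much results vary from run to run. A minimal Python sketch, assuming you have recorded the average fps of several benchmark runs with the feature off and on: it computes Welch's t statistic with the standard `statistics` module. The run numbers are made up for illustration, and treating |t| below about 2 as "within noise" is a rough rule of thumb, not a formal significance test.

```python
from __future__ import annotations

import math
import statistics


def welch_t(off: list[float], on: list[float]) -> float:
    """Welch's t statistic for the difference in mean fps (on minus off)."""
    se = math.sqrt(statistics.variance(off) / len(off) + statistics.variance(on) / len(on))
    return (statistics.mean(on) - statistics.mean(off)) / se


# Illustrative numbers only, not measurements: average fps of five runs each.
runs_off = [118.0, 121.5, 119.2, 122.8, 120.1]
runs_on = [120.4, 122.9, 119.8, 123.5, 121.7]

diff = statistics.mean(runs_on) - statistics.mean(runs_off)
t = welch_t(runs_off, runs_on)
print(f"mean difference: {diff:+.2f} fps, Welch t = {t:.2f}")
# Rough rule of thumb only: |t| below about 2 suggests the gap is within run-to-run noise.
print("within run-to-run noise" if abs(t) < 2 else "possibly a real difference")
```

With these made-up runs the "on" average is about 1.3 fps higher, yet t stays around 1.2, so a spread of a couple of fps between runs is enough to swallow the gain.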

The new feature adds some latency, with noticeable stuttering and pausing. I would not enable it.

@ Duke Thrust:

Please learn how to use a pastebin.

What if I have the latest Windows 10 2004 19041.330 build and yet I don’t have this option at all?

If I’m understanding Steve correctly, it’s still being randomly activated in the Insider Fast Ring.

From the link above:

https://devblogs.microsoft.com/directx/hardware-accelerated-gpu-scheduling/

Is your video card supported, and have you installed drivers that add support for it as well? It is possible that the feature is being rolled out over time.

I have a GTX 1650 with the latest drivers installed, but the option doesn’t appear. I’m starting to believe the GPU doesn’t support it.

I also have a GTX 1650 and I have it (just found out). Maybe you’ll get it later.

Not seeing much info anywhere on which GPUs are supported.

Nvidia supports Pascal and higher (10-series and onwards) GPUs.

Scheduling works in 3 ways: RS

Smart compute shaders with ML optimising sort order:

Sort = Variable Storage (4Kb to 64Kb & up to 4Mb, AMD having a 64Bit data RAM per SiMD line)

Being ideal for a single unit SimV SimD/T & data collation & data optimisation,

With memory Action & Location list (Variable table),

Time to compute estimator & Prefetch activity parser & optimiser with sorted workload time list..

Workloads are then sorted into estimated spaces in the Compute load list & RUN.

CPU & GPU & FPU Anticipatory scheduler with ML optimising sort order:

Sort = Variable Storage (4Kb to 64Kb & up to 4Mb, AMD having a 64Bit data RAM per SiMD line)

Being ideal for a single unit SimV SimD/T & data collation & data optimisation,

With memory Action & Location list (Variable table),

Time to compute estimator & Prefetch activity parser & optimiser with sorted workload time list..

Workloads are then sorted into estimated spaces in the Compute load list & RUN.

Open CL, SysCL cache streamlined fragment optimiser with ML optimising sort order:

Sort = Variable Storage (4Kb to 64Kb & up to 4Mb, AMD having a 64Bit data RAM per SiMD line)

Being ideal for a single unit SimV SimD/T & data collation & data optimisation,

With memory Action & Location list (Variable table),

Time to compute estimator & Prefetch activity parser & optimiser with sorted workload time list..

Workloads are then sorted into estimated spaces in the Compute load list & RUN.

(c) Rupert S
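The "sorted workload time list" described above resembles classic shortest-estimated-job-first ordering. The Python sketch below shows that standard technique, not the commenter's design; the `Workload` type, the workload names, and their time estimates are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    est_ms: float  # estimated time to compute, in milliseconds (hypothetical values)


def shortest_first(workloads: list[Workload]) -> list[Workload]:
    """Order workloads by estimated compute time, shortest first."""
    return sorted(workloads, key=lambda w: w.est_ms)


def average_wait_ms(order: list[Workload]) -> float:
    """Mean time a workload waits before starting when run back to back in this order."""
    elapsed = 0.0
    total_wait = 0.0
    for w in order:
        total_wait += elapsed
        elapsed += w.est_ms
    return total_wait / len(order)


jobs = [Workload("shadow pass", 4.0), Workload("ray query", 1.5), Workload("post fx", 0.5)]
ordered = shortest_first(jobs)
print([w.name for w in ordered])
print(f"average wait: {average_wait_ms(jobs):.2f} ms as submitted, {average_wait_ms(ordered):.2f} ms sorted")
```

With these made-up estimates, the average wait drops from about 3.17 ms in submission order to about 0.83 ms when sorted. The usual trade-off is that long jobs can be delayed indefinitely if short ones keep arriving.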

ORO-DL : Objective Raytrace Optimised Dynamic Load & Machine Learning : RS

Simply places raytracing in the potent hands of powerful CPU & GPU features, from the 280X & GTX 1050 to newer hardware, while reducing strain on overworked GPU/CPU combinations.

Potentially improving the PS4+, Xbox One+ & Windows- and Linux-based software such as Firefox and Chrome.

Creating potential for SiMD & Vectored AVX/FPU Solutions with intrinsic ML.

This solution is also viable for complex tasks such as:

3D features, 3D Sound & processing strategy.

Networking, video & other tasks you can vectorise:

(Plan, map, work out, algebra, maths, sort & compare, examine & compute/optimise/anticipate)

(Machine Learning needs strategy)

Primary Resources of Objective Raytrace:

Resource assets: CPU & GPU FPUs at 8-bit, 16-bit, 32-bit precision and up, to capacity,

Mathematical Raytrace with a priority of speed & beauty first,

HDR second (can be virtual, dithered to 10-bit for example), AVX & SiMD

(Obviously GPU SiMD units are important for scene rendering, mesh & VRS, so using the CPU for both FPU & less utilised AVX/SSSE2 work is advisable)

Block render is the proposed format. The strategy optimises load times with reduced IRQ & DMA access times.
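Dithering to 10-bit, as mentioned above, can be illustrated with a generic random-dither quantizer. This Python sketch assumes a normalized input in [0, 1] and uniform dither noise; the function name is hypothetical and this is not the commenter's implementation.

```python
from __future__ import annotations

import random


def quantize_10bit(x: float, rng: random.Random | None = None) -> int:
    """Map x in [0, 1] to a 10-bit code (0..1023).

    Without rng the value is simply rounded; with rng, uniform noise in [0, 1)
    is added before flooring, so the expected code equals x * 1023.
    """
    scaled = min(max(x, 0.0), 1.0) * 1023
    if rng is None:
        return round(scaled)
    return min(int(scaled + rng.random()), 1023)


rng = random.Random(0)  # fixed seed so the example is repeatable
value = 511.25 / 1023   # a quarter of the way from code 511 to code 512
samples = [quantize_10bit(value, rng) for _ in range(100_000)]
print(quantize_10bit(value), sorted(set(samples)), sum(samples) / len(samples))
```

Without dither, this value always rounds to code 511; with dither, it lands on 511 or 512 so that the average over many samples stays close to 511.25, trading visible banding for fine noise.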

Reducing RAM fragmentation & increasing performance of DMA transferred work loads.

Block Render DMA Load; OptimusList:

64KB up to 64MB block DMA requested to the float buffer in the GPU for use in the vertex pipeline.

Under the proposal, the game's dynamic stack renders blocks, in development testing, that fit within the requirements of the game engine.

Priority list DMA buffer: 4MB, 16MB, 32MB, 64MB

The total block of ray-traced content & audio, haptic, delusional & dreamy simulated SiMD shader content should fit within the recommended pre-render frame limit of 3 to 7 frames.

1 to 7 frames are available, ideally between 3 & 5 frames, to avoid DMA, RAM & cache thrashing and data load.
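One possible reading of the block sizes and frame limits above, sketched in Python: the 4/16/32/64 MB tiers, the 64 KB to 64 MB block range and the 3 to 5 frame window come from the comment, while the rule "use the smallest tier that fits" and the function names are hypothetical assumptions.

```python
BUFFER_TIERS_MB = (4, 16, 32, 64)           # "priority list" sizes from the comment
MIN_BLOCK_KB, MAX_BLOCK_KB = 64, 64 * 1024  # 64 KB up to 64 MB blocks


def pick_buffer_mb(block_kb: int) -> int:
    """Hypothetical rule: use the smallest buffer tier that can hold the block."""
    if not MIN_BLOCK_KB <= block_kb <= MAX_BLOCK_KB:
        raise ValueError(f"{block_kb} KB is outside the 64 KB to 64 MB block range")
    return next(tier for tier in BUFFER_TIERS_MB if block_kb <= tier * 1024)


def clamp_prerender_frames(requested: int, low: int = 3, high: int = 5) -> int:
    """Keep the pre-rendered frame count in the suggested 3 to 5 frame window."""
    return max(low, min(high, requested))


print(pick_buffer_mb(512), pick_buffer_mb(20 * 1024), clamp_prerender_frames(7))  # 4 32 5
```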

As observed in earlier periods such as the Amiga era, the CPU's observable vector function is not so great for texturing; however, advancements and necessity allow this.

SiMD Shader emulation allows all supported potential and in the case of some GPU..AVX2, AVX 256/512 & dynamic cull…

The potency is limitless especially with Dynamic shared AMD SVM,FP4/8/DOT Optimised stack.

Background content & scenes can be pre-rendered or rendered dynamically (especially small details),

in terms of tessellation, RayTrace & other vital SiMD vector computation, without affecting the main scene being rendered directly in the GPU,

only enhancing the GPU's & CPU's potential to fully realise the scenes.

Fast Vector Cache DMA.

So what is the core logic?

CPU Pre-frame RayTrace Mode is where you render the scene details:

Plan to use 50% of the processor pre-frame, timed post-boot & in Optimise Mode :RS :

50% can be dynamic content fusion.

Integer (for up to 64Bit, or virtual float 32Bit.32Bit; there is lots of integer capacity on the CPU, so never underestimate this), vector, AVX, SiMD & FPU processed logic ML
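The "virtual float 32Bit.32Bit" idea corresponds to Q32.32 fixed-point arithmetic: a real value is stored as a 64-bit integer scaled by 2^32, so addition is plain integer addition and multiplication needs a shift. A minimal Python sketch, assuming that interpretation; Python integers do not overflow, so the 64-bit range limit is not modeled here.

```python
FRAC_BITS = 32
ONE = 1 << FRAC_BITS  # the value 1.0 in Q32.32


def to_fixed(x: float) -> int:
    """Encode a float as a Q32.32 integer (nearest representable value)."""
    return round(x * ONE)


def to_float(q: int) -> float:
    """Decode a Q32.32 integer back to a float."""
    return q / ONE


def fixed_mul(a: int, b: int) -> int:
    """Multiply two Q32.32 values.

    The raw product has 64 fractional bits; shifting right by 32 brings it back
    to 32, rounding toward negative infinity (Python's >> on negative ints).
    """
    return (a * b) >> FRAC_BITS


a, b = to_fixed(1.5), to_fixed(-2.25)
print(to_float(a + b), to_float(fixed_mul(a, b)))  # -0.75 -3.375
```

In a language with fixed-width integers, the raw product of two Q32.32 values needs a 128-bit intermediate before the shift.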

The majority of the RayTrace the CPU does can be static/slow-dynamic & pre-planned content.

(Pre-planned? 30 seconds of forward play on tracks & in scene)

Content with static lights & ordered/planned shift does not have to be 100% processed in the GPU.

To be clear, CPU/GPU planned content can be transferred as tessellated 3D polygons or as pre-optimised, lower-resolution float maths & shaders.

Potential uses include:

3D VR Live Streaming & movies : RS

With logical arithmetic & Machine learning optimisations customised for speed & performance & obviously with GPU also.

We can do estimates of the room size and the dimensions and shape of all streaming performers & provide 3D VR for all video rooms in HDR 3DVR..

The potential code must do the scene estimate first to calculate the quick data in later frames.

Later in the scene, only variables from object motion & a full 360-degree spin would do most of the differentiation we need for our works of action & motion in 3D render.

The potential is real: when we have real objectives, dimensions & objects, we have real 3D.

The solution is the mathematics of logic.

3D VR Haptic & learn

Conceptually the relevance of mapping haptic frequency response is the same parameter as in ear representative 3D sound.

For a start the concept of an entirely 3D environment does take the concept of 2D rendering the 3D world & play with your mind.

Substantially deep vibration is conceptually higher & intense pulse is thus deeper,

However the concept is also related to the hardness of earth & sky or skin.

Ear frequency response mapping is a reflection of an infrared diode receptor & infrasound harmonic 3D interpretation, Such as Sonar & Radar.

(c)RS

All this can be ours : Witcher 3 Example Video : https://www.youtube.com/watch?v=Mjq-ZYK7oJ8

https://science.n-helix.com/2019/06/vulkan-stack.html

https://science.n-helix.com/2014/08/turning-classic-film-into-3d-footage-crs.html

https://science.n-helix.com/2018/01/integer-floats-with-remainder-theory.html

https://science.n-helix.com/2020/01/float-hlsl-spir-v-compiler-role.html

https://science.n-helix.com/2020/02/fpu-double-precision.html

Raytracing potent compute research:

https://bitshifter.github.io/2018/06/04/simd-path-tracing/

Realtime Ray Tracing on current CPU Architectures : https://pdfs.semanticscholar.org/7449/2c2adb30f1ea25eb374839f3f64f9a32b6c7.pdf

RayTracing Vector Cell : https://www.sci.utah.edu/publications/benthin06/cell.pdf

https://research.dreamworks.com/wp-content/uploads/2018/07/Vectorized_Production_Path_Tracing_DWA_2017.pdf

https://aras-p.info/blog/2018/04/10/Daily-Pathtracer-Part-7-Initial-SIMD/

https://aras-p.info/blog/2018/11/16/Pathtracer-17-WebAssembly/

Demo WebAssem: NoSiMD: SiMD & AVX Proof of importance

https://aras-p.info/files/toypathtracer/

(c)RS

(c)Rupert S https://science.n-helix.com

Update confirmed:

Nvidia even ray-traced on the 980, in Vulkan! Works on AMD, Qualcomm, Android, Nvidia and PowerVR.

The potential exists for all,

Powerful CPUs & GPUs make all possible #TraceThatCompute2020.

Felt like I just read a Shakespeare novel.

@Duke Thrust

Thanks for that extensive comment, but the dolts here will never read it, so I summarized it:

Search Google and Wikipedia

lmao

This feature sounds nice in theory, but it’s still rather buggy, particularly when it comes to video playback: BSODs, TDRs and GPU process crashes in browsers. So it’s best to enable it with caution.

I tried playing a couple of games with it on and off and didn’t notice any performance changes on my setup; all differences were within the margin of error.

RX 580 here, also running Windows 10 2004, and I still don’t have that option. Hopefully AMD users will get it in a few days.

It will need to be a card that is capable of RTX rendering.

Nope. It works on GTX 10 series cards, and on AMD cards provided they have the latest drivers (the very latest, i.e. the third version of AMD’s recent driver, which they had to hotfix twice, lol).

I don’t have Windows 10 2004 yet, but I’ve still set some applications, including Firefox, to high performance in the Windows graphics performance preferences, which gives Firefox a significant speed gain on my small Asus laptop with Intel(R) HD Graphics.

How do you have this if you do not have Windows 10 2004? I thought they changed the WDDM model in Windows 10 2004 to add this feature.

@Raj

pat’s comment is deceptive and rather off-topic, as he hasn’t activated Hardware Accelerated GPU Scheduling with that.

Raj: type “graphics settings” in the search box and you can add a list of apps to run with high graphics performance.