Princeton Student Develops GPTZero, Software to Detect Plagiarism by AI Language Model ChatGPT

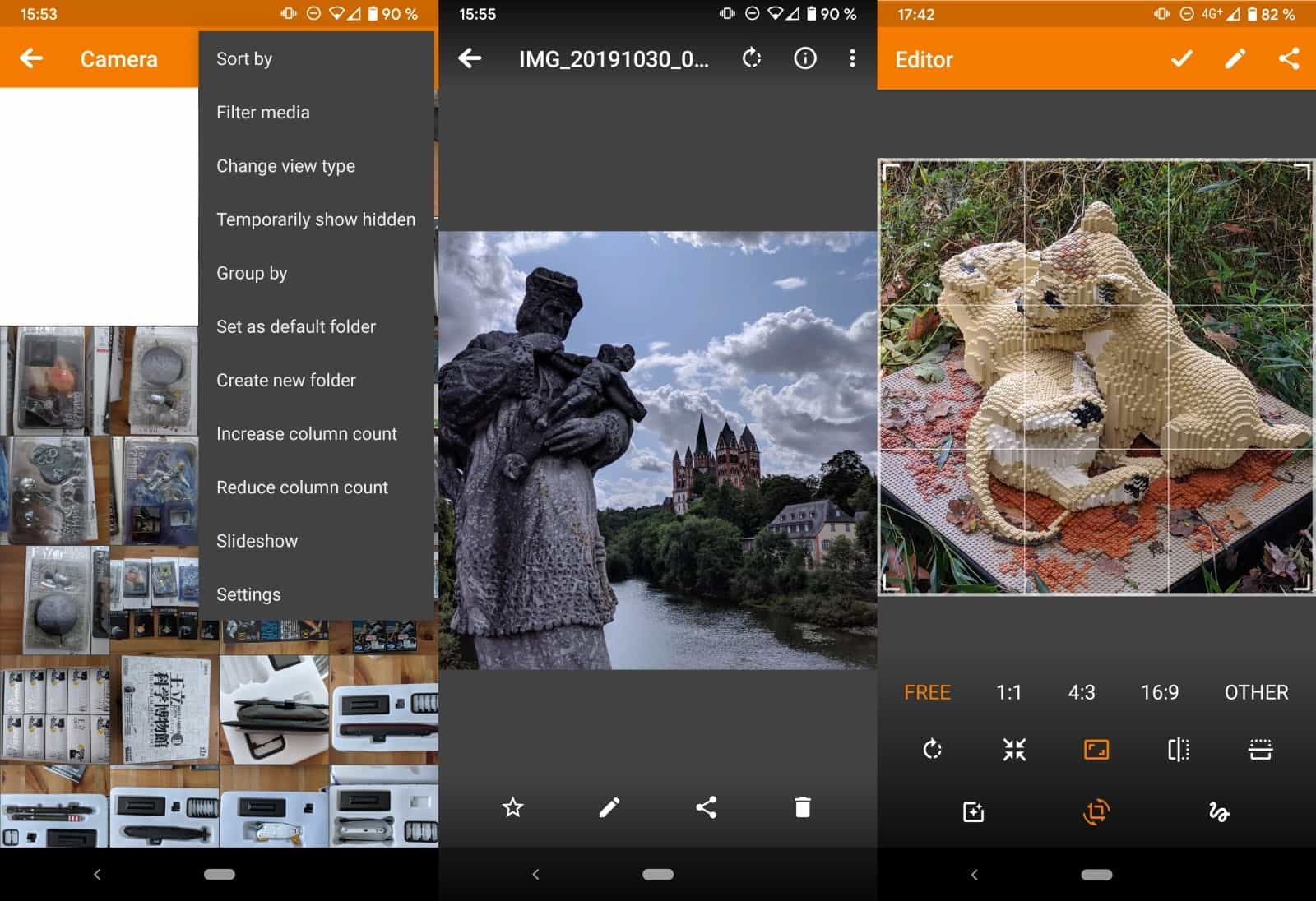

When Edward Tian, a computer science concentrator writing his thesis on artificial intelligence (AI) detection, first discovered ChatGPT, a new chatbot program, he asked it to generate rap lyrics. Over winter break, Tian spent several days in a Toronto coffee shop coding GPTZero, a program designed to identify writing generated by AI.

On January 2, Tian shared the beta version of GPTZero on Twitter. Since then, his tweet has garnered over 7 million views, and the software has been downloaded by people in 40 states and 30 countries, according to Tian. His product has also been covered by major news outlets, including the New York Times, the Washington Post, and the Wall Street Journal. Tian recently spoke with the 'Prince' about the future of GPTZero and his plans for the software.

‘I had a bunch of free time over break,’ he said in an interview. ‘I was like, maybe I’ll just code this out, so everybody can use it.’

OpenAI, a prominent AI research company founded seven years ago, created ChatGPT, a chatbot that can hold conversations with users and produce written content in various styles in response to plain-English prompts. ChatGPT's remarkable accuracy and potential applications have generated significant interest and debate among experts and the public alike. For Tian, this debate sparked an interest in what ChatGPT's exceptional writing abilities could mean for academic writing and plagiarism.

‘The last few weeks, the hype around ChatGPT has been so crazy. That’s what’s got me thinking about the impact towards teachers and schools.’

Because his thesis already dealt with AI detection, Tian had spent time thinking about the technology and already had some of that work saved on his laptop.

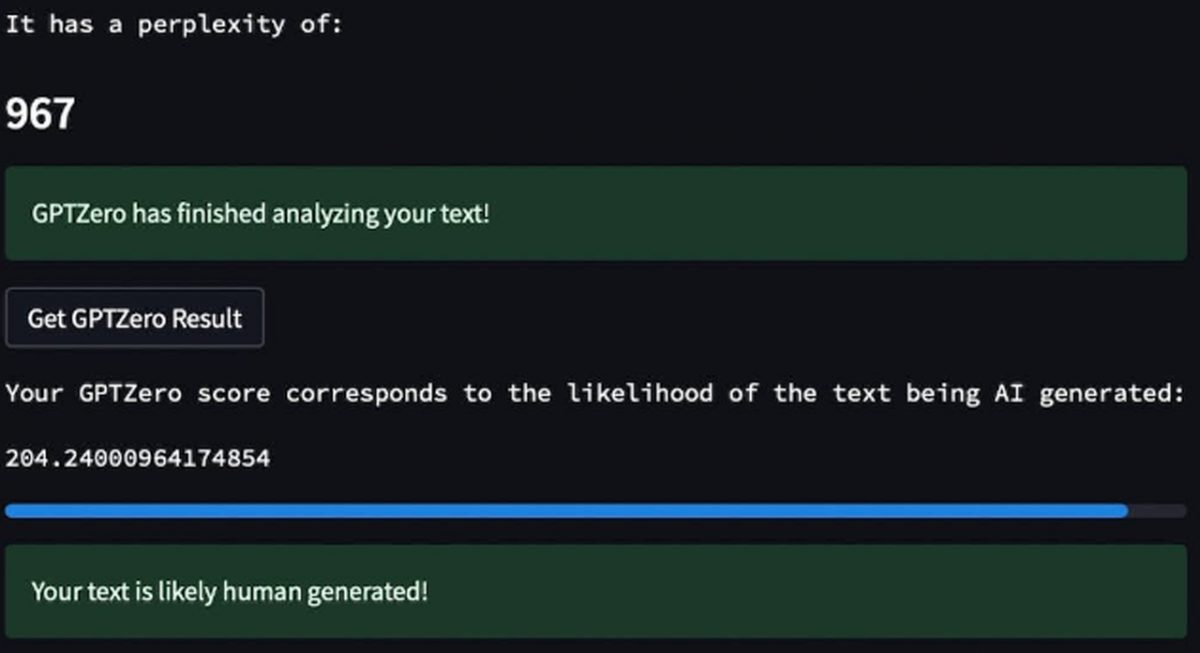

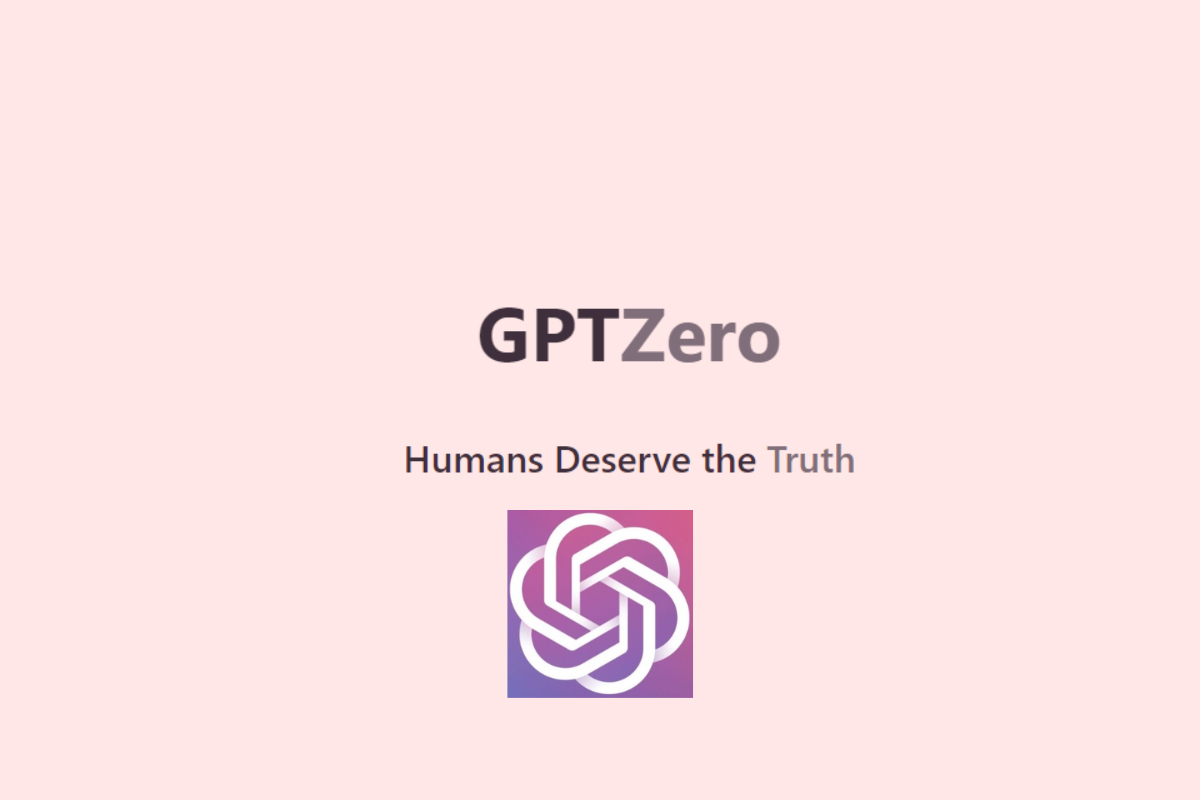

GPTZero uses two metrics to estimate the likelihood that a piece of text was written by AI: perplexity and burstiness. Perplexity measures how random, or unpredictable, the choice and arrangement of words within a sentence are, while burstiness compares perplexity across sentences. Because ChatGPT works by predicting the most probable next word, the more random the sentence construction, both within and across sentences, the more likely it is that the text was written by a human.
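The two metrics can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not GPTZero's actual code: it assumes a language model has already assigned a probability to every token of every sentence (the numbers below are made up), computes each sentence's perplexity as the exponential of its mean negative log-probability, and uses the standard deviation of those sentence perplexities as a stand-in for burstiness, since the article does not give GPTZero's exact formula.

```python
import math
from statistics import pstdev


def sentence_perplexity(token_probs):
    """Perplexity of one sentence, given the probability a language model
    assigned to each of its tokens: exp of the mean negative log-probability.
    Lower values mean the sentence was more predictable to the model."""
    if not token_probs:
        raise ValueError("sentence has no tokens")
    mean_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(mean_nll)


def burstiness(sentences):
    """Spread of perplexity across sentences (population standard deviation).
    Assumption: this is one plausible proxy; GPTZero's own formula may differ."""
    return pstdev(sentence_perplexity(s) for s in sentences)


# Hypothetical per-token probabilities; a real detector would obtain these
# by scoring the text with a language model.
uniform_text = [[0.5, 0.5, 0.5], [0.5, 0.5, 0.5]]  # every sentence equally predictable
varied_text = [[0.5, 0.5], [0.1, 0.2, 0.05]]       # predictability varies by sentence

for name, text in [("uniform", uniform_text), ("varied", varied_text)]:
    ppls = [round(sentence_perplexity(s), 2) for s in text]
    print(name, ppls, round(burstiness(text), 2))
```

Every sentence in the uniform text has a perplexity of 2, so its burstiness is 0. The varied text mixes a sentence with perplexity 2 and one with perplexity 10, giving a burstiness of 4, which matches the intuition that human writing is less evenly predictable.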

Jennifer Rexford, Chair of the Department of Computer Science, praised GPTZero in a statement to the 'Prince': ‘Tian’s innovative GPTZero application is a wonderful example of Princeton students engaging deeply with both the technical and the social implications of the rapid developments in artificial intelligence.’

Despite receiving multiple inquiries from venture capitalists, Tian has chosen not to sell his software and is instead focusing on improving it. To that end, he has enlisted several recent Princeton alumni with whom he has collaborated before.

On January 15, the group introduced GPTZeroX, which adds several new features, including the ability to highlight specific phrases or sentences that the program deems potentially AI-generated. In their GPTZero Substack, they hinted at ambitious plans for the software's future.

‘GPTZero may have started out small, but our plans are certainly not.’
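Sentence-level highlighting of the kind GPTZeroX offers could, in principle, build on the same perplexity measure. The sketch below is hypothetical: the function names, the per-token probabilities, and the cutoff of 5.0 are illustrative assumptions, not GPTZeroX's method. It flags sentences whose perplexity falls below the cutoff, on the reasoning that very predictable text is more likely to be machine-written.

```python
import math


def sentence_perplexity(token_probs):
    """exp of the mean negative log-probability of a sentence's tokens."""
    return math.exp(-sum(math.log(p) for p in token_probs) / len(token_probs))


def flag_sentences(sentences, threshold=5.0):
    """Return the indices of sentences whose perplexity is below `threshold`.

    Hypothetical rule: low perplexity means highly predictable text, which is
    treated here as possibly AI-generated. The default threshold is an
    illustrative assumption, not a calibrated value."""
    return [
        i
        for i, token_probs in enumerate(sentences)
        if sentence_perplexity(token_probs) < threshold
    ]


# Made-up per-token probabilities for three sentences.
example = [[0.5, 0.5, 0.5], [0.1, 0.2, 0.05], [0.4, 0.6]]
print(flag_sentences(example))
```

With this sample data the first and third sentences (perplexities of about 2.0 and 2.04) are flagged, while the second (perplexity 10) is not. In practice any cutoff would need to be calibrated on labeled human and AI writing.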

Tian said the team is currently working on identifying implicit bias in AI-generated text. ‘Is there something implicit in machine-written articles that human-written articles do not have? We think that there probably is.’

The debut of GPTZero coincided with widespread concern that students might use ChatGPT to cheat on essays, and about the effect it might have on conventional writing assignments. In response, school districts nationwide have restricted access to ChatGPT on school networks and devices. Despite these concerns, Tian is not opposed to advances in AI.

‘Everyone should use these new technologies. But it’s important that they’re not misused.’

Tian explained that his motivation for developing GPTZero comes from his appreciation for the artistry of writing. ‘There should be aspects of human writing that machines can never co-opt,’ he said.

The problem with tools that report their results as probabilities is that they never conclude with certainty.

We are already in an era where certitudes are often built on probabilities: high versus low in the best case, 51% versus 49% in the worst. It's easy to imagine how many of us will treat a probability as if it were evidence.

IMO the problem is the same for AI as for AI detectors: doubt. Doubt by itself isn't a flaw, but it becomes one because human nature dislikes doubt and worships certitude. Except scientists.

The mental virus is spreading in a world already submerged in news dismissed as fake when it might be true, news accepted as true when it might be fake, and conspiracy theories in place of awareness and doubt. Bringing AI to the masses won't help people think carefully before taking in information and accepting it as sound. After all, not everyone can afford a Ferrari, but anyone can afford to be the big shot who knows what others don't: power, free power. That's what social websites are already all about. No land on the horizon, and the storm is just starting.

100% with you, Tom. “Certitudes” are a trap that too many people fall into and then build their worldview on. This only leads to various forms of tribalism, divisiveness, polarization and conflict.

Being well-educated (not necessarily via mainstream schools), well-read, open minded and intellectually vigilant is the only way to even have a chance to navigate in today’s online and media world. Not an easy job… but I believe critical thinking skills are the best defence.

So here’s a scary concept: Train AI to take into account any AI detecting algorithms…

For example, set up a procedure where ChatGPT’s response to someone’s query is first routed through GPTZero to measure perplexity and burstiness. Then train ChatGPT to modify its initial response so that its perplexity and burstiness make it less likely to be detected as AI-written. Might this lead to “stealth” AI? At the very least it might lead to an arms race of sorts. :(