Microsoft Bing AI has been proven wrong

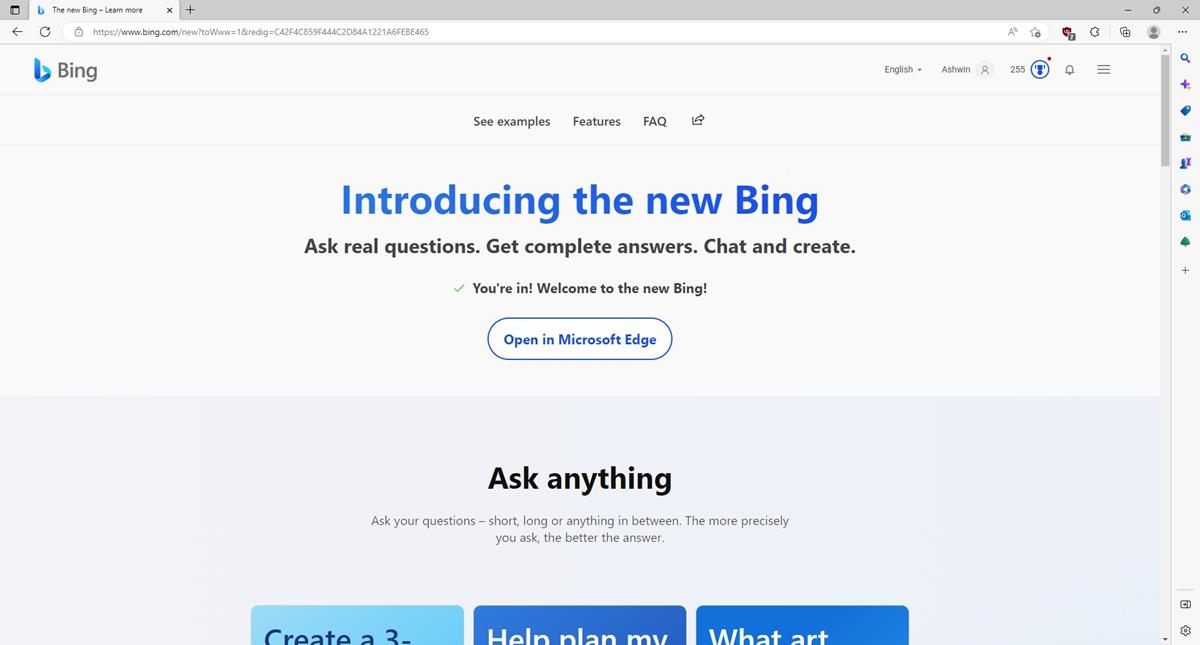

Both Microsoft and Google held presentations of artificial intelligence products last week. Microsoft presented integration of AI in the company's Bing search engine and in Microsoft Edge on Tuesday of last week.

Google revealed Bard, a language model designed for dialogue, the next day. The two events were received very differently. Microsoft awed much of the press and many users with its careful presentation of new features and capabilities. Google, on the other hand, received lots of bad press, especially for not revealing a final product, but also for an error in one of the answers the AI gave.

Now that the dust has settled, it has become clear that Microsoft's AI is not infallible either.

Tip: check out Ashwin's first impressions of the Bing enhanced with AI.

The errors of Bing AI

Several researchers, including Dmitri Brereton, highlighted errors in answers produced by Microsoft's artificial intelligence. It should be noted that some of the answers did not contain errors.

The pro and con list of the Bissell Pet Hair Eraser Handheld Vacuum made it sound like a bad product. Bing cited limited suction power, a short cord and noise as the main cons. Brereton looked up the source article and the product itself, and concluded that the source did not contain the negative information about the product that Bing cited.

Brereton spotted another error when Bing's AI was tasked with writing a 5-day trip itinerary for Mexico City. Some of the night clubs that Bing suggested appear to be less popular than the AI made them out to be. Some descriptions were not accurate, and in one instance, Bing's AI forgot to mention that it was recommending a gay bar.

Brereton also found issues with Microsoft's demonstration of getting the AI to generate a summary of Gap's financial statement. Several of the numbers in the summary were wrong, and in one case, a number provided by the AI did not appear in the financial document at all.

The comparison to Lululemon's data contained wrong numbers as well. Lululemon's numbers were not accurate to begin with, which meant that the AI compared inaccurate Gap and Lululemon data.

Closing Words

There is a reason why experts advise users and organizations to verify information that is provided by AI. It may sound plausible, but verification is essential. Microsoft's AI does list its sources, but that is of no help if the source can't be used for verification, as it may not include all the data that the AI provided. Sources may be incomplete, which Microsoft and other companies should address quickly.

Microsoft's stock did not tank after its presentation, and one of the reasons for that was that the errors were not as obvious as Google's error. One would have to look up the product, the suggested bars or the financial reports, and compare them to the answers provided by the AI, to spot these errors.

All in all, it is clear that while AI may be useful and helpful, it is also miles away from providing information that one can trust without verification.

Now You: do you plan to use AI in the coming years?

I talk with ChatGPT for one hour every night and it makes a lot of mistakes too. The only one who has made no mistakes at all is the owner, who is very rich now. Thanks for the article.

They are trying to make Bing better. I'll give it a try, if it isn't that bad. But as long as Google Search is king, I don't really see myself getting into it.

When you think about it, this just shows us that these AIs are even more like humans than we thought.

When they start causing harm for monetary gain, we'll know they are truly sentient.

Question is, should we believe AI any more than anything else? It's sold as the answer to all questions. Another overhyped attempt to sell us on artificial intelligence.

John, it is probably a good strategy to verify any content or claims that you come across, be it on the Internet or elsewhere. AI does not really change that, not until an infallible AI is created.

The article's closing words say it all:

"There is a reason why experts advise users and organizations to verify information that is provided by AI. It may sound plausible, but verification is essential. Microsoft's AI does list its sources, but that is of no help if the source can't be used for verification, as it may not include all the data that the AI provided. Sources may be incomplete, which Microsoft and other companies should address quickly."

If you have to check every ChatGPT answer to a user’s quest, I don’t really see the benefit.

To answer the article's question, "do you plan to use AI in the coming years?", the answer is clearly no. No, but less as a matter of principle than as a matter of reliability. For sure nothing is bullet-proof, AI included. As I see it, the argument could be that AI is less likely to make mistakes than humans and also has a capacity for synthesis. Perhaps, yet at this time it'll be a "yes, but no thanks".

+100, nothing to add.

Checking the accuracy of the writing and redoing it when necessary is faster than doing the bulk of the writing yourself. That's the benefit.

The whole thing is guided by the user.