Google Bot has privileges, or, how to browse the Internet as Google's Bot

Last year I reviewed a method to load all content on the Experts-Exchange website by disguising the browser, or more precisely the browser's user agent header, as Googlebot.

The site blocked unregistered users from accessing its content but allowed Googlebot to access it.

Apparently a similar story is making its way around the Internet these days, with a more detailed approach that lists the steps you have to take to be identified as Googlebot.

It is not enough to simply change the User-Agent string to Googlebot if the website in question checks for cookies, uses JavaScript for detection, or checks the IP address to make sure it is indeed in Google's IP range.

Modifying just the User-Agent might be enough to gain access to some websites, but it will probably fail on others because they perform additional checks.
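To illustrate what such an additional check can look like on the server side, here is a minimal Python sketch of the reverse-then-forward DNS test that Google documents for verifying Googlebot. The function name `looks_like_googlebot` and the injectable lookup parameters are my own assumptions for the example, not something taken from any particular site.

```python
import socket

# Host name suffixes Google documents for its crawlers.
GOOGLE_SUFFIXES = (".googlebot.com", ".google.com")


def looks_like_googlebot(ip, reverse_lookup=None, forward_lookup=None):
    """Return True if `ip` passes a reverse-then-forward DNS check.

    The lookup functions can be swapped out (hypothetical parameters,
    added here so the logic can be tried without network access).
    """
    if reverse_lookup is None:
        reverse_lookup = lambda addr: socket.gethostbyaddr(addr)[0]
    if forward_lookup is None:
        forward_lookup = lambda host: socket.gethostbyname_ex(host)[2]
    try:
        # Step 1: the IP must resolve to a Google crawler host name.
        host = reverse_lookup(ip)
        if not host.endswith(GOOGLE_SUFFIXES):
            return False
        # Step 2: that host name must resolve back to the same IP.
        return ip in forward_lookup(host)
    except OSError:
        # Failed lookups are treated as "not Googlebot".
        return False
```

A site that runs a check like this will not be fooled by a changed User-Agent string alone, which is why the IP address is one of the factors listed below.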

Google Bot user agent

Here are the five factors that are important:

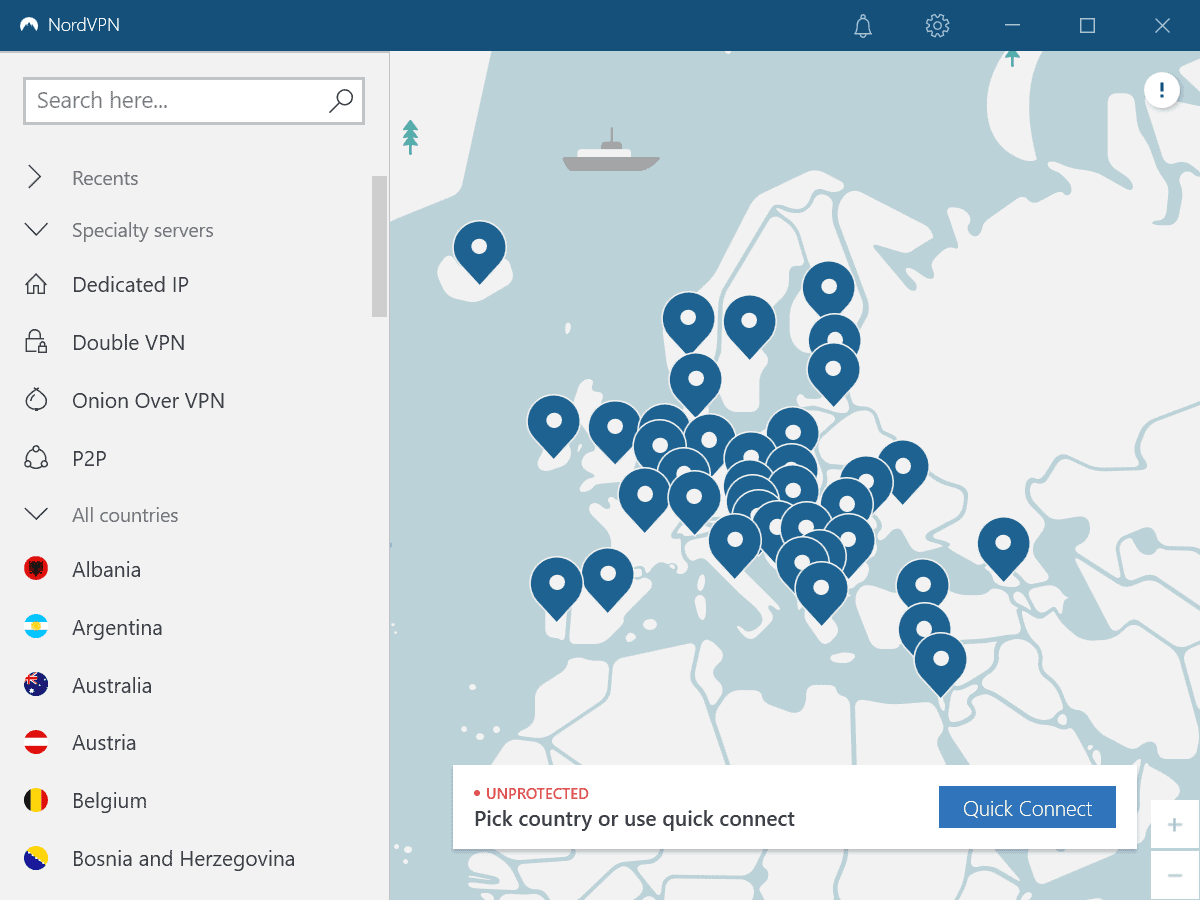

- IP: Use Google Translate to surf the site. You can alternatively use a web proxy or regular proxy, use the anonymizer Tor or a virtual private network for the same effect.

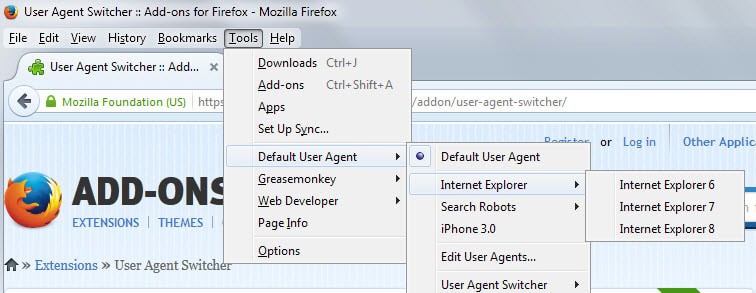

- User-Agent: Use the Firefox Extension User-Agent Switcher and add the information about Googlebot.

- JavaScript: Use a Firefox extension like NoScript to turn it off on sites you visit (or more precisely, to stop any JavaScript program from running automatically).

- Cookies: Use the Firefox extension CookieSafe to block cookies that the site tries to set.

- Referrer: Use the Firefox Extension RefControl to disable the Referrer.

Keep in mind that it may be sufficient to use some of the options and not all of them. Depending on the website, you may only need to change your user agent or IP to access the contents. The only thing you can do to find out is to test it using various setups.
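If you prefer to test a site from a script instead of the browser, the following Python sketch covers four of the five factors at once: it sends Googlebot's User-Agent string, sends no Referer, keeps no cookies, and runs no JavaScript because it is not a browser. It does not change your IP address. The helper names `build_googlebot_request` and `fetch` are made up for this example, and whether a given site responds differently is something you still have to test.

```python
import urllib.request

# The User-Agent string Googlebot commonly identifies itself with.
GOOGLEBOT_UA = (
    "Mozilla/5.0 (compatible; Googlebot/2.1; "
    "+http://www.google.com/bot.html)"
)


def build_googlebot_request(url):
    """Build a request that claims to be Googlebot.

    Only the User-Agent header is set, so no Referer and no Cookie
    header are sent along with it.
    """
    return urllib.request.Request(url, headers={"User-Agent": GOOGLEBOT_UA})


def fetch(url, timeout=10):
    """Fetch `url` and return (status code, body bytes)."""
    # An opener built without HTTPCookieProcessor never stores or
    # replays cookies the site tries to set.
    opener = urllib.request.build_opener()
    with opener.open(build_googlebot_request(url), timeout=timeout) as resp:
        return resp.status, resp.read()
```

Calling `fetch()` with the address of the page you want to test returns the status code and the raw page body, which you can compare with what your regular browser receives.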

The website describing the techniques is currently down because it was not able to handle the massive number of visitors that Digg and other sites sent to it.

Update: The website is up again and you can find all relevant information on it.

Update 2: The website is down again and it is unlikely that it will come back up again. I have removed the link, but the information above should be enough to get you started.

The one thing that you need to do at all times is to set the user agent of your browser to Googlebot. If that is not enough, you may need to make use of some or all of the other four factors outlined above to get it to work.

“Websites don’t grant Googlebot special access to their pages so that people go to them and have to register to see.

Googlebot just breaks in. It’s not a lure to make you register.”

That’s crazytalk.

In case that wasn’t very clear, I’ll simplify it.

Websites don’t grant Googlebot special access to their pages so that people go to them and have to register to see.

Googlebot just breaks in. It’s not a lure to make you register.

Actually it never was “a trick”. It never worked to begin with. It’s just been people saying “oh I bet this would work”. It doesn’t work because the concept is flawed. Googlebot does indeed have special access to “registered only” pages, but it’s not because of its user-agent, IP address, or anything like that.

Websites (forums particularly) don’t allow Googlebot special permissions, and they don’t check to see if Googlebot’s IP or user-agent is spoofed, unless an admin does it manually out of suspicion. The most they do is disallow certain parts of the site to Googlebot through the use of Robots.txt.

The REAL reason Googlebot gets into “registered only” pages is because the website didn’t disallow Googlebot from crawling them in Robots.txt, and Googlebot just breaks in essentially, because the only rules it follows are those defined in Robots.txt.

I’m not sure what methods it uses to do so, but as an admin on several forums, I know for a fact that it just “breaks into” our registered-users-only pages, as I’ve seen Googlebot show up in the logs accessing anything from the main index to user profiles and private messages, even the Moderators Only sections of the forum, as a guest (or anonymous) user.

The only way to stop it is Robots.txt or to ban the entire Googlebot IP range (and even that doesn’t work for long because there’s always new IP ranges).

So pretending you’re Googlebot will never, ever work. You have to actually BE the Googlebot software.
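For reference, Robots.txt rules of the kind mentioned in this comment can be tried out offline. This is only a small sketch using Python's standard `urllib.robotparser`; the forum paths, the host name and the helper `googlebot_may_fetch` are made up for illustration and do not come from any real site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical Robots.txt for a forum that wants to keep Googlebot
# out of its members-only areas.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /members/
Disallow: /private-messages/
"""


def googlebot_may_fetch(robots_txt, url):
    """Return True if the given rules allow Googlebot to crawl `url`."""
    parser = RobotFileParser()
    # parse() takes the file content as an iterable of lines.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("Googlebot", url)
```

With these rules, a URL under `/members/` is reported as disallowed for Googlebot, while a page such as `/index.php` is still allowed.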

Just use Google’s cache of the page.

hey anyone know how to do so in opera browser? like i do opera:config then go to user agent but i dont know how to add google bot.

Thanks Martin,

I tried several different combinations of googlebot found on the Internet but it hasn’t worked for me on any website I have tried so far.

I guess webmasters have wised up since this trick has been around for almost 3 years.

Has anybody had any more luck with this?

Threshold try the following settings:

Description: Googlebot 2.1

User-Agent: Googlebot 2.1

Well, in case this actually works, it would be nice if you explained how to fill in the Googlebot details in User-Agent-Switcher for the techie-challenged out here.

What goes into each field?

This trick is reported all over the net, but not one site explains it clearly.

I think everyone should report sites like EE to Google for violating their policy of showing the bot one page and giving users a completely different page.

I only use the cached version of EE pages now, as the normal linked page is completely useless.