Nvidia AI Workbench: Breakthrough or just hype?

At SIGGRAPH, the annual computer graphics conference, Nvidia unveiled AI Workbench, a toolkit designed to let users create, test, and customize generative AI models on ordinary PCs or workstations.

What makes the platform noteworthy is that it lets users move those models smoothly from a local machine to larger-scale deployments in data centers or on public cloud infrastructure.

“In order to democratize this ability we have to make it possible to run pretty much everywhere,” Nvidia CEO Jensen Huang said during his keynote at the event.

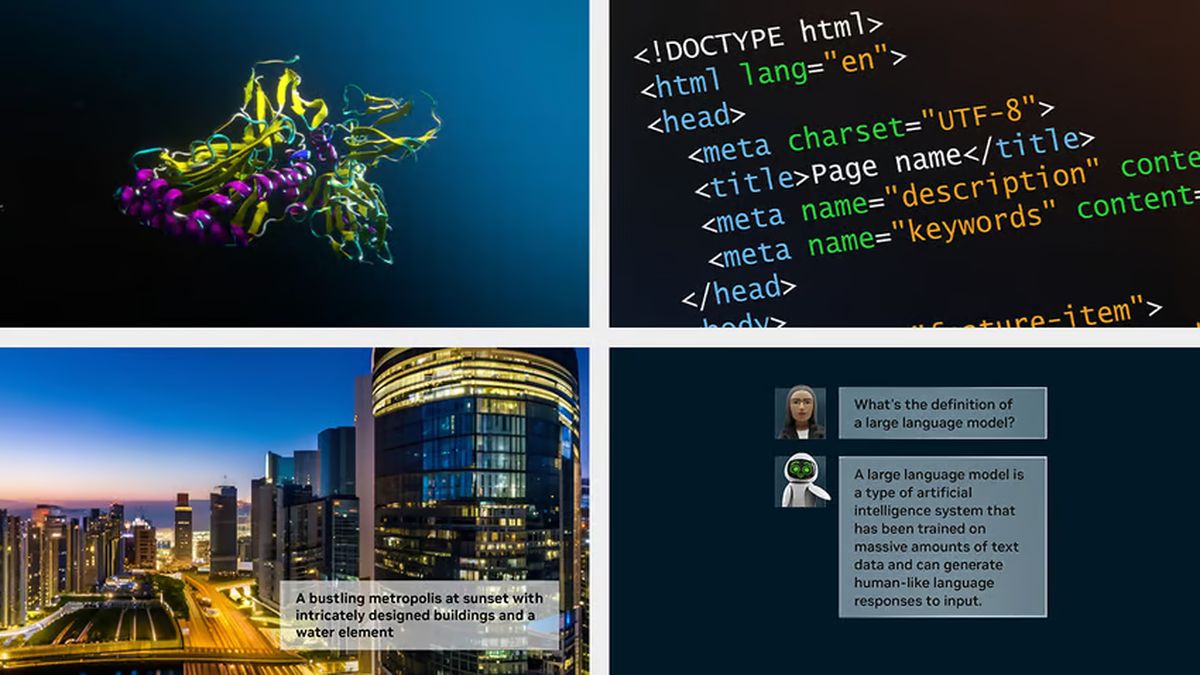

What is Nvidia AI Workbench?

AI Workbench is accessed through a straightforward interface on a local workstation. It lets developers fine-tune and test models from popular repositories such as Hugging Face and GitHub using their own proprietary data. When they need to scale up, they can shift the work to cloud computing resources.

Manuvir Das, Nvidia's VP of enterprise computing, explains that AI Workbench grew out of how complex and time-consuming it is to customize large AI models. For enterprise-scale AI projects, finding the right frameworks and tools across many repositories is already difficult, and the difficulty grows further when projects have to move between infrastructures.

Indeed, the path to getting enterprise models into production is full of obstacles. In a poll by KDnuggets, the data science and business analytics platform, most responding data scientists reported that 80% or more of their projects stall before a machine learning model is ever deployed.

With AI Workbench, Nvidia aims to address these challenges by offering a more streamlined way to develop, test, and deploy AI models. If it works as intended, it could raise the share of enterprise AI projects that actually reach production.

“Enterprises around the world are racing to find the right infrastructure and build generative AI models and applications. Nvidia AI Workbench provides a simplified path for cross-organizational teams to create the AI-based applications that are increasingly becoming essential in modern business,” Das said in a canned statement.

It remains to be seen how much AI Workbench actually simplifies the development process. Still, as Das pointed out, the platform lets developers pull models, frameworks, SDKs, and libraries for data preparation and visualization from open-source resources into a single, unified workspace.

The explosion of interest in AI, especially generative AI, has produced a surge of tools for adapting large, general-purpose models to particular needs. Startups are working to make this customization easier for companies and individual developers, without requiring heavy investment in cloud computing.

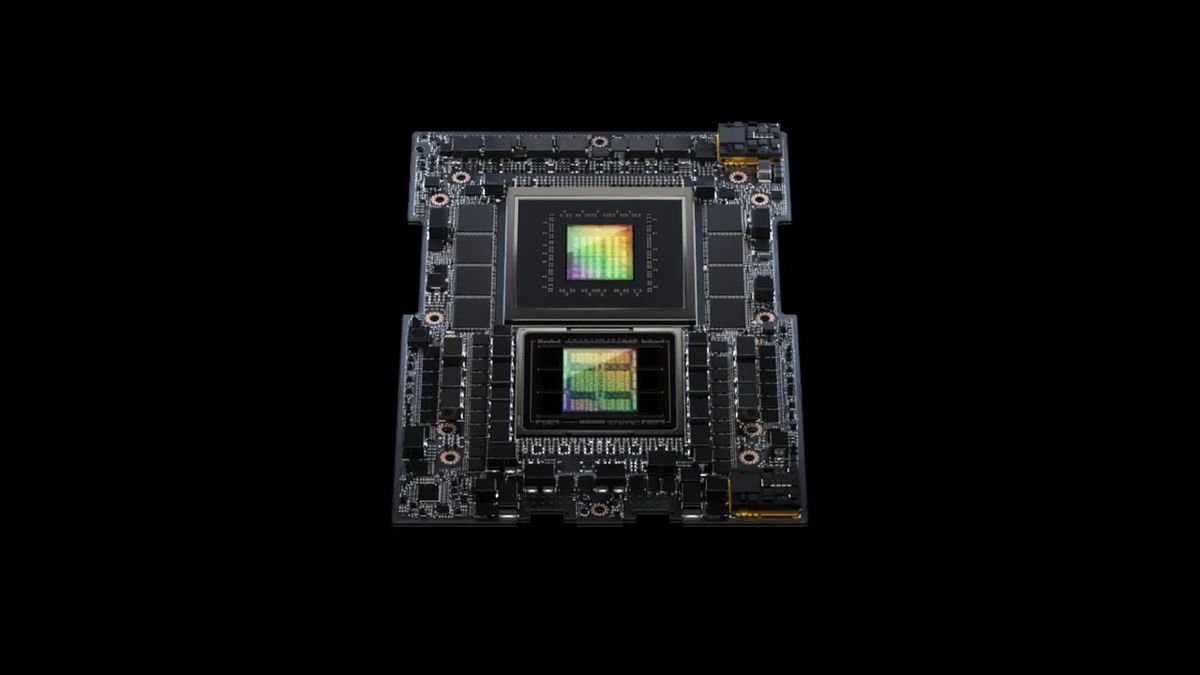

Nvidia's AI Workbench departs from the conventional cloud-centric approach to AI development. It emphasizes local fine-tuning, a strategy that conveniently aligns with Nvidia's business of selling AI-accelerating GPUs. While Nvidia's marketing subtly steers users toward its RTX lineup, the local-first approach may appeal to developers who want more independence from any single cloud provider in their AI experimentation.

The boom in AI-driven demand for GPUs has lifted Nvidia's financial results to new highs. The company's market cap briefly crossed $1 trillion in May after it reported quarterly revenue of $7.19 billion, up 19% from the preceding fiscal quarter. AI Workbench positions Nvidia to capitalize further on the expanding AI market by offering a more flexible, locally grounded approach to AI development.
