Can Siri read lips? Apple thinks so

Siri, Apple's virtual assistant, is constantly evolving. In recent years, Siri has become more accurate at understanding voice commands, even in noisy environments. But what if Siri could also read lips?

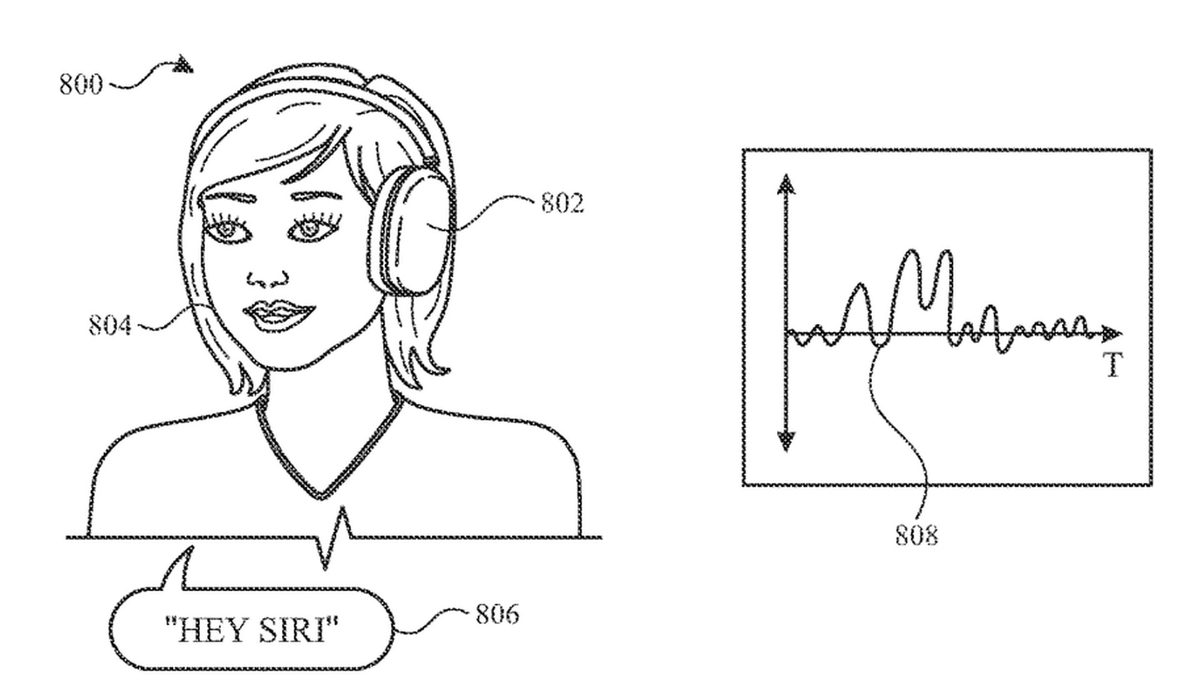

A new patent application from Apple suggests that this may be the future of Siri. The application describes a system that would track the movements of a person's lips, face, neck, or head and then use artificial intelligence to determine whether those movements indicate speech, such as a spoken keyword.

Keyword Detection Using Motion Sensing

Apple, as first reported by AppleInsider, acknowledges the limitations of speech recognition technologies like Siri. Microphones that constantly listen for a user's voice consume a lot of battery life and computing power, and the audio they capture can be distorted by background noise. Apple's proposed system would not need the iPhone's camera to work. Instead, the device's motion sensors would capture movements of the user's lips, neck, or head, and software would analyze that data to decide whether the movements indicate human speech. The patent application is titled "Keyword Detection Using Motion Sensing."

According to the patent, these motion sensors could include accelerometers and gyroscopes, which are more resistant to interference than microphones. The patent describes how this motion-sensing technology might be built into AirPods, and it also makes a vague reference to "smart glasses." Either device would then transmit the data to the user's iPhone. According to the filing, the hardware would be able to detect even very small movements of the face, neck, or head. Even though Apple's hopes for smart glasses faded long ago, the company still has high expectations for its Vision Pro headset.

The patent application does not specify when or if Apple plans to bring this technology to market. However, it is an interesting example of how Apple is constantly looking for new ways to improve Siri.

A lip-reading Siri could have several benefits. First, it would allow Siri to understand what someone is saying even if they are wearing a mask or are in a noisy environment. This would be especially helpful in situations where speaking loudly or clearly is not practical, such as in a crowded room or while driving. Second, lip reading could improve the accuracy of Siri's voice recognition, because the system could combine the detected lip movements with the audio to work out more reliably what someone is saying.

There are also challenges that would need to be addressed before a lip-reading Siri could become a reality. One is that lip reading is not always accurate: people articulate the same word in different ways, and different words can produce similar lip movements. Another is interference, because the system would need to reliably distinguish the movements produced by speech from background noise and other motion.
