How Webmasters use social sites to create a keyword monopoly

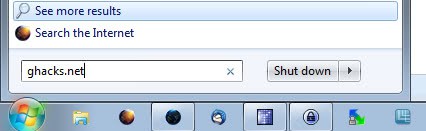

The average user assumes that searching for a keyword in Google returns a listing of ten different websites that are the best matches for that keyword. This common belief is wrong. Clever marketers have found ways to make their website appear in many of the results on that page, sometimes in nearly all of them.

There are different approaches, though. The first is to get a so-called double ranking. If two pages of a website appear on the same results page, the lower-ranking one is moved to just below the higher-ranking one. If you hold the number 1 spot, your second page gets the number 2 spot even if it would only qualify for number 9 or 10 on its own.
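The double-ranking reordering can be illustrated with a few lines of Python. This is only a sketch of the behaviour described above, not Google's actual logic; the function name `group_double_rankings` and the example URLs are made up for illustration.

```python
from urllib.parse import urlparse


def group_double_rankings(results):
    """Return the results with a site's second page pulled up directly
    below its first page (hypothetical model of a double ranking)."""
    grouped = []
    placed = set()  # indexes of pages that were already pulled up
    for i, url in enumerate(results):
        if i in placed:
            continue
        grouped.append(url)
        host = urlparse(url).hostname
        # Find the next lower-ranked page from the same host, if any.
        for j in range(i + 1, len(results)):
            if j not in placed and urlparse(results[j]).hostname == host:
                grouped.append(results[j])
                placed.add(j)
                break
    return grouped


page = [
    "https://example.com/a",
    "https://other.org/",
    "https://third.net/",
    "https://example.com/b",  # would rank 4th on its own
]
print(group_double_rankings(page))
```

In this example the second `example.com` page jumps from position 4 to position 2, pushing the other two sites down by one spot.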

That's two spots out of ten already. What happens if you submit a site to a social news or bookmarking website? Right, it gets a new entry in the search engines that points to the original URL the marketer wants to promote. Many social sites like Digg have such a high standing with Google that their pages often show up in the top 10 or 20 results when you search for a keyword.

Another method currently works as well. Google treats subdomains as if they were different domains. Marketers create keyword-rich subdomains and push those into the search engines, too. So, even if you expect to see 10 different results, the reality could be different.
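To get a feel for how subdomains can inflate the apparent variety of a results page, you can compare the number of distinct hostnames with the number of distinct sites behind them. The sketch below is a rough approximation: `naive_site` is a hypothetical helper that simply keeps the last two labels of the hostname, which is wrong for suffixes like `.co.uk`, and the URLs are invented.

```python
from urllib.parse import urlparse


def naive_site(url):
    """Approximate the owning site by the last two hostname labels.
    Assumption: simple suffixes like .com or .org only."""
    host = urlparse(url).hostname or ""
    return ".".join(host.split(".")[-2:])


def count_hosts_and_sites(urls):
    """Return (distinct hostnames, distinct approximate sites)."""
    hosts = {urlparse(u).hostname for u in urls}
    sites = {naive_site(u) for u in urls}
    return len(hosts), len(sites)


results = [
    "http://cheap-widgets.example.com/",
    "http://buy-widgets.example.com/",
    "http://www.example.com/",
    "http://other.org/",
]
print(count_hosts_and_sites(results))  # (4, 2)
```

Four results that look like four different addresses turn out to belong to only two sites.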

Update: The situation has evolved even further over the last few years. With Google adding more of its own products to the search results, webmasters now use those products to dominate a results listing. If they see YouTube links built into the results for a keyword, they create and upload videos to YouTube and then optimize them so that their videos appear on Google's front page.

Yup, I’ve experienced this first-hand. The marketing guys at work are always after me to add more keywords in my writing.