Firefox 59: mark HTTP as insecure

The Web is migrating from predominantly HTTP to HTTPS. More than 66% of all Firefox page loads are now secured by HTTPS, an increase of 20% compared to the figure from January of this year.

HTTPS encrypts the connection to protect it against tampering and spying. The rise of Let's Encrypt, a service that offers certificates for free, and the push toward HTTPS by Google Search and by browser makers surely played a role in the big year-over-year increase.

Most web browsers will mark non-HTTPS websites as insecure beginning in 2018. Plans are already underway: Google Chrome, for instance, already marks HTTP sites with password or credit card fields as insecure, and Mozilla has announced plans to deprecate non-secure HTTP in Firefox and highlights HTTP pages with password fields as insecure as well.

Mozilla added a configuration switch to Firefox 59 -- currently available on the Nightly channel -- that marks any HTTP site as insecure in the web browser.

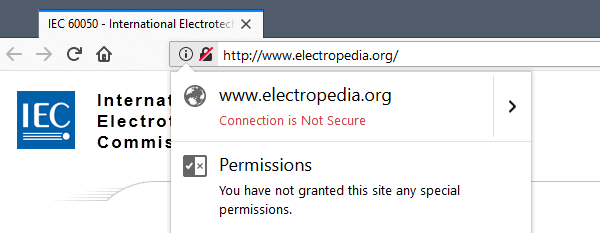

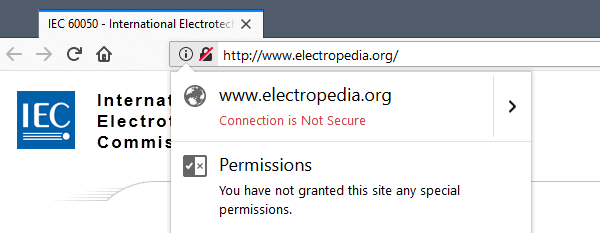

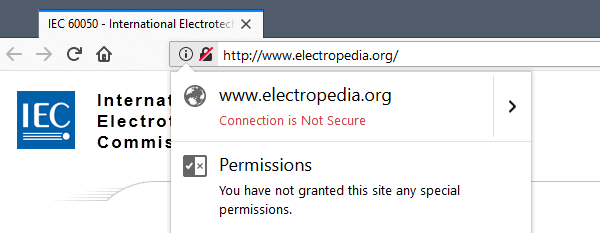

Firefox displays a crossed-out lock symbol on non-secure sites. A click on the icon displays the "connection is not secure" notification that current versions of Firefox display already.

The switch makes the fact that the site's connection is not secure more visible in the browser. It is only a matter of time until this is implemented directly so that users won't have to flip the switch anymore to make the change.

You can make the change right now in Firefox 59 in the following way:

- Load about:config?filter=security.insecure_connection_icon.enabled in the browser's address bar.

- Double-click on the preference.

A value of true enables the insecure connection icon in the browser's address bar; a value of false returns to the default state.

Firefox users who only want the indicator in private browsing mode can do that as well:

- Load about:config?filter=security.insecure_connection_icon.pbmode.enabled in the address bar.

- Double-click on the value.

A value of true shows the new icon in private browsing mode; a value of false does not.
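The two preferences above can also be set without opening about:config. The following is a minimal sketch in Python, assuming a Firefox profile directory in which a user.js file is read at startup. The helper names `format_pref` and `write_prefs` and the `PREFS` dictionary are hypothetical; only the preference names come from the steps above.

```python
from pathlib import Path

# Preference names taken from the article. Setting both to True is an
# assumption for illustration; pick the values you actually want.
PREFS = {
    "security.insecure_connection_icon.enabled": True,
    "security.insecure_connection_icon.pbmode.enabled": True,
}


def format_pref(name: str, value: bool) -> str:
    """Return one user.js line for a boolean preference."""
    literal = "true" if value else "false"
    return f'user_pref("{name}", {literal});'


def write_prefs(profile_dir: Path, prefs: dict) -> Path:
    """Append one user_pref line per preference to user.js in profile_dir."""
    target = profile_dir / "user.js"
    lines = [format_pref(name, value) for name, value in prefs.items()]
    with target.open("a", encoding="utf-8") as handle:
        handle.write("\n".join(lines) + "\n")
    return target
```

Calling `write_prefs(Path("/path/to/your/profile"), PREFS)` with the path of your own profile folder (the path shown is a placeholder) appends both lines; the change should take effect after Firefox restarts.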

Closing Words

It is only a matter of time before browsers like Chrome or Firefox mark any HTTP site as insecure. Websites that still use HTTP at that time will likely see a drop in visits as a result.

Now You: Do you access HTTP sites regularly? (via Sören)

Next we should address Cloudflare’s anti-DDoS technology, which involves making Cloudflare an invisible man in the middle for all your HTTPS connections to websites using their services. That’s a few million sites, with several orders of magnitude more users in total.

Cloudflare decrypts HTTPS traffic, analyzes it, re-encrypts it and sends it along to its destination. All you see is the destination’s name.

Here’s a blocker: https://addons.mozilla.org/en-US/firefox/addon/block-cloudflare-mitm-attack/

All ( ? ) CHROME Clones Completely Block Sites With RC4 HTTPS Issues – 1 Of The Few Reasons I Still Keep FF As A Backup Browser.

What Now ?

—

XPOCALYPSE FOREVER !

—

https is full of flaws, every few months there’s some issue… surely solved in a little timespan, but who knew that flaw before it went public?

I find these changes so silly, I mean… who cares if now there’s a prominent icon to show that you’re in a HTTP site? It’s like Mozilla and Google are afraid of shoving the insecure status of a page in the face of the user…

And it gives the impression to the user that HTTPS is a “bulletproof” solution, which it isn’t.

“these “security” companies”

I still remember COMODO, today in charge of certificates etc, spreading its dangerous toolbars redirecting to porn sites.

It is interesting to follow this encryption / VPN / SSL/TLS security mania. The only thing these technologies secure you from, is from ordinary citizens/script kiddies. They do not secure you from people that have the technical know-how, expertise and equipment that can bypass all of those without much trouble. Who says that your TLS/SSL connection is really secure – do you trust the CAs not to share their master keys with third parties – this is a joke to be honest. This security mania looks more to me, like a move to generate business for a bunch of these “security” companies. The DPI / sniffing equipment today is able to sniff/bypass/monitor all of the above and you will be none the wiser about it.

That argument is like saying because there are expert locksmiths and the police who can simply knock down your door, why bother locking any doors?!

You’re thinking about it in black-and-white terms, where either you’re totally secure or totally insecure. That isn’t the case. Is TLS completely 100% impervious to three-letter agencies? No, it isn’t; there are indeed ways around it. But it drastically raises the cost. Collecting or tampering with unencrypted HTTP sessions is simple and can be done with almost no risk of detection if you have the proper position on the network.

Using TLS (or a VPN, etc.) turns that into an active attack, and creates several ways that users (and security people in general) will see alarms going off. A man-in-the-middle attempt will cause the browser to scream about a certificate error. A compromised CA that’s been used to issue malicious certs runs the risk of being noticed via certificate-transparency logs. Know what scares the NSA? The prospect of running an attack and then having it be front-page news in the security and tech world within a few days.

It’s worthwhile to encrypt everything (instead of just stuff that “needs” it) because ubiquitous encryption narrows the space where bad things can be gotten away with. Remember Verizon inserting tracking headers in HTTP requests, or Comcast injecting JavaScript popups into pages? TLS, flawed as it is, kills stuff like that dead. And the uses that really do need strong crypto don’t stand out when encrypted traffic is everywhere.

You don’t have to make interception perfectly, theoretically impossible. You just have to make it so difficult and costly that it doesn’t make any sense to do it.
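The certificate check mentioned above can be illustrated with Python’s standard `ssl` module. This is a minimal sketch, not a description of how any particular browser works: it assumes the default client settings of the `ssl` module, and the function names `describe_default_tls_checks` and `open_verified_connection` are hypothetical.

```python
import socket
import ssl


def describe_default_tls_checks() -> dict:
    """Report which checks a default client-side TLS context enforces."""
    ctx = ssl.create_default_context()
    return {
        "certificate_required": ctx.verify_mode == ssl.CERT_REQUIRED,
        "hostname_checked": ctx.check_hostname,
    }


def open_verified_connection(host: str, port: int = 443) -> str:
    """Connect to host and return the negotiated TLS version.

    Raises ssl.SSLCertVerificationError if the certificate chain is not
    trusted by the local CA store or does not match the host name.
    """
    ctx = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=10) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            return tls.version()
```

With these defaults, an interceptor that presents a certificate the local trust store does not accept causes the handshake to fail with an error instead of silently succeeding, which is the “alarm” the comment refers to.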

@techamok: Google is a bad example, because they also own a browser, and do things like certificate pinning or checking which certificates Google sites (or fake ones in your example) present to the browser, so they’d catch the ISP pretty quickly.

In general, what you describe can be defeated by Google-like techniques, or by comparing behaviour across ISPs… something that may be done in an automated, crowd-sourced way down the line! (if it doesn’t already exist, I haven’t researched it)

TLS /does/ raise the bar considerably. Listen to Some Anon.

Source: I work in IT Security.

The MITM will cause the browser to scream only if the traffic flow to the CAs and the browser / OS built-in CA stores have not been manipulated. If your ISP can redirect DNS requests and rewrite network packets, it has the equipment / ability / know-how to manipulate everything on the network, including making you believe that you are visiting Google when in fact you may be visiting something else entirely. And yes, there are verification mechanisms, but they are so primitive that anyone on the ISP network, a MITM intruder or anyone else, can fool them easily. And inspecting / modifying TLS/SSL today is not much more expensive, because of the advent of a whole new generation of chips/ASICs/accelerators that can do native SSL/TLS processing/offload for encryption standards/algorithms, and because of the advent of big data processing. If an ISP wants to sniff/decrypt SSL/TLS it will do it, and your browser will behave the same way as it behaves now; you will not see any warnings, as an ISP is a man in the middle and can redirect your CA verification / OCSP and other requests to the nodes it controls. The possibility of DNS spoofing alone can make the whole SSL/TLS story a moot point.

And yes you may change your DNS servers on your computers, but again, an ISP can rewrite those requests and point them to their own servers, and then just return a response as if it comes from the DNS you entered on your computer.

Well said!

“The DPI / sniffing equipment today is able to sniff/bypass/monitor all of the above and you will be none the wiser about it.”

My understanding is different, if you have updated info I certainly would like to see a link. Maybe differentiating the capabilities of someone like the NSA as compared to a common ISP would be useful. I wouldn’t be surprised that a government entity has upgraded capabilities. For that matter, the NSA knowing about my fetish for “milk shakes” doesn’t really worry me. ;)

“HTTPS will not prevent DPI (Deep Packet Inspection) from looking at the TCP packet and examining the destination port to guess what protocol it is for. But it will prevent the DPI from learning the actual application data payload of the protocol.”

The eternal problem of relying on so-called expertise. Delegating one’s security to security experts is one thing (and unavoidable when the user is not an expert himself); believing that doing so is an international passport to security is another. Maybe an analogy can be drawn with antivirus and “universal” anti-malware solutions, which can lead a naive user to believe he is entitled to move around the Web carelessly.

Adopting security tools: yes, but relying on them as if they were the cyber savior: no, for sure. I believe we should always behave on the Web as if we had zero protection, whatever the shield.

@jupe:

> Yeah I run into enough certificate errors as it is now, the more sites that switch the more annoying exceptions I will have to make.

This sounds like a MITM problem with your security software. If your security software offers a feature like “HTTPS scanning” (almost all have such a feature), really, you should disable this feature. There is no security benefit but it causes problems like yours.

Not sure I understand this obsession with HTTPS for everything – why should, say, the BBC News website necessarily move to HTTPS if all it does is provide news stories with no requirement for logging in? Some website types simply don’t need the added hassle and overhead.

https://doesmysiteneedhttps.com/

ShintoPlasm: “why should, say, the BBC News website necessarily move to HTTPS if all it does is provide news stories with no requirement for logging in?”

1) BBC News does provide user accounts (called iBBC) for comments, bookmarking of news topics & programmes, customizing locality preferences (e.g. weather reports, traffic news, travel updates), etc. Since some users prefer to log in (or have to log in for commenting purposes), it is only fair that the BBC ensures that their logins are secure, even if there are people who never make use of this function.

https://www.bbc.co.uk/usingthebbc/account

https://account.bbc.com/account

2) BBC News has already migrated to HTTPS for parts of its website, initially on a smaller scale around mid 2014, followed by increasingly larger phases since April 2016.

The below blog post by BBC explains the benefits of using HTTPS, which are applicable to all internet users (& not just BBC News site users). HTTPS is not merely limited to securing logins:

http://www.bbc.co.uk/blogs/internet/entries/f6f50d1f-a879-4999-bc6d-6634a71e2e60

3) On a related note, below is BBC’s recent blog post (11 Dec 2017) detailing its adoption of HTTP/2:

http://www.bbc.co.uk/blogs/internet/entries/9c036dbd-4443-43c6-b8f7-64e5b518fc92

HTTPS is not only about securing logins, it’s also about integrity of resources, and that’s an important security aspect for _every_ website. HTTPS is also a requirement for HTTP/2, which is a good thing for website performance. And HTTPS is a ranking signal for search engines, which is very important for news websites like BBC News.

I completely agree with everything you’ve said, I am of course basing that on my very basic knowledge of the subject, such as it is.

“HTTPS is a requirement for HTTP/2 which is a good thing for website performance” which brings up one observation of mine: when changing the about:config entry ‘network.http.spdy.enabled.http2’ to false I see an increase in page load times, and I realize it can have privacy implications but… in this instance I choose performance. It seems like in the last year a huge percentage, maybe over 90%, of the websites I visit have moved to HTTPS. I can’t help but think that is a very good thing. Benchmark:

“https://www.httpvshttps.com/”

@Marti Martz,

> DNS requests are almost always visible somewhere and encryption is usually quite weak.

DNS certainly is a potential vulnerability. Maybe it would be pertinent to emphasize DNS encryption, as is done with HTTP. Personally, I’ve been running a tool called DNSCrypt for years now, in my case as DNSCrypt-Proxy, but GUI front-ends exist. I have to rely on what I’ve read and been told about potential DNS security issues and DNSCrypt’s ability to reduce them with encrypted requests.

> Translation into a more common terminology: Digital Rights Management *(DRM)*. Some resources need protection but others really don’t and violate fair use among other rights. Tread carefully.

Digital Rights Management has NOTHING to do with my comment. Please use Google or any other search engine if you don’t understand what integrity of resources means in this context.

> That’s debatable.

No, it’s not at all. The differences between HTTP/1 and HTTP/2 are facts. How much you perceive a difference depends on the website but it’s not debatable that HTTP/2 is a good thing for the performance.

> SEO has very little to do with it other than pressuring sites into using TLS *(SSL)*.

There is no doubt that SEO is an important factor for news sites. ShintoPlasm wrote about a news site. And if search engines see HTTPS as ranking signal then it has a lot to do with ShintoPlasm’s example.

> Not every site needs https

No, not every site needs https. But every site should use HTTPS. In fact there are not a lot of reasons not to use HTTPS. Maybe there are cases but in most cases it does not make sense not to use HTTPS if it’s possible.

> Don’t think that encrypted data means encrypted communications…

I didn’t say that.

> it’s also about integrity of resources

Translation into a more common terminology: Digital Rights Management *(DRM)*. Some resources need protection but others really don’t and violate fair use among other rights. Tread carefully.

> HTTPS is a requirement for HTTP/2 which is a good thing for website performance.

That’s debatable.

> HTTPS is also a ranking signal for search engines and that’s very important for news websites like BBC News.

SEO has very little to do with it other than pressuring sites into using TLS *(SSL)*. Not every site needs https especially when anonymous browsing goes nearly out the window with https. Don’t think that encrypted data means encrypted communications… DNS requests are almost always visible somewhere and encryption is usually quite weak.

> I run into enough certificate errors as it is now

It’s very big business to sell third party verification, i.e. corporate greed usually comes into play *(go figure)*. Let’s Encrypt is a step in the right direction; however, some assets still don’t need any certificates. I’m sure everyone has read about “Net Neutrality” being challenged as of late… if/when it goes, big business will dictate what you can read and where.

So long as there is always choice there shouldn’t be too much of an issue… just some ignorance from both sides of the coin.

Yeah I run into enough certificate errors as it is now, the more sites that switch the more annoying exceptions I will have to make.