Google rolls out Project Astra-powered features in Gemini AI

Google has begun rolling out new features for its AI assistant, Gemini, that enable real-time interaction through live video and screen sharing. Powered by Project Astra, these features let users ask Gemini about what their camera sees or what is on their screen, a significant step toward more context-aware AI assistance.

With the new live video feature, users can point their smartphone camera at their surroundings and interact with Gemini in real time. For instance, a user can show Gemini a live feed of their surroundings and ask questions or request help based on what the AI sees. This visual context makes Gemini more useful for providing relevant support and information.

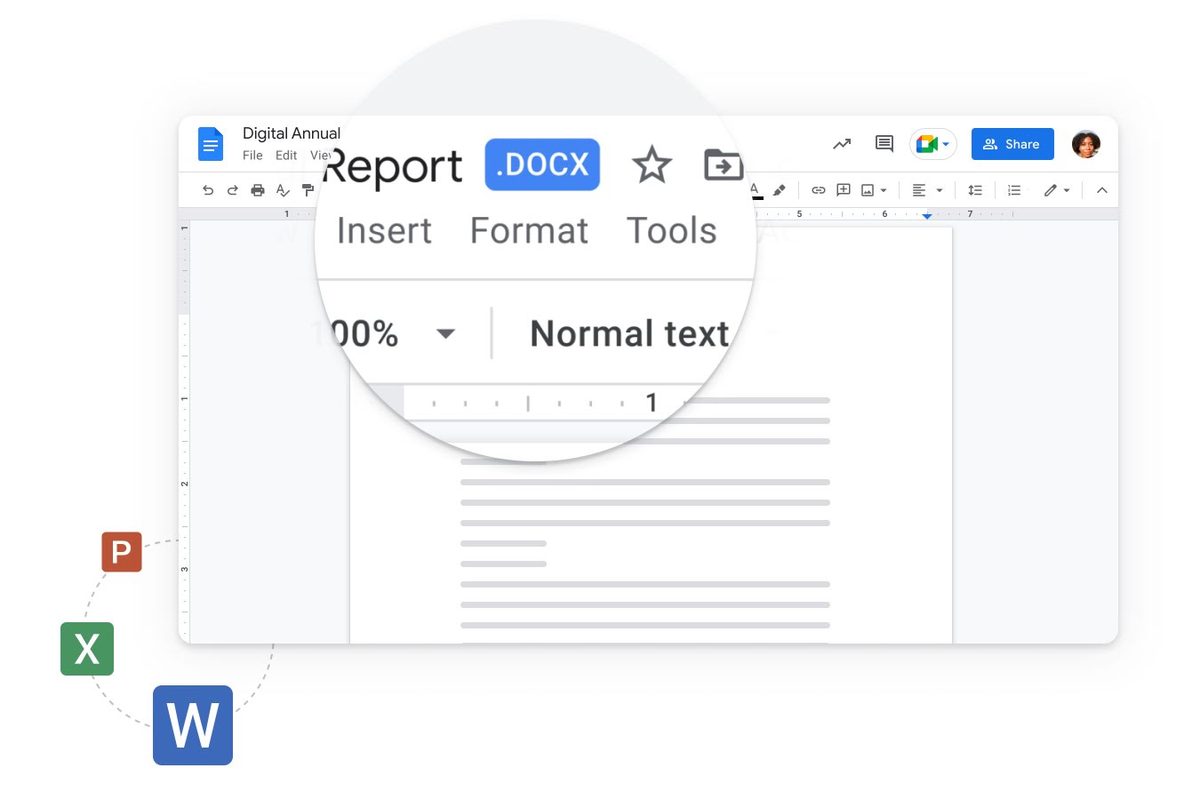

The screen sharing feature lets users share their device's screen with Gemini so the AI can analyze the displayed content and answer questions about it. This is particularly useful for navigating complex applications, troubleshooting issues, or getting recommendations based on on-screen information.

These features are part of Google's Project Astra initiative, which aims to improve AI's ability to understand and interact with the real world in real time. By integrating Astra's capabilities, Gemini can now process visual inputs more effectively, offering users a more immersive and interactive experience.

The new features are currently rolling out to Gemini Advanced subscribers as part of the Google One AI Premium plan. Some users have already reported seeing the features on their devices, which suggests a gradual deployment.

With these features, Google puts Gemini ahead of competing AI assistants. Other tech giants such as Amazon and Apple are developing similar capabilities, but Gemini's real-time video and screen sharing already give users a more dynamic and responsive experience.

Early adopters have shared positive feedback on the new features. For example, a Reddit user demonstrated Gemini reading and interpreting on-screen content, showing the practical value of screen sharing. As these features become more widely available, they could change how people interact with their devices, making AI assistance more context-aware and more integrated into daily tasks.

Source: The Verge