Is Your Site Being Outranked By Scrapers? Report Them!

So-called scraper sites, or scrapers, are one of the dark phenomena of the Internet. These sites republish the RSS feed of one or more websites on their own domain, usually without permission or a link back to the original source.

Technology in this sector has advanced in recent years, and scraping is now combined with article spinning to create unique, low-quality articles instead of 1:1 copies.
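To illustrate why spun articles are harder to spot than 1:1 copies, here is a minimal Python sketch that compares two texts by their overlapping three-word sequences (shingles) and reports the Jaccard similarity. The function names and the sample sentences are made up for this example, and this is only one simple way to compare texts, not a description of how any search engine detects copies.

```python
import re


def shingles(text, size=3):
    """Return the set of overlapping word n-grams (shingles) in text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < size:
        # Very short texts: treat the whole text as a single shingle.
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard(a, b, size=3):
    """Jaccard similarity of the shingle sets of two texts (0.0 to 1.0)."""
    sa, sb = shingles(a, size), shingles(b, size)
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)


# Hypothetical sample texts, invented for illustration only.
original = "Scraper sites republish the RSS feed of other websites without a link back."
exact_copy = original
spun_copy = "Scraper blogs republish the RSS feed of different websites with no link back."

print(jaccard(original, exact_copy))  # 1.0 for an identical copy
print(jaccard(original, spun_copy))   # much lower for the spun version
```

A webmaster could run something like this against a suspected copy: a score of 1.0 means a straight copy, while a spun article scores far lower even though a human reader would recognize it immediately.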

One would assume that search engines like Google or Bing have the tools to distinguish between original and copy and to act accordingly. Unfortunately, this is not always the case.

These sites often rank for long-tail keywords, which drive some traffic from search engines to them. And since the majority of them run AdSense ads, they make a pretty penny from that.

It is bad enough that those sites can automatically copy and paste content onto their blogs and earn money from it. Even worse, setting up a new scraper site takes no more than ten minutes, and even less with automation.

So-called auto blogs have been a trend in Black Hat communities in recent years.

Some legitimate webmasters even experience something that they should not: a scraper site outranking the site where the article was originally published.

The search engines leave webmasters who experience the issue more or less alone. They basically ask the webmaster to fill out DMCA requests and send them to the scraper sites. The problem here is that many scrapers use proxy hosting or other forms of obfuscation, so it is not possible to contact the site owner directly. Plus, webmasters usually deal with multiple scraper sites, which leads to a never-ending cat-and-mouse game, especially given how easy it is to set up new sites.

Webmasters have criticized Google in particular for this in the past, since Google could easily identify most of the domain owners through its AdSense program, which the majority of scraper sites use for monetization.

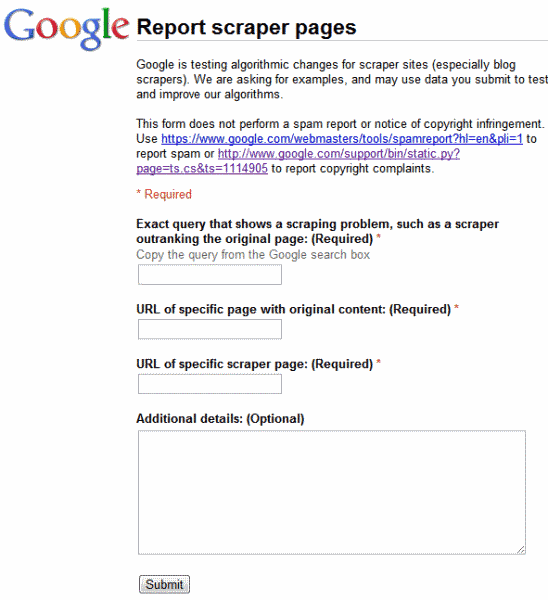

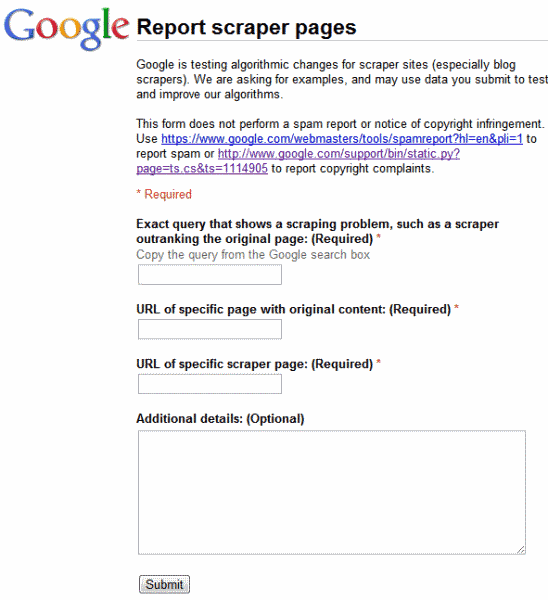

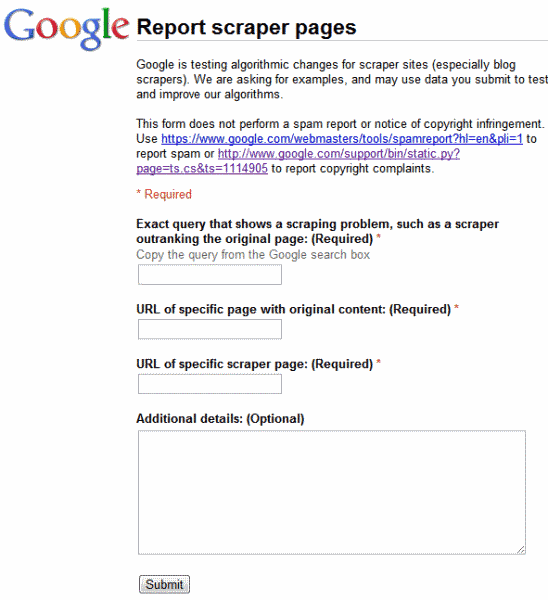

Google recently asked webmasters to report scraper pages to them. The data will be used to test and improve algorithms that target those scraper sites.

Webmasters can submit scraper sites on this web form.

It is about time that Google put an end to this practice, especially given the company's recent drive to promote "quality" sites in its search engine.

Here are several good resources for webmasters who want to do more than just report scrapers.

How to deal with content thieves

How to deal with content scrapers

Report Spam To Google

If you are a webmaster, what's your experience with scraper sites?