DupeKill is a freeware tool that can scan for duplicate files on your computer

If you are running out of storage space on your hard drive, there are a few things you can do. Run Windows' Disk Cleanup tool, delete the browser data, or use programs like WizTree to see what's taking up the most space.

You may find out that you have duplicates on your hard drives and that they take up a sizeable chunk of disk space. DupeKill is a free application that you can use to scan for duplicate files.

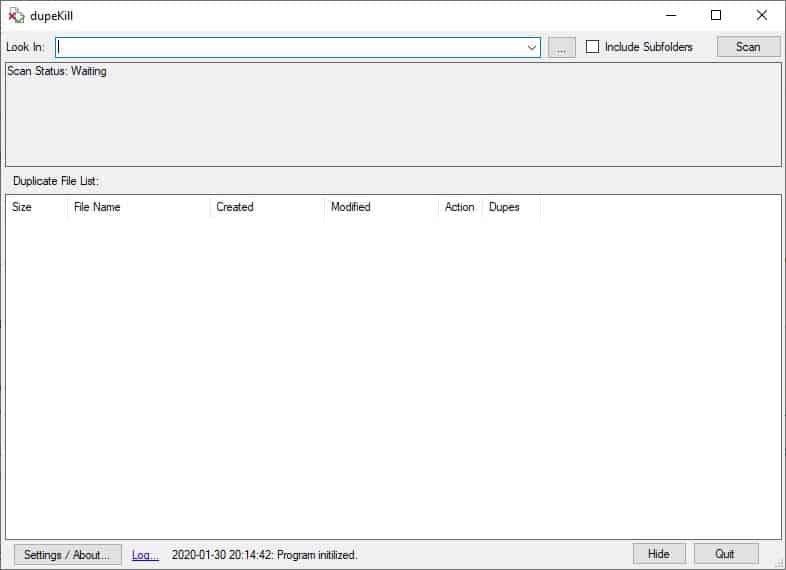

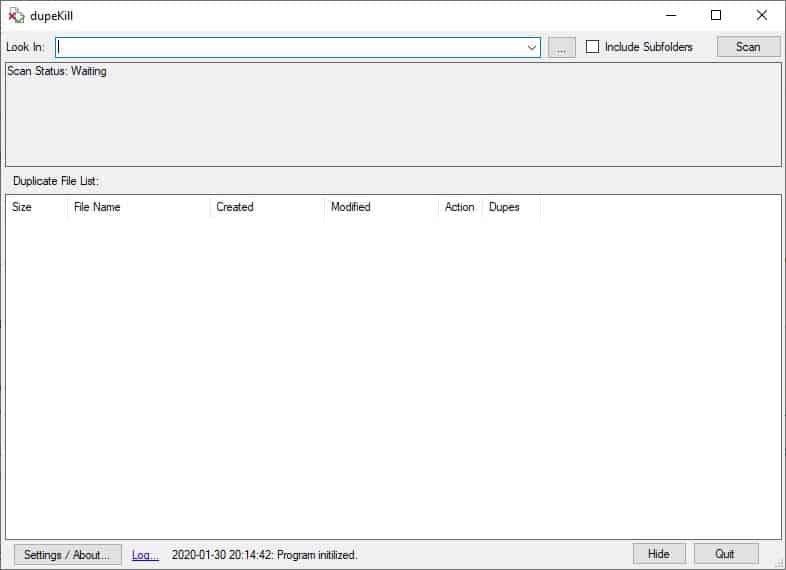

When you run the application for the first time, it will ask you where to store the settings. The options are to save these in the folder you extracted it to or in the user profile directory. DupeKill's interface is quite simple and straightforward. The top of the window has a "Look In" box. Click on the 3-dot button next to it to browse for a folder or drive which the program should search for duplicate files. Enable the "Include Subfolders" option if required and hit the Scan button to run a scan for dupes.

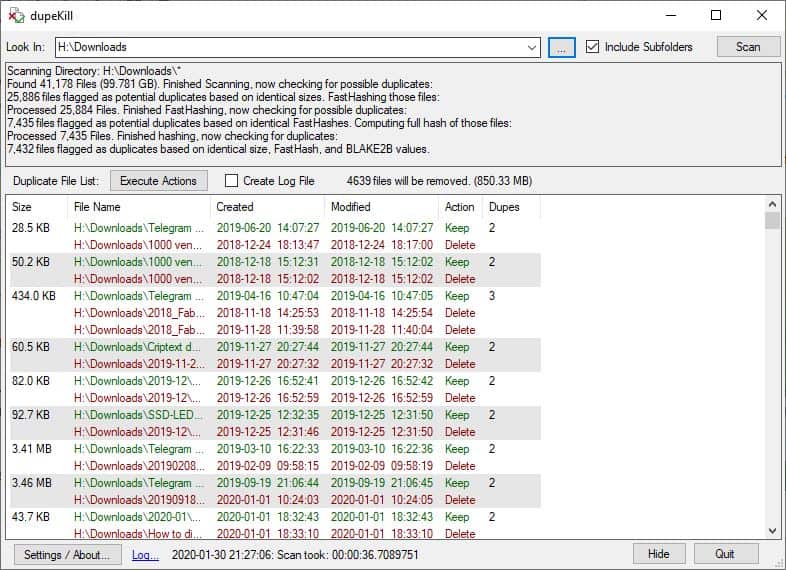

The program will scan the selected folder and check the detected files for duplicates. The scan status is displayed in a small pane below the "Look In" bar. According to the documentation, DupeKill also checks the filenames: if it finds files named "Copy Of screenshot" or "document1.txt", these are treated as the duplicates, and it suggests that you retain the original files, "Screenshot" and "Document.txt".

When the scan has been completed, the results are displayed in the large white-space area on the screen called the Duplicate File List. You can see the file properties such as the size, name and path, date created, date modified, for each duplicate file that was found.

The last 2 columns are special; the Action column displays the recommended action, i.e., to keep or delete the file or to create a link (shortcut to the original file). The Dupes column displays the number of duplicates found for a file.

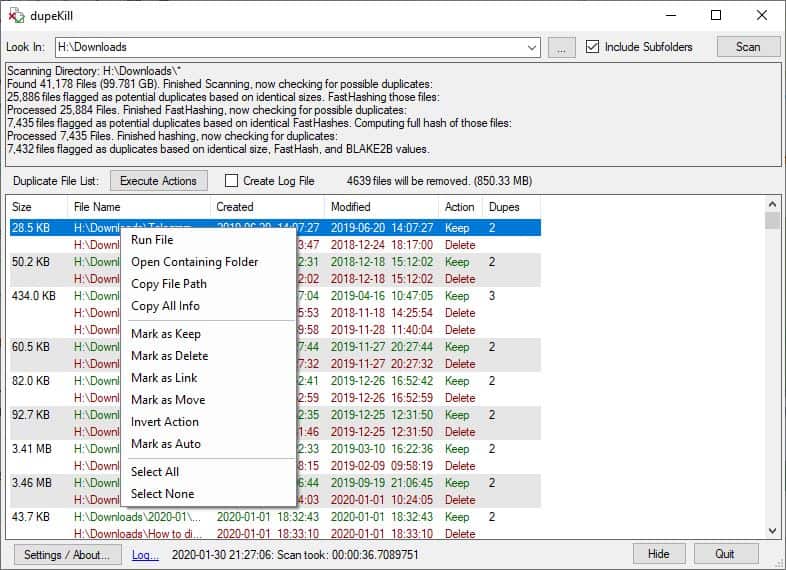

Right-click on a result to view the context menu. Here you can run the file, open the containing folder, copy the path or all info. It also has options to choose an action (Keep, Delete, Link, Move). The total number of files to be deleted and their file size are mentioned above the results pane. Confirm that the files have been correctly marked, and click on the Execute action button to erase the duplicate files.

Advanced Scan

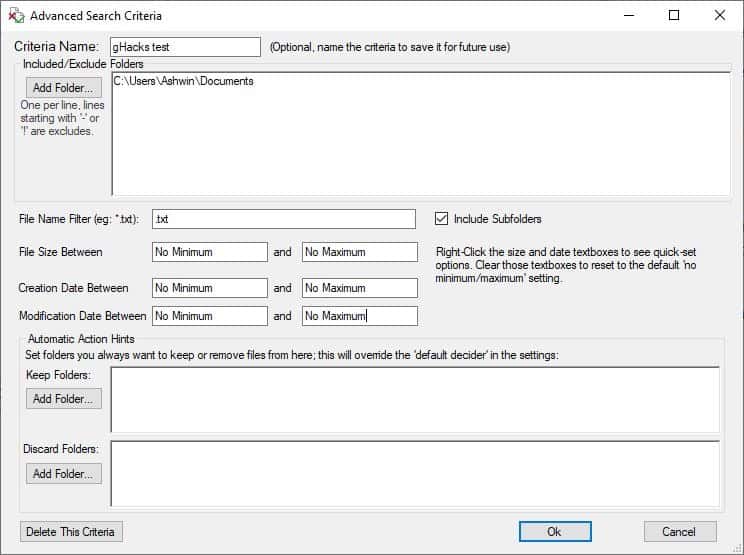

Click inside the Look In bar and select "Advanced Criteria". This opens a pop-up window with some advanced search options. You can save the "Criteria" for future use by giving it a name. You can exclude and include folders to be scanned, set a file name filter using the extension (wildcard), set a file size limit, or restrict the scan to a range for the file's created or modified date. You can also set Keep and Discard folders, which will make DupeKill mark the contents of those folders for retention or deletion respectively.

Closing Words

The program is quite fast at scanning files, and accurate too. You may want to check out alternatives such as Double File Scanner or Doublekiller as well.

I made duplicate copies of some files and placed them in various folders, and it spotted them correctly. Click on the Settings/About button to modify the program's settings, such as closing it to the tray, adding it to the Start Menu or Explorer's Context Menu, retaining history, changing the Hash Algorithm or the default action, etc.

Warning: There is always a chance of a file being incorrectly marked for deletion. Take your time to go through the results before executing the action.

The application is portable. Refer to the official website for the list of available shortcuts and command-line switches.

This looks like it compares hashes, i.e. the files have to be binary clones of each other.

There’s a tool called “similarity” which can compare images and songs for duplicates and will show a % match, because it uses medium specific fingerprints and is able to match songs which are not binary clones of each other. It even finds two separate recordings of the same song (albeit with a smaller % match)!

I just wanted to emphasize that unnecessary System Restore Points and Shadow Copies can take up a *significant* amount of drive space (though you *can* control *how much* space they use in your computer’s System properties). Windows’ built-in “Disk Cleanup” tool (in Win7, at least) gives you the option (in the “Other Options” tab) of deleting all but the most recent Restore Point, and it’s something I do before periodically cloning my system drive. In fact, I did it just now to refresh my recollection of details for this comment, freeing up *33GB of space*. And my previous Disk Cleanup was only a couple of weeks ago! So as I said: *significant*.

CAVEAT: I maintain a relatively recent, “known good” clone of my system drive, and I generate versioned backups of select categories of files using FreeFileSync/RealTimeSync, so I don’t really *need* Shadow Copies or multiple Restore Points. Your situation and calculus may be different. There are other methods (both command-line and GUI) that allow you to manage System Restore Points and Shadow Copies in a more granular fashion, but I don’t use them, so I’ll leave it to other commenters to mention them.

As for the main focus of the article, I recently completed a *massive* amount of data-file “curation” in anticipation of setting up an NAS. I had different collections of data going back 20 years, on drives from dead computers and on an assortment of external drives. There were a good number of outright duplicates and of superseded versions of the same file, and I used AllDup to winnow those out and end up with a single collection of unique, up-to-date files. AllDup is the most sophisticated duplicate file finder I’ve used, but it has a learning curve and you need to pay close attention to the options you choose. I’d previously used Auslogics Duplicate File Finder and Ashisoft Duplicate File Finder — both pretty straightforward, given that I was able to use them without “reading the manual” — but both ended up alienating me. With Auslogics, I *vaguely* remember being bombarded with banners, popups, and up-sells on its site (in a reasonably locked-down browser!) every time an update was available. As for Ashisoft, I *think* they lost me when they turned the free version into a highly crippled crippleware version.

Anyway, my “curation” work is mostly done (to the point of “good enough”), and in the future I’m going to try to be *much* more disciplined about avoiding duplicates and multiple versions of the same file in different places, because eliminating duplicate and out-of-date files can be tedious, painstaking, time-consuming work, even with a good duplicate file finder. (Automatic cross-device syncing and a single set of age- and number-capped versioned backups are your friends!)

PS: For a variety of reasons — cost, speed, security, and privacy — using cloud storage on a large scale to avoid “version splintering” doesn’t appeal to me.

From their website:

“What sets this one apart is that it will make guesses about which files you want to keep based on the filename. The app tries to select the shortest, most descriptive name as the one to keep. As an example: a file with a name containing “copy of”, or “.1.txt” would be considered less descriptive than one without; and a file named “lkePic.jpg” is considered less descriptive than “Lake Pictures.jpg”.”

I don’t think this is good. It opens the way to many false positives and false negatives. It makes choices for you.

“The app also makes use of a speed improvement I haven’t seen anywhere else: It makes an extra pass of the file list to create a ‘fasthash’. The fasthash uses small samples of the file (16 kilobytes), taken from the beginning, end, and three places in the middle; then does a duplicate check based on the hash of the samples. This is very quick for large files, and it eliminates the vast majority of potential duplicates, as most files will have different samples. Most other duplicate finders omit this step, but it really speeds things up.”

This is very confusing. Other (good) deduplication programs offer you a choice: either search on the basis of file names, and possibly other data such as creation date, or search on the basis of contents (and then, possibly choose your hash method).

What does this one do? Does it force you to use this “fast hash” method? Can you opt out of it? It does not claim to find all duplicates, anyway.

Deduplication is more complex than it seems. One of the more difficult steps is examining the results, and deciding what to do with them. It’s very easy to delete useful files by mistake at this point. Programs vary widely in the help they bring to that operation.

From what I see here, I will stick to Duplicate Cleaner Free. It’s the best I have found up to now in the free category (it also has a Pro, paid-for version).

This app only detects duplicates based on the file hashes (the algorithm is configured in the settings). Only binary-exact duplicates will be shown.

The ‘fasthash’ is a way of speeding up the hash process by eliminating obviously different files from consideration quicker.
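As a rough illustration of the sampling idea quoted from the website, here is a minimal Python sketch. It is not DupeKill's code: the function name `fast_hash`, the reading of "16 kilobytes" as the size of each sample, the even spacing of the three middle samples, and the fallback for small files are all assumptions made for the example.

```python
import hashlib
import os

SAMPLE_SIZE = 16 * 1024  # assumption: 16 KB per sample


def fast_hash(path, sample_size=SAMPLE_SIZE):
    """Hash five samples of a file: the start, three interior points, and the end."""
    size = os.path.getsize(path)
    digest = hashlib.blake2b()
    with open(path, "rb") as handle:
        if size <= 5 * sample_size:
            # Small file: the samples would overlap, so hash the whole thing.
            digest.update(handle.read())
        else:
            last_start = size - sample_size
            # Sample offsets at 0%, 25%, 50%, 75% and 100% of the readable range
            # (the exact spacing DupeKill uses is not documented in the quote).
            for step in range(5):
                handle.seek(last_start * step // 4)
                digest.update(handle.read(sample_size))
    return digest.hexdigest()
```

Two files that differ only between the sample points produce the same value here, so a sampled hash can only rule files out. A full hash is still needed before anything is treated as a duplicate.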

The filename-based ‘guesses about which one you want to keep’ only changes how the default actions (keep or remove) are applied *after* a set of duplicates has been detected; it tries to auto-select the best name to keep. Filename and dates are never used to detect a duplicate, only the file contents.
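To make the "which name to keep" step concrete, here is a small hypothetical sketch in Python. It covers only the two patterns named in the website quote ("copy of" and a numeric suffix such as ".1.txt") and breaks ties by length. It makes no attempt to judge how descriptive a name is, and `COPY_MARKERS`, `copy_penalty` and `suggest_keeper` are invented names, not DupeKill's.

```python
import re

# Hypothetical markers of a copy-like name, taken from the two quoted examples.
COPY_MARKERS = (
    re.compile(r"copy of", re.IGNORECASE),
    re.compile(r"\.\d+\.[^.]+$"),  # e.g. "report.1.txt"
)


def copy_penalty(name):
    """Count how many copy-like markers appear in the file name."""
    return sum(1 for marker in COPY_MARKERS if marker.search(name))


def suggest_keeper(names):
    """Pick the name with the fewest markers; break ties with the shorter name."""
    return min(names, key=lambda name: (copy_penalty(name), len(name)))
```

For example, `suggest_keeper(["Copy of Screenshot.png", "Screenshot.png"])` returns `"Screenshot.png"`. The input is assumed to be a set of files already confirmed as identical by content, so the function only picks a default and never decides what counts as a duplicate.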

I just ran it on the main (C:) drive of my computer.

Scanning Directory: C:\*

Found 415,518 Files (231.451 GB). Finished Scanning, now checking for possible duplicates:

368,383 files flagged as potential duplicates based on identical sizes. FastHashing those files:

Processed 367,148 Files. Finished FastHashing, now checking for possible duplicates:

159,816 files flagged as potential duplicates based on identical FastHashes. Computing full hash of those files:

Processed 159,816 Files. Finished hashing, now checking for duplicates:

159,724 files flagged as duplicates based on identical size, FastHash, and BLAKE2B values.
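The funnel visible in this log (size first, then a quick hash, then a full hash) can be sketched in Python as follows. This is an illustration under assumptions, not the program's implementation: `quick_hash` simply hashes the first 16 KB as a stand-in for the sampled FastHash, and all function names are made up for the example.

```python
import hashlib
import os
from collections import defaultdict


def quick_hash(path, sample_size=16 * 1024):
    """Stand-in for the fast pass: hash only the first sample_size bytes."""
    with open(path, "rb") as handle:
        return hashlib.blake2b(handle.read(sample_size)).hexdigest()


def full_hash(path, chunk_size=1024 * 1024):
    """BLAKE2b over the whole file, read in chunks to bound memory use."""
    digest = hashlib.blake2b()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def refine(groups, key):
    """Split each candidate group by key and drop groups left with one file."""
    refined = []
    for group in groups:
        buckets = defaultdict(list)
        for path in group:
            buckets[key(path)].append(path)
        refined.extend(bucket for bucket in buckets.values() if len(bucket) > 1)
    return refined


def find_duplicates(paths):
    """Return lists of paths whose size, quick hash and full hash all match."""
    groups = [list(paths)]
    # Cheapest check first, so the expensive full hash runs on the fewest files.
    for key in (os.path.getsize, quick_hash, full_hash):
        groups = refine(groups, key)
    return groups
```

Each stage only looks at files that survived the previous one, which matches the shrinking counts in the log.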

It wants to remove 105,537 files – some 19GB.

Many files in Windows directories appear in the list of files targeted for deletion.

I’m thinking it’s far too dangerous to proceed and one should limit such things to “safe” targets such as duplicate music or documents, but in any case nothing to do with the operating system.

Correct, I would not run this on the Windows directory or other system directories unless they contain only user-generated data.

Be careful! The example above, which is fairly typical, found thousands of duplicates. Unless you’re manually transferring files from one disk to another, comparing folders or something similar and want to be sure nothing is missed, all dupes found should be reviewed. If it’s uncertain whether deletion is safe, keep the dupes.

Dupe finders work best when you tell them where and for what to look.

“it lacks some of the advanced scanning options like comparing hashes”

Well, it supports several hash algorithms: MD5, SHA1, SHA256, SHA256Cng, SHA384, SHA512 and BLAKE2B. That’s what I really like about it.

For those not familiar with all those optional algorithms, BLAKE2B should cover almost every duplicate check you run. Because the BLAKE family is designed to take advantage of modern processor optimizations, it is generally faster at file analysis than the older algorithms on anything but very old (10+ yr) computers.

SHA512 meanwhile is the slowest and definitely serious overkill for duplicate checks.
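Relative speeds depend on the CPU and the library, so it is worth measuring rather than assuming. The following Python sketch times the algorithms that the standard `hashlib` module offers. SHA256Cng is a Windows/.NET implementation and is left out, and `time_hashes` is a name chosen for this example. Results from Python will not necessarily match what DupeKill achieves.

```python
import hashlib
import time


def time_hashes(data, names=("md5", "sha1", "sha256", "sha384", "sha512", "blake2b")):
    """Return the seconds each hashlib algorithm takes to digest data once."""
    timings = {}
    for name in names:
        start = time.perf_counter()
        hashlib.new(name, data).digest()
        timings[name] = time.perf_counter() - start
    return timings


if __name__ == "__main__":
    payload = bytes(64 * 1024 * 1024)  # 64 MiB of zeros; real files may behave differently
    for name, seconds in sorted(time_hashes(payload).items(), key=lambda item: item[1]):
        print(f"{name:8s} {seconds:.3f} s")
```

A single run on one buffer is only a rough indication. Repeating the measurement and using data read from disk would give a fairer picture.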

You are right, corrected this!