GNU/Linux for beginners: How Audio Works

One of the things that I found pretty confusing about GNU/Linux during my transition from using Windows as my primary OS to using GNU/Linux was how audio worked.

In Windows, for the most part, you don’t really have to think about anything or know how to configure any specific utilities; audio just works. You might need to install a driver for a new headset or sound card, but that’s about as heavy as things get.

Audio in GNU/Linux has come a long way, and nowadays it offers much of the simplicity that users migrating from Windows are accustomed to, but there are still some nuances and terms that new users may not be familiar with.

This article is not meant to delve too deeply into things; it will likely be common knowledge for anyone with mild experience in the GNU/Linux world, but hopefully it will help clarify some things for the greenhorns.

Audio in Linux

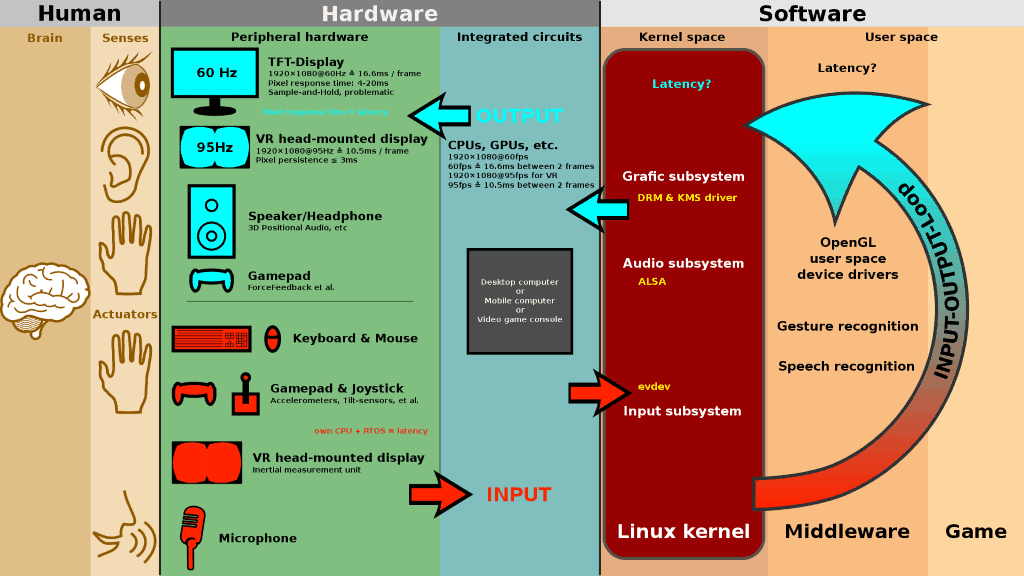

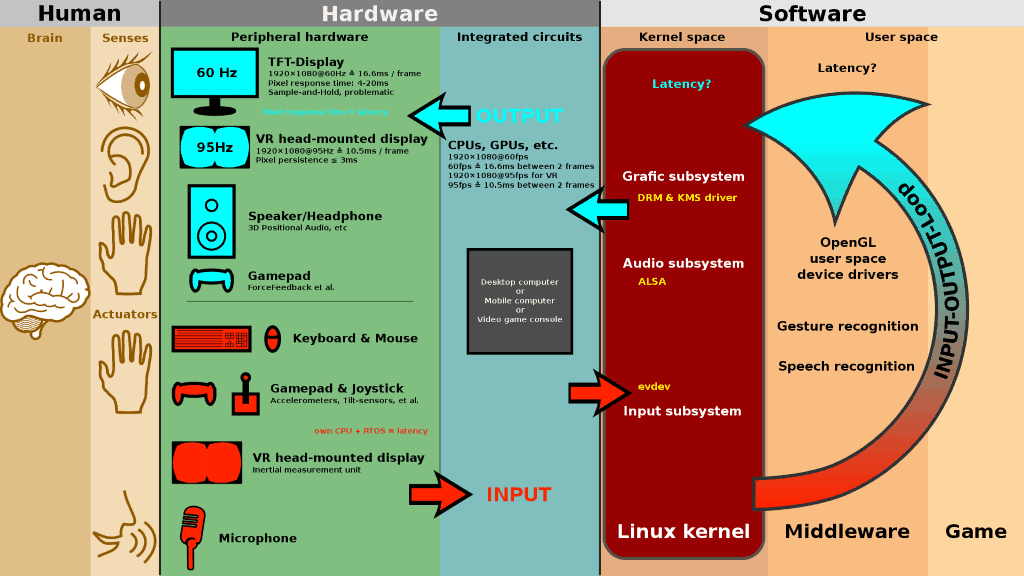

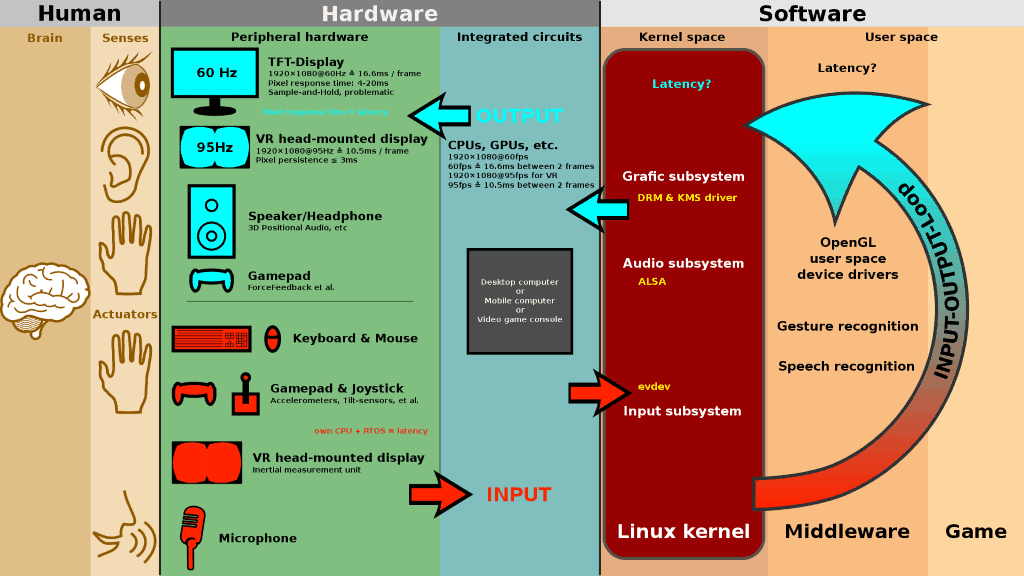

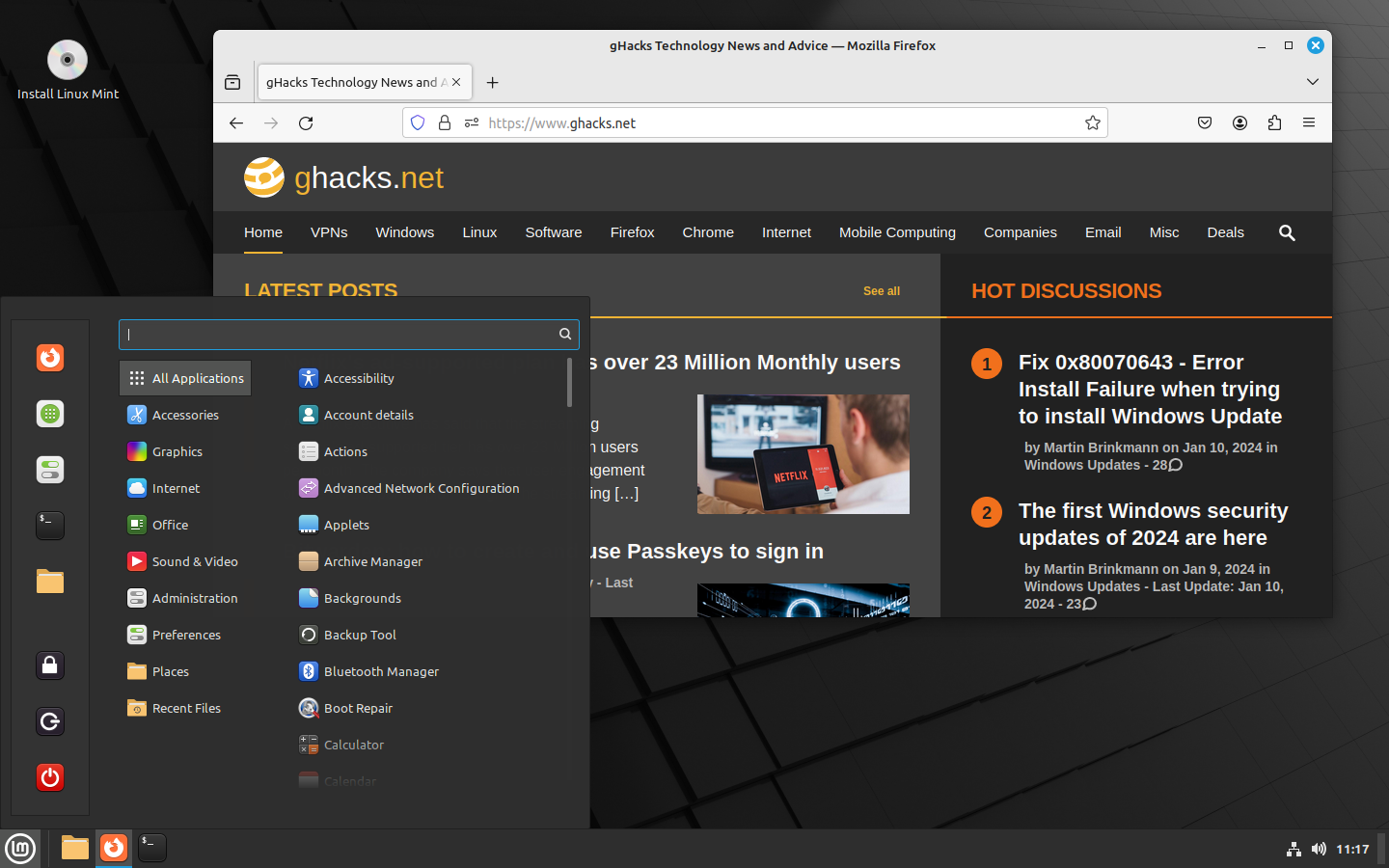

The image below, shows how sound works in GNU/Linux, which will be expanded upon:

ALSA

ALSA stands for “Advanced Linux Sound Architecture” and is the root of all sound in modern GNU/Linux distributions. In short, ALSA is the framework that sound drivers communicate through; you could even loosely describe it as a sound driver itself, sort of.

There was another somewhat similar system called OSS (Open Sound System) that some people still prefer, but it’s mostly been phased out and is rarely used anymore.

ALSA is nowadays the basis for all sound in a GNU/Linux system. The kernel (Linux itself) communicates with ALSA, which in turn communicates with an audio server such as PulseAudio, which then communicates with the applications on the system. (When you play a sound, the data actually travels the other way: from the application, through the sound server and ALSA, down to the hardware.) You can still have audio without a server like PulseAudio, but you lose a lot of functionality and customization, as well as other features we will cover shortly.
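To make the direction of travel concrete, here is a purely illustrative toy model of the layers a sound passes through. The layer labels are simplified descriptions, not real APIs, and the function name `signal_path` is hypothetical. For playback the samples start in the application and move down to the sound card; for recording the same layers are crossed in the opposite order.

```python
# Toy model of the Linux audio stack, ordered from the application
# down to the hardware. The labels are simplified and illustrative only.
LAYERS = [
    "application",
    "sound server (e.g. PulseAudio or JACK)",
    "ALSA (alsa-lib in user space)",
    "ALSA driver (in the Linux kernel)",
    "sound card",
]


def signal_path(direction: str) -> list[str]:
    """Return the layers a stream passes through, in order.

    Playback flows from the application down to the sound card;
    capture (recording) flows from the sound card up to the application.
    """
    if direction == "playback":
        return list(LAYERS)
    if direction == "capture":
        return list(reversed(LAYERS))
    raise ValueError(f"unknown direction: {direction!r}")


if __name__ == "__main__":
    print(" -> ".join(signal_path("playback")))
    print(" -> ".join(signal_path("capture")))
```

Thinking of it this way also suggests a debugging order when there is no sound: check each layer in turn, from the application down to the hardware.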

Sound Servers

PulseAudio

PulseAudio is included with practically every major GNU/Linux distribution. Ubuntu, openSUSE, Manjaro, Mageia and Linux Mint, for example, all use PulseAudio.

I don’t generally like referencing Wikipedia, but it has a great explanation of PulseAudio, in better words than I might have used:

“PulseAudio acts as a sound server, where a background process accepting sound input from one or more sources (processes, capture devices, etc) is created. The background process then redirects mentioned sound sources to one or more sinks (sound cards, remote network PulseAudio servers, or other processes).”

Essentially, PulseAudio takes the sound it receives from applications and directs it, through ALSA, to your speakers, headphones, etc.

Without PulseAudio, ALSA can typically send sound to only one place at a time. PulseAudio, on the other hand, allows sound to come from multiple sources at once and be sent out to multiple places at the same time.
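At its core, combining several programs’ audio into one output is software mixing: the streams are added together sample by sample and one combined stream goes to the device. Below is a minimal sketch, assuming signed 16-bit samples and equal-length streams; the function name `mix_streams` is hypothetical, and a real sound server also handles resampling, format conversion and timing, all of which is left out here.

```python
# Minimal software-mixing sketch: several 16-bit streams -> one stream.
# Assumes signed 16-bit samples (-32768..32767) and equal-length inputs.
INT16_MIN, INT16_MAX = -32768, 32767


def mix_streams(streams: list[list[int]]) -> list[int]:
    """Sum the streams sample by sample, clipping to the 16-bit range."""
    if not streams:
        return []
    length = len(streams[0])
    if any(len(s) != length for s in streams):
        raise ValueError("all streams must have the same length")
    mixed = []
    for samples in zip(*streams):
        total = sum(samples)
        # Clip instead of letting the value wrap around, which would sound far worse.
        mixed.append(max(INT16_MIN, min(INT16_MAX, total)))
    return mixed


browser = [1000, -2000, 30000]
music = [500, 500, 10000]
print(mix_streams([browser, music]))  # [1500, -1500, 32767]
```

The last sample shows why clipping matters: 30000 + 10000 exceeds what 16 bits can hold, so it is clamped to the maximum value.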

Another feature of PulseAudio is the ability to control the volume of separate applications independently. For example, you can turn UP YouTube in your browser and turn DOWN Spotify, without having to adjust the volume as a single entity.
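Per-application volume boils down to scaling each stream by its own factor before the streams are mixed. A minimal sketch follows. It assumes a cubic mapping from slider position to linear gain (PulseAudio’s software volume uses a cubic curve of this kind, but treat the exact formula here as an illustrative assumption), and the names `slider_to_gain` and `apply_volume` are hypothetical.

```python
# Per-stream volume sketch. ASSUMPTION: a slider position in [0.0, 1.0]
# maps to a linear gain via a cubic curve, which tracks perceived loudness
# better than a straight line would.


def slider_to_gain(position: float) -> float:
    """Map a 0.0..1.0 volume slider position to a linear amplitude gain."""
    if not 0.0 <= position <= 1.0:
        raise ValueError("slider position must be between 0.0 and 1.0")
    return position ** 3


def apply_volume(samples: list[int], position: float) -> list[int]:
    """Scale one stream's 16-bit samples by the gain for its own slider."""
    gain = slider_to_gain(position)
    return [round(s * gain) for s in samples]


# Turn YouTube up and Spotify down, independently of each other.
youtube = apply_volume([8000, -8000], 1.0)  # full volume, gain 1.0
spotify = apply_volume([8000, -8000], 0.5)  # half-way slider, gain 0.125
print(youtube, spotify)  # [8000, -8000] [1000, -1000]
```

Because each stream gets its own gain before mixing, changing one application’s slider never touches the others.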

Most desktop environments have their own utilities or tray tools for changing volumes and output devices through PulseAudio, but if you want to work with PulseAudio directly, and see exactly what I’m referring to, you can install an application called ‘pavucontrol’. It’s straightforward and easy to figure out, and the package is available in practically every distribution’s repositories.

PulseAudio has numerous other features, but we will move on; if you want more information on PulseAudio, you can get it here.

JACK

JACK stands for JACK Audio Connection Kit. JACK is another sound server similar to PulseAudio, but it is more commonly used among DJs and audio professionals. It’s quite a bit more technical; however, it supports things like lower latency between devices and is very useful for connecting multiple devices together (like hardware mixers, turntables and speakers, for professional use). Most people will never need JACK; PulseAudio works just fine unless you need JACK for something specific.
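Where does “lower latency” come from? The delay added by buffering is commonly estimated as frames per period × number of periods ÷ sample rate, which is the figure JACK front-ends such as QjackCtl typically report. Smaller buffers mean less delay, but a higher risk of dropouts (xruns) if the system cannot keep up. The quick calculator below uses example settings chosen purely for illustration; the function name `buffer_latency_ms` is hypothetical, and the estimate ignores any extra delay from the sound card’s converters and drivers.

```python
# Buffer-latency estimate: frames per period * periods / sample rate.
# This ignores additional delay from the sound card's converters and drivers.


def buffer_latency_ms(frames_per_period: int, periods: int, sample_rate: int) -> float:
    """Return the estimated buffering latency in milliseconds."""
    if frames_per_period <= 0 or periods <= 0 or sample_rate <= 0:
        raise ValueError("all parameters must be positive")
    return frames_per_period * periods / sample_rate * 1000.0


# Illustrative settings at 48 kHz: smaller periods mean less delay.
for frames in (1024, 256, 64):
    print(f"{frames:5d} frames x 2 periods @ 48 kHz: "
          f"{buffer_latency_ms(frames, 2, 48000):.2f} ms")
```

Running it prints about 42.67 ms, 10.67 ms and 2.67 ms respectively, which illustrates why audio professionals tune these settings while everyday desktop use rarely needs to.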

Final Thoughts

Audio on GNU/Linux ‘sounds’ more complicated than it really is (see what I did there), and hopefully this article will help things make a little more sense when you’re browsing the web and seeing names like ALSA or PulseAudio being thrown around!

Enjoy!

Thanks so much! This article really helped me understand the theory behind what’s actually happening under the hood. Great write-up.

(1) It would be VERY helpful if you could add PulseAudio to your graphic.

(2) The description “The Kernel (Linux itself) communicates with ALSA, which then turn communicates with an audio server such as PulseAudio, which then communicates with the applications on the system.” makes it sound like the sound activity originates in the kernel, but actually I would suspect it should be from the applications. In other words, the apps call PulseAudio, which calls ALSA, which makes use of kernel resources, which is the opposite direction to the quoted description.

This seemed more like what I am suggesting: “PulseAudio acts as a sound server, where a background process accepting sound input from one or more sources (processes, capture devices, etc) is created. The background process then redirects mentioned sound sources to one or more sinks (sound cards, remote network PulseAudio servers, or other processes).”

But then the next sentence “Essentially, PulseAudio directs the sound it receives from ALSA, to your speakers, headphones, etc.” again makes it seem like sound is coming from ALSA.

In fairness, I have never seen a good explanation of this, and I suspect that is why so many people find Linux sound maddeningly difficult to debug. Your description is better than most, but it still falls short. Without the right mental model of how these blocks interoperate, it is impossible to develop a plan for systematically debugging where the problem is that results in no sound.

Thanks for posting the article, and I hope this feedback is helpful if you want to do an update.

This is probably related: http://harmful.cat-v.org/software/operating-systems/linux/alsa

Since the two graphics in the link above haven’t been updated in some time, I’d like to ask: Does GNU/Linux still suffer from issues regarding dependency hell when it comes to audio?

Perhaps it depends to some extent on what distribution you are running and how the packaging is done. For me it is slightly harder to get things going on Debian stable, but there are plenty of people who do it. Perhaps it is a matter of enabling the right repositories. I have been using Arch Linux for the last 3 or so years, and pacman – AUR take care of the package environment just brilliantly. 0 problems encountered so far in managing dependencies.

As far as dependency loops go, most pro audio applications will work fine with ALSA (and perhaps its related libs, utilities and tools) and a JACK installation of some sort (perhaps ideally jack2-dbus). This is the setup I ran for quite a while when I was using OpenBox. Full-blown DEs (GNOME3, which I am using now, and KDE, for example) typically pull in PulseAudio as a dependency.

In a few words: if you have access to the latest versions of audio software through your repos, you can build very slim audio setups, like linux-rt + alsa stuff + jack + openbox + plethora-of-audio-software (my previous setup).

Otherwise, if different versions/builds are scattered among different repositories it might get trickier.

This whole thing sounds more complex, bulky and generally inferior to what Windows does. Or is it not?

More importantly, do all these layers affect audio quality? (obviously not talking about system sounds or YouTube audio, but higher quality sources)

No, it isn’t. Actually, Windows is very similar in many regards. In modern Windows systems there are various audio subsystems and APIs: WASAPI, ASIO and DirectSound. So, as you can see, the audio stack is fragmented, just as in Linux. Usually, normal system sound is interrupted when ASIO applications are working (most professional audio applications are ASIO applications, just as in Linux most professional audio applications are JACK applications). We could sort of draw an equivalence between ASIO and JACK (although they are very different beasts in many regards).

The biggest difference is that Linux users are actually exposed to the complexity: it isn’t hidden under the carpet and the user is in charge of taking care of the configuration.

You didn’t mention Mac OS, but it is worth commenting on too. Mac OS perhaps has the simplest audio stack, but (depending on the Mac OS version) it is possible to observe user-space daemons taking care of routing audio streams. This is reminiscent of the PulseAudio implementation. So, as far as desktop OSes go, Linux is not really very, very different, but its complexity is just made more explicit to the user.

These layers, if properly configured, do not affect audio quality. Papers were published (years ago) that demonstrated it (you can find links in the article I posted a few comments above). It would be nice to find updated results, but I would assume things have improved since then. There is actually little that can hurt audio quality: audio in a computer is pretty much a buffer of numbers. If the audio stack is not well configured, this buffer will lose samples (underruns/overruns). Other issues tend to be more related to hardware and hardware support.

The communist paradise ! lol

I have been using Linux for years (but not for everyday use), and I didn’t have the slightest idea of how all these things worked…

Hi There!

Nice introductory article! I think it wasn’t too accurate about ALSA (I mean, as far as I know; please correct me if I am wrong).

ALSA is part of the kernel. In fact, there is no need to install it: it comes with the kernel. It isn’t quite true, I think, that not using a sound server comes at the expense of functionality and customization. Latest ALSA releases actually allow much deeper configuration than any sound server, as well as software mixing: http://alsa.opensrc.org/Hardware_mixing,_software_mixing. In general, asoundrc allows very complex and deep customization, such as the creation of virtual devices or custom device behavior. Perhaps it is more accurate to say that configuration within ALSA is way more technical than with sound servers (although defaults will work well in most cases nowadays).

I wrote a similar article some time ago; you can find it here: https://thecrocoduckspond.wordpress.com/2016/11/19/the-linux-audio-anatomy/

Here’s an article that covers the same Linux audio issue in a little more detail; note that it is somewhat dated (2010), so take that into account:

http://www.tuxradar.com/content/how-it-works-linux-audio-explained

I wish that you had gone into some more depth with the article. There was a problem with a third-party audio file recently on my Ubuntu 16.04 OS and I was asked to remove it, which I did. I don’t know where it came from, possibly Vivaldi, which I also had problems with, and I also removed it. I still have audio, so I guess that the file wasn’t really necessary.

Hey Mike and Martin, thanks for the article! I am myself considering a move to GNU or BSD from OS X, since I can’t handle 10.9+ nonsense and the article certainly shed some light on a very important question. Especially for semi-audiophiles :)

Also, I would like to compliment Martin for being a good tech blogger at a time when most just re-post headlines, and in particular for supporting freedom of speech on a few occasions! Wish you all the best! Cheers!