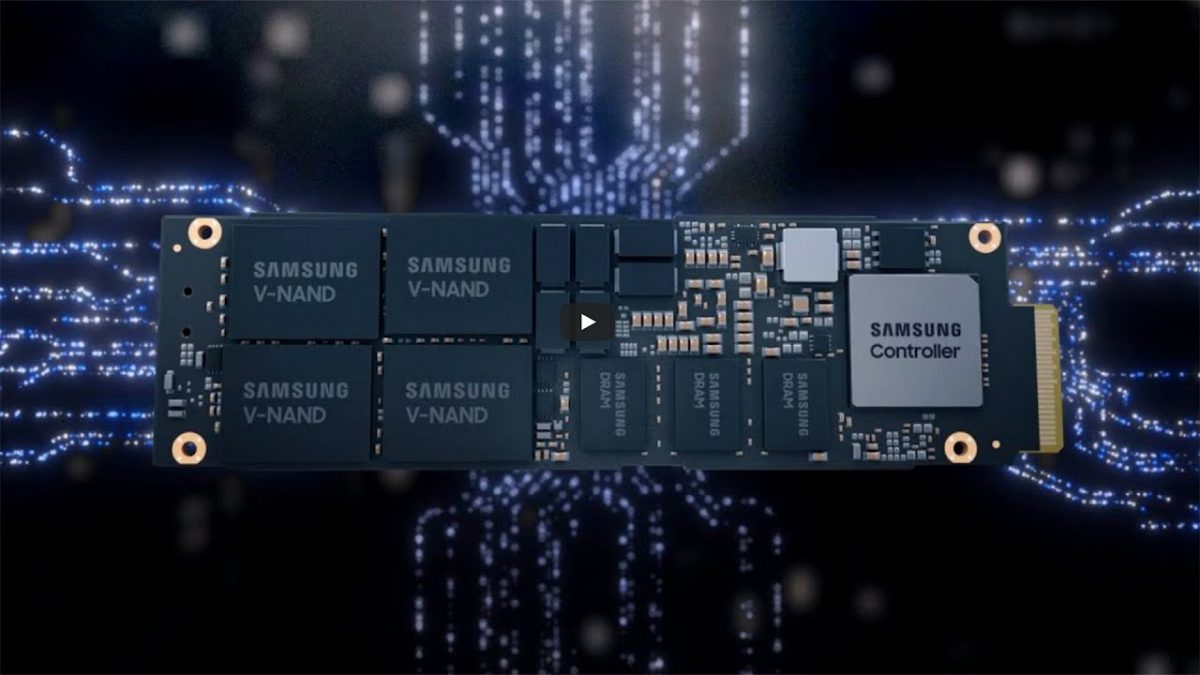

The Risks and Benefits of AI Assistants in the Workplace: Lessons from Samsung Semiconductor

Samsung Semiconductor's recent experience with ChatGPT offers some useful lessons about using AI assistants in the workplace.

Samsung Semiconductor recently allowed its fabrication engineers to use ChatGPT, an AI assistant. It soon discovered that engineers were inadvertently sharing confidential information, such as internal meeting notes and data on the performance and yield of its fabrication processes, while using the tool to quickly fix errors in their source code. As a result, Samsung Semiconductor plans to build a ChatGPT-like AI service for internal use. For the time being, it has limited each question submitted to the tool to 1024 bytes, according to a report by the Economist.
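To make the 1024-byte limit concrete, here is a minimal sketch of how such a check could work. It is not Samsung's implementation: the function name `check_prompt_size` and the choice of UTF-8 encoding are assumptions made for illustration, since the report gives only the byte figure.

```python
# Hypothetical sketch of a prompt-size guard; not Samsung's actual implementation.
MAX_PROMPT_BYTES = 1024  # the limit cited in the report


def check_prompt_size(prompt: str, limit: int = MAX_PROMPT_BYTES) -> bool:
    """Return True if the prompt fits within the byte limit.

    Assumes UTF-8 encoding, which the report does not specify.
    """
    return len(prompt.encode("utf-8")) <= limit


if __name__ == "__main__":
    # A limit in bytes is not a limit in characters: ASCII characters take
    # one byte each in UTF-8, while Korean Hangul syllables take three.
    print(check_prompt_size("a" * 1024))
    print(check_prompt_size("한" * 342))
```

Under this assumption, a question written in Korean would hit the cap after roughly a third as many characters as one written in plain ASCII. A cap of this kind limits how much can be pasted at once, but it does not check what is being pasted.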


Samsung Semiconductor has reported three instances in which the use of ChatGPT resulted in data leaks. While this number may not appear to be significant, all three incidents occurred within a span of 20 days, which is cause for concern.

One of the reported incidents involved a Samsung Semiconductor employee who used ChatGPT to correct errors in the source code of a proprietary program. This action, however, unintentionally revealed the code of a highly classified application to an external company's artificial intelligence.

The second incident was even more concerning. An employee entered confidential test patterns designed to identify defective chips into ChatGPT, and requested optimization of the sequences. These test sequences are strictly confidential, and optimizing them could potentially shorten the silicon test and verification process, leading to significant time and cost savings.

In the third incident, an employee used the Naver Clova application to convert a recorded meeting into a document, then submitted that document to ChatGPT to help create a presentation. All three actions put confidential information at significant risk, prompting Samsung to caution its employees about the potential dangers of using ChatGPT.

Samsung Electronics has told its executives and staff that any data entered into ChatGPT is transmitted to and stored on external servers, which makes it difficult for the company to retrieve and increases the risk of leakage. Although ChatGPT is a useful tool, content that users submit may be used as training data and could later be exposed to third parties, which is unacceptable in the highly competitive semiconductor industry.

Samsung is taking steps to prevent similar incidents in the future. If another leak occurs even after the emergency information protection measures are in place, access to ChatGPT may be blocked on the company network. Nonetheless, it is evident that generative AI and other AI-powered electronic design automation tools will play a crucial role in the future of chip manufacturing.

Asked about the data leaks, a Samsung Electronics spokesperson declined to confirm or deny any details, saying it was a sensitive internal matter.

Balancing the benefits and risks of AI assistants in the workplace

The integration of AI assistants in the workplace has the potential to bring about numerous benefits, including increased productivity and efficiency. AI tools such as ChatGPT can swiftly detect and rectify errors, freeing up valuable time for employees to focus on other tasks. Moreover, AI assistants can learn from previous interactions and adapt to better serve the needs of employees, resulting in even greater productivity gains.

However, the use of AI assistants in industries that deal with sensitive information raises significant concerns about data privacy and security. The inadvertent sharing of confidential information by Samsung Semiconductor's employees using ChatGPT highlights the potential risks of using AI tools in high-stakes environments. If not managed properly, the use of AI assistants can lead to data breaches and leaks, exposing confidential information to unauthorized third parties.

The risks associated with AI assistants are not limited to intentional data breaches or malicious attacks. Even well-intentioned employees can unintentionally share sensitive information by entering it into AI tools without fully understanding the consequences. In addition, because some AI assistants may use submitted content as training data, sensitive information can potentially be exposed to third parties, further increasing the risk.

Given these risks, it is crucial for companies to carefully evaluate the potential benefits and drawbacks of using AI assistants in the workplace, especially in industries that handle sensitive information. Companies must establish clear guidelines for the use of AI tools, including limitations on the types of data that can be entered and stored in these systems. Furthermore, companies must implement strong data privacy and security measures, such as encryption and access controls, to minimize the risk of data breaches and leaks.
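As one illustration of a limit on the types of data that can be entered, the sketch below screens a prompt for sensitivity markers before it leaves the company network. Everything in it is hypothetical: the `BLOCKED_MARKERS` list, the labels, and the function name `find_policy_violations` are assumptions for illustration, not a description of any real company's policy or product.

```python
import re

# Hypothetical markers a company might treat as a sign of restricted content.
BLOCKED_MARKERS = {
    "confidential": re.compile(r"\bconfidential\b", re.IGNORECASE),
    "internal-only": re.compile(r"\binternal use only\b", re.IGNORECASE),
    "proprietary": re.compile(r"\bproprietary\b", re.IGNORECASE),
}


def find_policy_violations(prompt: str) -> list[str]:
    """Return the labels of all blocked markers found in the prompt."""
    return [label for label, pattern in BLOCKED_MARKERS.items()
            if pattern.search(prompt)]


if __name__ == "__main__":
    violations = find_policy_violations("CONFIDENTIAL: notes from Monday's meeting")
    if violations:
        print("Blocked, matched markers:", ", ".join(violations))
    else:
        print("Prompt allowed")
```

Keyword screening of this kind is crude. It would not catch unlabeled source code or test sequences like those in the incidents described above, so it can only complement employee guidelines and access controls, not replace them.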

AI: A double-edged sword if there ever was one

The recent data leaks resulting from Samsung Semiconductor employees' use of ChatGPT have raised crucial concerns about the potential risks and benefits of incorporating AI assistants in the workplace. While AI tools like ChatGPT can significantly enhance productivity, they can also expose companies to data privacy and security risks.

Samsung Semiconductor has acknowledged these risks and is implementing measures to prevent future incidents. Nonetheless, this situation serves as a valuable reminder for other companies considering the adoption of AI assistants in their operations. To mitigate the risks associated with AI tools, companies should establish well-defined guidelines for their use and implement robust data privacy and security measures.

The case of Samsung Semiconductor emphasizes the importance of striking a balance between the potential benefits and risks of AI assistants in the workplace. As AI tools continue to proliferate, companies must take proactive measures to ensure that confidential information is shielded from unauthorized access and disclosure. Ultimately, the successful integration of AI assistants in the workplace necessitates thoughtful consideration of the potential risks and benefits, as well as the implementation of effective risk management strategies.
