Troubleshooting a maxed-out Linux hard drive

Yesterday I sat down at my main desktop PC to do some work and, out of nowhere, error message after error message popped up informing me that the hard drive was at 100% capacity, which meant the operating system had no room left to write to. This baffled me, as I was sure there should have been over 60 gigs of space available. My first inclination was to search for large files that might have grown out of hand, torrents especially.

After much searching I had found nothing. I even started at the / directory and still came up empty. So naturally I went right to the log files. Believe it or not, it was not the log files that showed me where the problem was. Of course I thought I should share this experience with ghacks in order to illustrate how troubleshooting a Linux machine can go.

After the futile manual search for files I went to the logs. The first log I went to (which is the first log I always turn to) was dmesg, which prints the message buffer from the kernel. To view this you just type dmesg in a terminal window. This was my first strike, as the kernel buffer knew nothing of my at-capacity drive.

My next step was to head on over to /var/log and take a peek at any of the log files that might offer up a clue as to why my hard drive was maxed out. My instincts always take me to /var/log/messages first. This particular log file keeps track of general system information regarding boot up and networking. Another strike.

At this point I realized I had to take a break and clear some space because the warnings wouldn't stop. I double-checked to make sure the reports were correct by issuing the command:

df -h

which confirmed that /dev/sda1 was at 100% usage. I managed to free up a couple of gigs of space by deleting some torrents. The errors went away and I could continue working.
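The same df check can be scripted so a nearly full disk gets noticed before the error messages start. What follows is only a minimal sketch: the function name check_disk_usage and the 90% default threshold are assumptions made for illustration, and mount points containing spaces are not handled.

```shell
# Print mount points whose usage is at or above a percentage threshold.
# check_disk_usage is a hypothetical name; the 90% default is an arbitrary assumption.
check_disk_usage() {
    threshold="${1:-90}"
    # -P forces the POSIX one-line-per-filesystem layout, which is safer to parse.
    df -P | awk -v limit="$threshold" '
        NR > 1 {                      # skip the header line
            use = $5
            sub(/%/, "", use)         # strip the percent sign from the capacity column
            if (use + 0 >= limit + 0)
                print $6 " is at " use "%"
        }'
}
```

Calling check_disk_usage 95 would list only the filesystems that are at least 95% full. Running it from cron is one option, though that is a design choice and not something I had set up at the time.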

My next step was to check the size of my proxy logs and DansGuardian logs. I had to move both systems over to my main desktop and had a feeling those logs needed to be rotated. I was right, but it didn't solve my problem. The proxy logs weren't huge (by any stretch of the imagination), but there were many of them. So I deleted the older logs and moved on.
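Weeding out old logs by hand gets tedious when there are many small files. Here is a hedged sketch of how find can list them by age. The function name list_old_logs, the '*.log*' name pattern and the 30-day default are all assumptions; the actual proxy and DansGuardian log names may differ.

```shell
# List log files in a directory that were last modified more than N days ago.
# list_old_logs is a hypothetical helper; the name pattern is an assumption.
list_old_logs() {
    log_dir="$1"
    days="${2:-30}"
    # -type f matches regular files only; -mtime +N means "older than N days".
    find "$log_dir" -type f -name '*.log*' -mtime +"$days" -print
}
```

It only prints the candidates, so the list can be reviewed before anything is removed. Once the list looks right, the same find expression with -delete in place of -print would remove the files.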

I was running out of log files to check and nothing had given me any idea what was going on.

Search and destroy

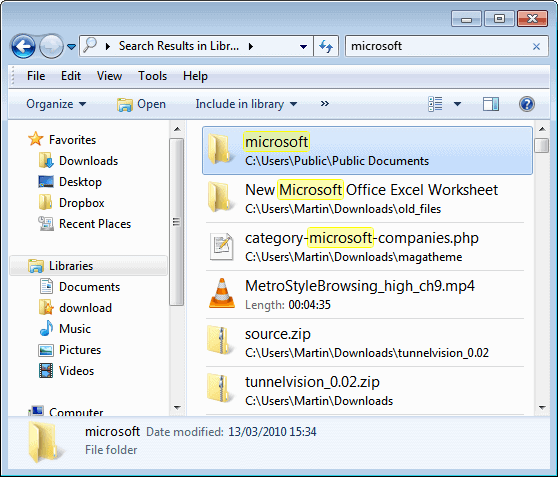

It was time to go back to the search method. But instead of using the manual method (how long would it take to weed through the ENTIRE Linux file system? I didn't want to know) I opted to employ a little help thanks to the find command. The find command allows you to add switches to your search to indicate file size. In my case I wanted to first see if there were any files larger than about 1 GB in size. To do this I issued the command:

find / -size +1000000k -print0 | xargs -0 ls -l

as either root or using sudo. What this command does is tell find to search for files larger than 1,000,000 KB (roughly 1 GB) and send their names to the standard output, separated by null characters (that's the -print0 switch). Those names are piped to xargs -0, which hands them to ls so that you can see the detailed list (using "-l" of ls). Because I was starting at the root directory, I knew this would take some time.

It did. But after some time I discovered five files that were each 12 gigs in size in /var/cache/. These files were from a backup program I was working with and forgot to disable. So once a week my entire /home directory was being backed up. I deleted the files (recovering sixty gigs of space) and disabled the backup program. Problem solved.
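For future hunts like this one, the find command above can be wrapped up so that it stays on one filesystem and sorts the results by size. This is only a sketch and it assumes GNU find (for -printf and the G size suffix). The name find_big_files is made up for illustration.

```shell
# List regular files larger than a size threshold, biggest first.
# find_big_files is a hypothetical helper name, not a standard tool.
find_big_files() {
    dir="${1:-/}"          # where to start searching
    min_size="${2:-1G}"    # threshold in find's -size syntax (GNU find accepts k, M, G)

    # -xdev keeps find on the starting filesystem, so /proc and other mounts are skipped.
    # -type f restricts matches to regular files.
    # -printf prints "<size in bytes><TAB><path>" for each match (GNU find only).
    # Permission errors are discarded; run as root or with sudo to see everything.
    find "$dir" -xdev -type f -size +"$min_size" -printf '%s\t%p\n' 2>/dev/null |
        sort -rn
}
```

Something like find_big_files /var 500M would narrow the search to /var and lower the threshold. Sorting numerically on the byte count puts the worst offenders at the top, which would have made those backup files hard to miss.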

Final thoughts

There are times when even the best logging system available will not tell you what you need to know. At those times you have to employ your best sleuthing techniques. Fortunately the Linux operating system encourages these types of administration tricks.

what we need is a script (perl or bash) that will go through the files and find all the 'copying', notes, authors, todo, news, and other similarly titled duplicated files in linux, collect them and delete them automatically

then we can pick up an emergency couple of megabytes as one step in a cleanup system

Very useful troubleshooting case history. As a noob, I’ll add it to my slowly growing knowledge base. Thanks

There are major bugs associated with lack of space in Linux. These issues apply in Windows as well but are worse in Linux in my experience. By default most distros reserve 5% of the / partition for the user root for “file-system recovery” which in my experience has caused the problem in the first place. Additionally settings in the home directory (compiz, nautilus, gnome, etc.) will be wiped. I’ve even had modules removed, forcing reinstallation on less “from scratch”-oriented distros. Yeah I have run into this problem A LOT because I multiboot and have a 40gb 1.8″ harddrive in my laptop. My recent 320gb modular second hdd project should help in the future but this is a serious issue that has been around forever and seems to be ignored by the devs.

And yeah

$ du -sh

and

$ df -h

are useful for monitoring disk usage.

Graphically gdmap (a clone of SequoiaView) works wonders. Baobab on the other hand is crap.

So when some non-root runaway process eats up your hdd before you can kill it… your root user can clean up the mess and get the system back up and running. Otherwise you would have to boot into a liveCD to clean it up, and that’s no fun! + what if the damage is done on your modular hdd, and your optical drive is unavailable ! q:

right. du -h would be the way to go when you search what’s taking all the space.

du -h works well, also
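As a rough illustration of the du approach mentioned in these comments, here is a sketch that ranks the immediate subdirectories of a directory by size. It assumes GNU du (for --max-depth), and biggest_dirs is a made-up name.

```shell
# Show the largest immediate subdirectories of a directory, biggest first.
# biggest_dirs is a hypothetical helper name.
biggest_dirs() {
    dir="${1:-.}"
    count="${2:-10}"
    # -x stays on one filesystem; -k reports sizes in 1 KiB blocks so sort -n works.
    # --max-depth=1 (GNU du) prints one total per immediate subdirectory,
    # plus a grand total for the starting directory itself.
    du -xk --max-depth=1 "$dir" 2>/dev/null | sort -rn | head -n "$count"
}
```

Running biggest_dirs /var 5 and then repeating it on whichever subdirectory tops the list narrows things down one level at a time, much like navigating in gt5.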

gt5 is an outstanding tool. if you could specify specific file sizes it would have been the perfect (and much easier) solution for this problem.

(at least I don’t believe you specify file size. if i am wrong please correct me.)

I use gt5 (http://gt5.sourceforge.net/) to determine storage usage on my hard drive from the console.

It’s great. Run it in any directory, and it’ll give you a breakdown of the storage usage of subdirectories. You can even navigate down the directory structure to find the exact folder.

It’s in the Ubuntu repositories, just “sudo apt-get install gt5”.