Scientists Use AI to Reconstruct Images from Brain Activity

The idea of an artificial intelligence that can interpret your imagination and translate mental imagery into real-world depictions may sound like something out of a sci-fi novel; however, recent research suggests the concept is closer to reality than it might seem.


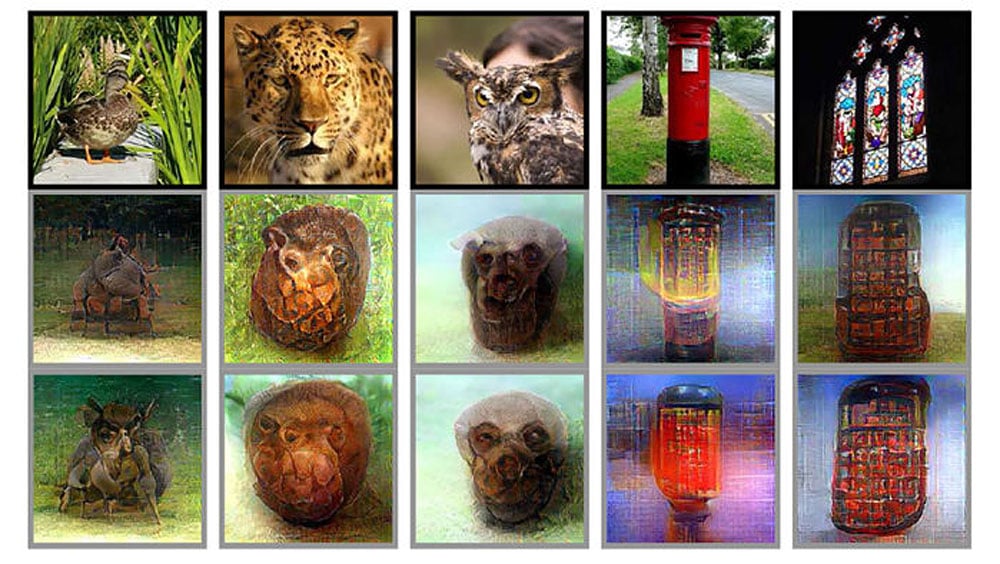

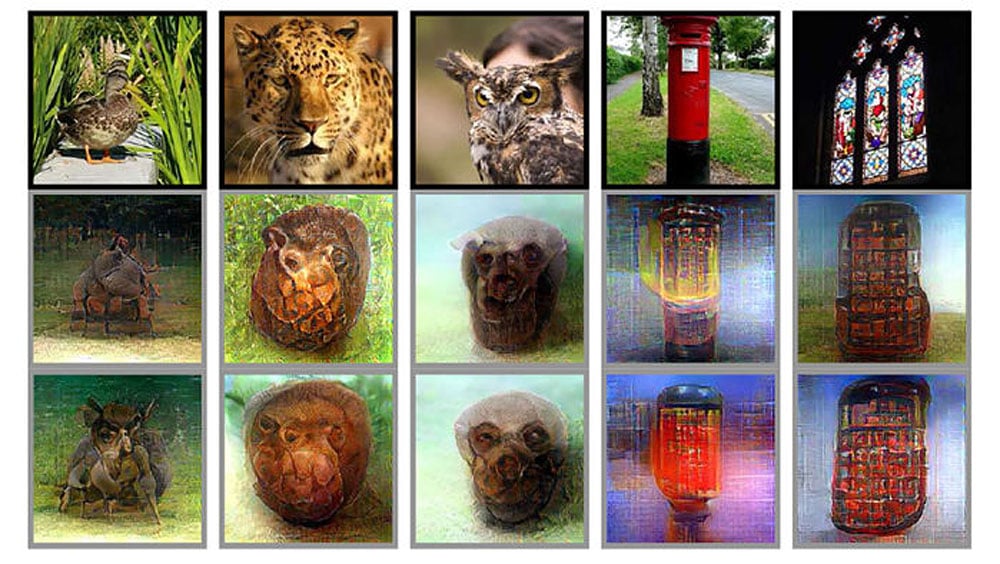

According to a paper published in December, researchers were able to reconstruct remarkably precise and detailed images from brain activity using Stable Diffusion, the widely used image generation model. Unlike earlier efforts, the authors noted, their approach required no specialized training or fine-tuning of the AI models to produce these images.

According to the researchers, who are from the Graduate School of Frontier Biosciences at Osaka University, their method first predicted a latent representation of the image (a compressed encoding of the image's data) from functional magnetic resonance imaging (fMRI) signals. The researchers then added noise to this latent representation through the diffusion process. Finally, they decoded text representations from fMRI signals in the higher visual cortex and used them as input to generate the final reconstructed image.
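To make the first two steps more concrete, here is a minimal, hypothetical sketch. It assumes, as is common in fMRI decoding, that the mapping from voxel responses to a flattened latent vector is a ridge (L2-regularized linear) regression, and it uses the standard forward-diffusion formula z_t = sqrt(alpha_bar) * z_0 + sqrt(1 - alpha_bar) * eps to add noise. The function names (`fit_ridge`, `decode_latent`, `forward_noise`), the toy dimensions, and the synthetic data are illustrative assumptions, not the Osaka authors' code. The final step, denoising with Stable Diffusion conditioned on the decoded text representation, needs the real model and is only indicated in a comment.

```python
import math
import random

# Hypothetical toy sketch of steps 1 and 2; not the authors' implementation.

def transpose(a):
    """Transpose a list-of-lists matrix."""
    return [list(row) for row in zip(*a)]

def matmul(a, b):
    """Multiply matrices a (n x d) and b (d x k)."""
    bt = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in bt] for row in a]

def solve(a, b):
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [list(a[i]) + list(b[i]) for i in range(n)]  # augmented matrix [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        p = m[col][col]
        m[col] = [v / p for v in m[col]]
        for r in range(n):
            if r != col:
                f = m[r][col]
                m[r] = [vr - f * vc for vr, vc in zip(m[r], m[col])]
    return [row[n:] for row in m]

def fit_ridge(x, z, lam):
    """Step 1 (decoder fit): W = (X^T X + lam * I)^-1 X^T Z maps voxels to latent values."""
    xt = transpose(x)
    xtx = matmul(xt, x)
    for i in range(len(xtx)):
        xtx[i][i] += lam  # L2 penalty stabilizes the fit when samples are scarce
    return solve(xtx, matmul(xt, z))

def decode_latent(scan, w):
    """Predict a latent vector from a single fMRI scan (list of voxel values)."""
    return matmul([scan], w)[0]

def forward_noise(z0, alpha_bar, rng):
    """Step 2: z_t = sqrt(alpha_bar) * z0 + sqrt(1 - alpha_bar) * eps, with eps ~ N(0, 1)."""
    return [math.sqrt(alpha_bar) * v + math.sqrt(1.0 - alpha_bar) * rng.gauss(0.0, 1.0)
            for v in z0]

if __name__ == "__main__":
    rng = random.Random(0)
    # Synthetic data: 50 "scans" of 3 voxels, each paired with a 2-D "latent".
    w_true = [[0.5, -1.0], [2.0, 0.0], [-0.3, 0.7]]
    scans = [[rng.gauss(0.0, 1.0) for _ in range(3)] for _ in range(50)]
    latents = matmul(scans, w_true)
    w = fit_ridge(scans, latents, lam=1e-3)
    z_hat = decode_latent([0.2, -0.1, 0.4], w)
    z_t = forward_noise(z_hat, alpha_bar=0.5, rng=rng)
    # Step 3 (not shown): denoise z_t with Stable Diffusion, conditioned on a
    # text representation decoded from higher visual cortex.
    print("decoded latent:", z_hat)
    print("noised latent:", z_t)
```

The appeal of this kind of design is that only the small linear decoder is fitted to brain data; the large generative model stays frozen, which matters when neuroscience datasets contain relatively few samples.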

According to the researchers, the few earlier studies that achieved high-resolution image reconstructions did so only after training or fine-tuning generative models. That approach is difficult because these models are complex to train, a problem made worse by the small number of samples typically available in neuroscience.

To the best of the researchers' knowledge, no earlier study had used diffusion models for visual reconstruction. They also presented the work as a window into the internal workings of diffusion models, describing it as the first to offer a quantitative, biological interpretation of such a model. For instance, one of their diagrams relates noise levels in the diffusion process to stimulus representations in the brain, with higher-level stimuli associated with higher noise levels and higher-resolution images. Another diagram shows how different networks in the brain are involved as the model denoises and reconstructs images.

The researchers noted that their findings suggest image information is compressed in the bottleneck layer of the U-Net (the denoising network inside Stable Diffusion) during the early stages of the reverse diffusion process. As denoising proceeds, a functional separation among U-Net layers emerges in the visual cortex: the first layer tends to represent fine-scale details in early visual areas, while the bottleneck layer corresponds to higher-order information in more ventral, semantic areas.
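Layer-to-region comparisons like this are typically made with encoding models: features from each network layer are used to predict voxel responses, and prediction accuracy (commonly the Pearson correlation between predicted and measured responses on held-out images) is compared across brain regions. The sketch below shows only that final comparison step. It assumes the per-layer predictions already come from fitted encoding models; the region labels, layer names, and numbers are hypothetical toy values, not data from the study.

```python
import math

def pearson(a, b):
    """Pearson correlation between two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)

def best_layer_per_region(measured, predicted):
    """
    measured:  {region: [measured response per test image]}
    predicted: {layer: {region: [encoding-model prediction per test image]}}
    Returns {region: (best_layer, r)}, where r is the highest Pearson
    correlation between any layer's prediction and the measured response.
    """
    result = {}
    for region, actual in measured.items():
        scores = {layer: pearson(preds[region], actual)
                  for layer, preds in predicted.items()}
        best = max(scores, key=scores.get)
        result[region] = (best, scores[best])
    return result

if __name__ == "__main__":
    # Hypothetical toy responses for four held-out images.
    measured = {
        "early_visual": [1.0, 2.0, 3.0, 4.0],
        "ventral_semantic": [4.0, 1.0, 3.0, 2.0],
    }
    predicted = {
        "first_layer": {"early_visual": [1.1, 2.0, 2.9, 4.2],
                        "ventral_semantic": [1.0, 2.0, 3.0, 4.0]},
        "bottleneck": {"early_visual": [4.0, 3.0, 2.0, 1.0],
                       "ventral_semantic": [3.9, 1.2, 2.8, 2.1]},
    }
    for region, (layer, r) in best_layer_per_region(measured, predicted).items():
        print(f"{region}: best predicted by {layer} (r = {r:.2f})")
```

In this toy setup the first layer best predicts the early visual region and the bottleneck best predicts the ventral region, mirroring the pattern the researchers describe; with real data, each score would come from held-out images rather than hand-picked numbers.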

We have previously seen other instances of brainwaves and brain activity being used to produce images. For example, in 2014, Shanghai-based artist Jody Xiong used EEG biosensors to connect sixteen individuals with disabilities to balloons of paint, which they burst using their thoughts to create their own paintings. In another EEG-based artwork, artist Lia Chavez created an installation that generated sound and light works from the brain's electrical impulses: viewers wore EEG headsets, and the transmitted signals were translated into color and sound via an A/V system.

As generative AI has advanced, more and more researchers have explored how AI models can interact with the human brain. In a January 2022 study, researchers at Radboud University in the Netherlands used a generative adversarial network (GAN), an earlier type of image generator than diffusion models such as Stable Diffusion, together with fMRI data from 1,050 unique faces to reconstruct faces from brain imaging results. The researchers reported that their approach achieved unmatched stimulus reconstruction. The Osaka study, published in December, shows that current diffusion models can now achieve high-resolution visual reconstruction.