Why I read only user reviews with average ratings

Reviews can be very helpful before you make a purchase on the Internet or even locally. They may help you understand the product better, or prevent you from making a decision that you might regret at a later point in time.

This goes for reviews by critics but even more so by users. Some sites, most shopping sites in fact, publish only user reviews while others, Metacritic for example, list critic and user reviews.

Most user reviews come with ratings. The rating scheme differs from site to site: some use thumbs up or down, others a 5, 10 or 100 point scale, and some sites even rate the users who left a review.

The aggregate score of an item is important, especially on shopping sites but on other sites as well. Customers use the ratings to pick items, and companies try to get positive ratings and reviews as it helps them improve the visibility and click-through rate of their products on those sites.

User reviews are broken

The user review system on most sites is broken. If you check the reviews of an item on Amazon or any other site that lets users rate items, you will likely notice the following: the majority of users rate an item either very low or very high.

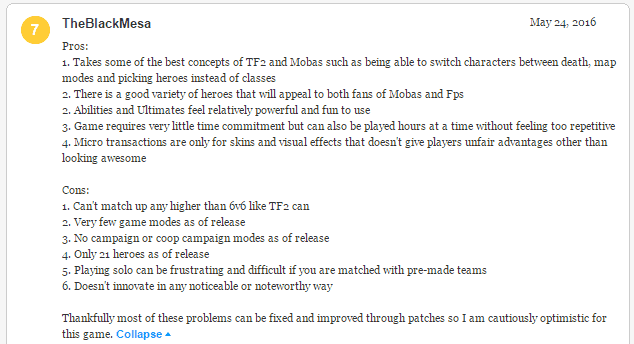

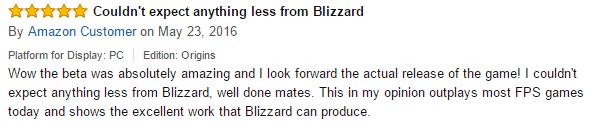

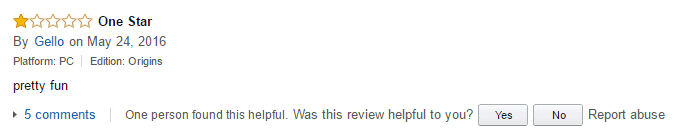

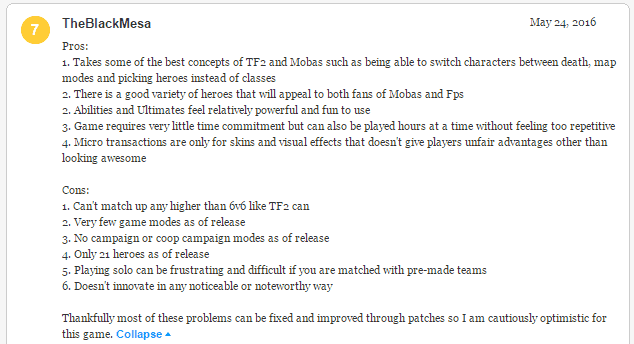

Take Blizzard's new game Overwatch for instance. If you check Metacritic user reviews, you will notice that the majority either awarded the game a 9 or 10 score, or a 0 or 1 score (with more high-end ratings than low-end ones).

While there is nothing wrong with giving a game such a rating, the reviewers fail more often than not to explain why the product deserved the rating.

Giving a game a 0 out of 10 rating because it is too expensive or lacks content, or handing out a 10 out of 10 rating because you have bought the game and need to justify purchasing it, is not helpful at all.

I'm not saying that there are no good reviews among the high or low raters, but more often than not, you get ratings that are not backed up by the review itself.

Average ratings

That's why I started to look at reviews with average ratings almost exclusively. Unlike "the item is the best ever" or "this item is the worst ever" reviews, they are usually balanced, which means that the review mentions both positive and negative aspects.

If you consider buying an item, it is average reviews that will help you the most when it comes to making an educated decision.

I'm by no means saying that all reviews that hand out godlike or awful ratings are not worth reading, but more often than not, they either provide no value at all, or seem hell-bent on justifying the reviewer's own agenda.

The same holds true for average reviews. You may find bad reviews among them too but the percentage seems a lot lower.

Also, and this problem is found most often on shopping sites, concentrating on average reviews helps sort out paid reviews that give products perfect ratings.

Now You: Do you read user reviews when you shop online?

I remember one Amazon 5-star review (which unfortunately I can’t find) that read something like “Haven’t opened the box yet but it’s the coolest thing ever!”

With Amazon, I often find it handy to check the review record of people who award low marks, to see if they’re serial complainers – quite a few are.

In a way such a naive statement is touching, like a kid on Christmas morning. Point is neither Amazon nor anyone is Santa :) Obviously the reviewer wasn’t into fraudulent tactics, yet it is revealing of some users’ approach to reviewing a product: total subjectivity, emotion, confidence. Not really more unfounded than a Firefox user rating an add-on 1 star over at AMO because (and that was his review) he considered the add-on’s toolbar button/icon disgraceful…

C’est la vie (… so you never can tell) :)

I only read the 2-star, 3-star and 4-star reviews. I generally find that people put a lot more thought into their assessment of a product if they are saying it has some good points or is lacking one or two features, rather than just giving it one star or five stars.

I get to wonder if the rating feature itself is not, in some way and sometimes, an obstacle to argumentation. I can perfectly well imagine users sharing their appreciation and criticism, good and bad, of a product without rating it. That would set aside the approximation and/or the irrational feelings expressed by the ratings, and would oblige readers to read the comment rather than skim the ratings. Of course no averages would be available, but after all, what is the sense of an average?

Just a comment.. I was on holiday last week & used the wife’s tablet to access ghacks. I was bombarded by commercials, sound, video etc. It was a painful experience. On my computer because of script blocking, adblocking etc the site is amazing. A very good & interesting read however on the tablet I would never have gone back to the site.

The only site that has consistently extremely high reviews and deserves them is Ghacks.

I’m sure there’s been one or two “one star” ratings for Martin’s site but all the ones I’ve seen have been “five star.” And rightly so.

Keep up the excellent work, Martin. Your site is one of a kind and a very good one at that.

I always look at the worst ratings because it helps give me an idea of the types of things I should expect to fail on the product.

Amazon reviews now contain far too many of the “I received this product for free in exchange for my honest review” disclaimers, typically inflating the average review rating for the product. I now make it a point to boycott any product that contains these reviews. These practices have all but ruined the power of the Amazon review for consumers attempting to make an intelligent buying decision, imo.

I could not agree more regarding reviewers who were “paid” with free or discounted products. I have seen listings where more than 90 percent of positive ratings were awarded by folks who were given the product in exchange for their “honest” review. This practice creates a conflict of interest, or at the very least a positive bias, and renders the “user rating” system almost useless. I make a point of marking such reviews as “Not” helpful, and I also boycott the product.

You are describing the classic statistics problem of outliers: values that are unusually high or low and do not reflect typical experiences. Most websites show you an average score for a product to try to simplify the information in the reviews. But averages are usually a terrible metric on which to base your opinion, because averages are very vulnerable to outliers.

Some websites go a step further and show a bar graph summarizing how many reviews were in each category (e.g. excellent, very good, average, poor, terrible, etc.). This is what you should be looking at, because it gives a reliable overview without being overly influenced by outliers. For example, if you are comparing two products with these graphical summaries:

Product 1:

**********

*****

**

*

Product 2:

***

******

****

*

It should be obvious that Product 1 is better than Product 2 (assuming the top of the graph shows the better reviews).
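To make the comparison concrete, here is a minimal Python sketch that turns the two text graphs above into numbers. The star counts are copied from the graphs; the mapping of rows to scores 4, 3, 2, 1 (top row best) is an assumption, since the graphs carry no labels, and the helper names are made up for illustration.

```python
# Star counts copied from the two text graphs, top row first.
product_1 = [10, 5, 2, 1]
product_2 = [3, 6, 4, 1]

# Assumption: rows correspond to scores 4 (best) down to 1 (worst).
SCORES = [4, 3, 2, 1]


def mean_score(counts):
    """Single-number average, the figure most sites show."""
    total = sum(counts)
    return sum(score * n for score, n in zip(SCORES, counts)) / total


def shares(counts):
    """Fraction of reviews in each category, i.e. the bar graph as numbers."""
    total = sum(counts)
    return [n / total for n in counts]


for name, counts in (("Product 1", product_1), ("Product 2", product_2)):
    pct = ", ".join(f"{share:.0%}" for share in shares(counts))
    print(f"{name}: mean {mean_score(counts):.2f}, shares top to bottom: {pct}")
```

The per-category shares show where the reviews actually cluster, which the single mean hides.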

Product1 is better? Perhaps Product2’s (installation, compatibility) is just widely misunderstood by folks who have purchased… and then posted a review due to their self-inflicted frustration. Regardless of the product involved, you can observe that a considerable portion of 5 star (5 of 5) reviews reflect the purchaser justifying their purchase decision.

Haha, “Liars… and outliers”. The star ratings are subjective, and are usually emotion-driven. I doubt that “the classic statistics problem of outliers” is relevant in such a context. People who experience a “meh, mediocre” reaction to a product are probably far less motivated to post a review. In a context of quizzes / surveys / polls / reviews, wouldn’t those “outliers” in fact merit a higher weighting?

I disagree. If only the extremely happy and extremely unhappy consumers are motivated to post reviews, this, too, will be obvious in the graphical summary. You will get a bimodal distribution:

******

**

**

******

If you see that, then fine, ignore the reviews. But you won’t see this very often if there are enough reviewers. In fact, take a look at a site like TripAdvisor (where there are often hundreds of reviews for a hotel) and see how many bimodal distributions you will find… Not many.
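As a rough illustration of spotting such a shape, the sketch below flags a distribution as bimodal when both end categories are larger than every category in between. This rule is a hypothetical heuristic for illustration only, not an established statistical test, and the 4-to-1 score mapping is again an assumption.

```python
def looks_bimodal(counts):
    """Hypothetical heuristic: both end categories beat every middle one."""
    if len(counts) < 3:
        return False
    middle_peak = max(counts[1:-1])
    return counts[0] > middle_peak and counts[-1] > middle_peak


def mean_score(counts):
    """Average score, assuming rows map to scores len(counts) down to 1."""
    scores = range(len(counts), 0, -1)
    return sum(s * n for s, n in zip(scores, counts)) / sum(counts)


polarized = [6, 2, 2, 6]    # the love-it-or-hate-it graph above
product_1 = [10, 5, 2, 1]   # Product 1 from the earlier comparison

print(looks_bimodal(polarized), mean_score(polarized))
print(looks_bimodal(product_1), mean_score(product_1))
```

For the polarized graph the mean lands in the middle of the scale, where almost nobody actually voted, which is why the shape of the graph says more than the average.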

Among the reviews posted on Newegg and Amazon, I’ve been amazed (appalled) to find that regardless of what component I’m shopping for… it’s difficult to find a product which has achieved a 5-star rating across 80% of reviews. This trend has held across several years.

No, I don’t outright discount (filter) the reviews reflecting an extremely high or low score; I find that the devil’s in the details. It’s helpful to read extremely low reviews to find commonalities/patterns in “what’s wrong with this product”. Patterns like:

certain graphics cards, across brands: intolerable coil whine

Rosewill and ASUS branded products: poor packaging and/or high DOA rate and/or miserable warranty/RMA service

Sadly, many reviews reflect the travails of uninformed/witless purchasers. For instance, lotta folks purchasing an SSD can’t grasp why the drive (installed to their aging PC, having a SATA II drive controller) fails to make their system “go faster”. Similarly, reviews across various brands/speeds of RAM sticks indicate widespread and unfair “blaming” due to purchaser cluelessness.

Yes, especially at Amazon, one encounters far too many reviews placed by shills/collaborators. An acquaintance of mine is a prolific Amazon reviewer. He has repeatedly bragged about receiving offers for “free stuff” in response to unfavorable (and unfounded) reviews he has submitted. Didn’t buy the product reviewed, hasn’t bought anything at all from the particular SELLER affected by a given review…. tsk, tsk, Amazon really needs to enforce more stringent guidelines/policies to counter such nonsense.

Martin, the bottom line for me is that I’m more inclined to dismiss (scroll past) too-terse or too succinct “reviews” than discount ’em based on extremism reflected in their star ratings.

p.s.

I’ve noticed that reviewer cluelessness (or lack of detailed explanation) pervades reviews of open-source “products” as well as purchased “products”. Most of the reviews across the range of Ubuntu Software Centre and the Mint Software Center amount to useless entries like “it don’t work” or “i like cuz its pretty”.

Reviews are one reason I prefer shopping online. I always look over the I-hate-this-product comments first to see if they are consistent enough that there might be a real problem with the item. Then I read the mid-range reviews because they usually include both likes and dislikes. If there is a specific feature that I consider either an absolute no-no (such as the ribbon GUI in software) or an absolute must, I use the search function of my browser to find related comments.

I agree that Amazon does a pretty good job presenting reviews, and I love the question/answer capability. I’ve had plenty of my own questions answered, so I always try to return the favor when asked about a product. However, you have to be careful when looking for electronics on Amazon, as the reviews presented are not always related to the product. Recently I was looking for a hard drive and after reading several comments, I realized they were not referring to the drive I was interested in. Same company, same size, but not the same product ID.

Many companies these days are realizing the value of good reviews, and how easy it is to manipulate them. 3rd party shill review companies will “review” your product for a fee. These are most typically used by small companies with little to no name recognition, often out of Asia.

At least for Amazon, there’s a good site to combat these shills… http://www.fakespot.com . Put the product link in, and they’ll use a pretty solid algorithm to evaluate the reviews for authenticity.

If I am interested in a product I want to have it in principle, so in principle my opinion about it is positive. However, I do not want to delude myself with my own positive opinion at the outset, and I do not want to reinforce my opinion by the positive reviews of others. So I read the negative reviews & try to discern a pattern that may indicate a true shortcoming of the product.

If the negative reviews make sense, then I am likely to discard the product.

If they are all over the place I know it is probably because those users have problems that are specific to them (slow PC, wrong installation, not understanding the product properly, ….). In that case I read the positive ones to see if there is a trend or if the hype does not make sense.

If there is a lot of hype in the positive reviews I am likely to discard the product. But if there is a trend in the positive reviews, I am more inclined to get the product.

I do much the same, eliminating the over-the-top reviews on either end of the spectrum.

Legitimate reviews that are entirely negative are often prompted by one person’s bad experience with one facet of the product. If a product is actually broken in some respect, or doesn’t deliver what’s promised, then more than one reviewer is going to say so.

In any case, all the reviews are really anonymous, signed or not, because we have no idea who those people are or why they wrote the review.

I do the same: average reviews, or even some with just 1 star, to quickly identify whether there are serious problems. Usually you can easily tell whether those 1-star comments are trolling, the result of ignorance, specific bugs that won’t hurt me, or serious defects.

Nevertheless, the average scores usually come with the best and most useful comments. These guys know what they’re doing. They don’t vote impulsively.

Oddly enough, some people give a rave write-up, then award one star.

That’s funny: I do the exact opposite: I look at the rave reviews to see the benefits of a product, and I look at the worst reviews to see why people hated it.

What counts is the user’s argument, the explanation he gives for his score and, indeed, these appear more often with non-extreme ratings.

Also, very high and very low ratings are more likely to be fake, whether from a “collaborator” or from a competitor’s “collaborator”. Smart “collaborators”, no doubt knowing the risk of being extreme in their ratings and even in their reviews, may display a tricky rhetoric, which can nevertheless be spotted when the argumentation fails: if you love/hate, even if you have the right words, lack of facts is basically suspicious.

So, two reefs to avoid: dishonesty of “collaborators” and aberration of “emotional distress”. Still, IMO, a “collaborator” may point out valuable arguments and an emotional reaction may as well hold sense. Finally, it all comes down to facts, arguments, explanation.

Even disinformation and intoxication sometimes deliver truth :) I’d say, let’s beware of ourselves as well and always look at the traffic, be the lights red or green!

First of all I try to find a real full-length review; that is where you’ll really learn about the product.

Next I mostly base myself on the lowest ratings on webshops.

Those are the worst complaints, and you pretty quickly know if they are founded or not.

I want to know which problems people encounter with the product and the store.

Then when you take into account what they complain about and give your own score to that, you know the risks.

Also take a look at the numbers of the ratings. Webshops rarely show a Gaussian curve, but if you find one you know that the rating is rather OK.

Full-length reviews indeed, but full-length criticism and arguments as well.

As long as 99 high or low ratings with little or no explanation don’t blind us to one fully detailed low/medium/high rating, then why not ratings, even if in this perspective rating remains IMO totally accessory. I mean, what’s the aim of consulting other users’ ratings? Is it to believe a product is good or bad because of the votes, or is it to consider the reason for a vote?

The question may seem odd, but if applied to all voting systems maybe many of us would start thinking more and be less affected by manipulation. I am stunned to notice how more and more surveys ask not what the user wants but what he believes most users want. This is a well-known process which uses a democratic approach with the aim of manipulation only. If we forget the vote’s scoring, at least on blogs and forums, and concentrate on the argumentation then, IMO, it’s a win-win approach, as it inclines the rater to think and explain and the reader to forget the majority and focus on an argument.

Beware of democracy: while it remains the best system, it is not perfect. No liberty without liberty of thought, and no liberty of thought without asking ourselves: why? Why do I make such a choice? Only if I know why may I share that choice with others within a true, healthy, impartial debate, which is the best democracy brings.

I try to take a look at all of the recent reviews, at least enough to see if it looks like a “real” review (meaning, something with actually helpful info). An individual rating doesn’t mean that much. I think Amazon, for example, does a pretty good job of organizing and presenting reviews and ratings, questions and answers, both of products and sellers. (Now, if they’d only let me pick my preferred shipper, then I’d buy a lot more stuff there.)

I never look at the ratings, they’re always broken.

I do read reviews when I want to buy something.

Sites nowadays usually include ‘helpful’ markers on reviews.

I find ‘helpful’ reviews are actually helpful to me.

Reviews by websites are broken in an even more fundamental way. Most of them review the item over an extremely short period of time (sometimes a single use!), then they move on and never retest it. When you want to have an idea of the durability/longevity/stability of an item, those are useless.