Create A Cached Website Copy

Many websites are discontinued after a time. This can be extremely frustrating if a site contained valuable information that is not available in the same form anywhere else on the Internet. Google Cache might be a solution, but it usually stores only one of the most recent states of a page, which is not necessarily the one containing the important information. There are various ways to preserve information on the Internet: saving it page by page with the web browser's Save As option, using a website downloader like HTTrack, or using an online service like Backup URL.

Each method has advantages and disadvantages. Using the Save As function in a web browser is probably the fastest way to download a single page to the computer. The resulting structure of individually saved pages, however, makes it cumbersome to work with on larger projects. Website downloaders handle large websites well, but they require some knowledge and configuration before they download the first byte.
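For readers who would rather script the per-page approach, here is a minimal Python sketch that saves one page to disk. It is not what the browser's Save As does: it stores only the raw HTML, without images or stylesheets. The function names and the file naming scheme are assumptions made for illustration.

```python
import urllib.request
from pathlib import Path
from urllib.parse import urlparse


def filename_for(url):
    """Derive a flat local file name from a URL (illustrative naming scheme)."""
    parsed = urlparse(url)
    # Join host and path, then replace slashes so the result is one file name.
    stem = (parsed.netloc + parsed.path).strip("/").replace("/", "_") or "index"
    return stem if stem.endswith((".html", ".htm")) else stem + ".html"


def save_page(url, target_dir="."):
    """Download a single URL and write the raw bytes into target_dir."""
    with urllib.request.urlopen(url, timeout=30) as response:
        body = response.read()
    path = Path(target_dir) / filename_for(url)
    path.write_bytes(body)
    return path
```

Calling `save_page("http://www.example.com/some-article/")` would write `www.example.com_some-article.html` into the current directory. Like Save As, this has to be repeated for every page, which is exactly why it does not scale to whole sites.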

The online service Backup URL offers another way to create a cached copy of a website. The user enters the URL of the page to preserve into the form on the service's website. The service then caches that URL and provides two addresses that point to cached versions of the page. The main advantage is that the cached pages are not stored locally, which may be preferable in environments with strict data storage policies. The disadvantages are just as obvious. Only one page can be cached per run, which makes the service as impractical as Save As when multiple pages need to be cached. There is also no guarantee that the service will still be available when the information needs to be retrieved.

It would be useful if the service offered a way to retrieve all cached pages at once. As it stands, the only way to keep track of cached pages is to copy and paste every generated URL into a separate document. Backup URL can be an interesting option under certain circumstances, but advanced users are better off with HTTrack or a similar application.

Update: Backup URL is no longer available. I suggest you use the previously mentioned HTTrack instead, or the browser's own Save As feature.


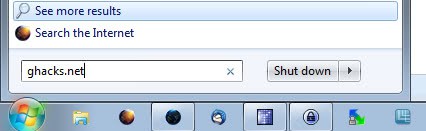

If I want to browse all the previous articles on a website like ghacks.net, I can either spend a very long time browsing all the webpages online (currently over 1,000 pages) or download the whole website with HTTrack. However, the individual article URLs are so well named that if there were a way to download or generate a list of only the URLs under http://www.ghacks.net, for instance, it would be much quicker & easier to browse them offline, in my own time. Then it is just a matter of typing in the URL online to go to the article itself.

Does anyone know if there is a way to do this?

Thanks
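One possible approach, sketched in Python: if a site publishes an XML sitemap, the article URLs can be read from it without downloading the articles themselves. Whether a given site has a sitemap, and where it lives, is an assumption here (`/sitemap.xml` is only a common convention), and the function names are made up for this example.

```python
import urllib.request
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def urls_from_sitemap(xml_data):
    """Return every <loc> value found in a sitemap document (str or bytes)."""
    root = ET.fromstring(xml_data)
    return [loc.text.strip() for loc in root.iter(SITEMAP_NS + "loc") if loc.text]


def fetch_sitemap(sitemap_url):
    """Download a sitemap and return its raw bytes."""
    with urllib.request.urlopen(sitemap_url, timeout=30) as response:
        return response.read()
```

A call such as `urls_from_sitemap(fetch_sitemap("http://www.example.com/sitemap.xml"))` would return a plain list of URLs that can be written to a text file. Large sites often publish a sitemap index instead, in which case the returned entries are themselves sitemap URLs that need to be fetched in turn.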

Another solution for Firefox users is Scrapbook.

It can capture pages, elements of pages & allows in-depth capture of a site.

The files are saved in a unique folder for each capture, therefore a full site with a complicated folder structure will all be saved (with parsed links) to a single folder.

It has an import/export tool so the capture can then be exported to another location. I use it to save archives & to view them on devices that aren’t connected to the web & don’t have Firefox installed.

In-depth capture starts right away, so to make sure it doesn’t follow every link, it’s best to pause the capture at the beginning & restrict it to the domain name or to a directory within the domain.

http://amb.vis.ne.jp/mozilla/scrapbook/

This extension was the reason I switched to Firefox & I use it every day (snippets etc).

Another option for finding a long-lost site that’s more effective than trying to use the Google cache is the Internet Archive Wayback Machine at http://web.archive.org (which also allows you to see older versions of a site that’s still up).
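The lookup can also be scripted. Below is a minimal Python sketch that uses the Internet Archive's availability endpoint to find the snapshot closest to a given date. The JSON field names reflect my understanding of that API's response format, and the function names are invented for this example.

```python
import json
import urllib.request
from urllib.parse import urlencode

AVAILABILITY_ENDPOINT = "https://archive.org/wayback/available"


def availability_query(url, timestamp=None):
    """Build the query URL; timestamp is an optional YYYYMMDD-style string."""
    params = {"url": url}
    if timestamp:
        params["timestamp"] = timestamp
    return AVAILABILITY_ENDPOINT + "?" + urlencode(params)


def closest_snapshot(response_text):
    """Return the archived URL from a JSON response, or None if there is none."""
    data = json.loads(response_text)
    closest = data.get("archived_snapshots", {}).get("closest")
    if closest and closest.get("available"):
        return closest.get("url")
    return None


def lookup(url, timestamp=None):
    """Ask the Wayback Machine for the snapshot closest to timestamp."""
    query = availability_query(url, timestamp)
    with urllib.request.urlopen(query, timeout=30) as response:
        return closest_snapshot(response.read().decode("utf-8"))
```

`lookup("example.com", "20090101")` would return the address of the archived copy nearest to January 2009, or `None` if the site was never archived.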

I cache my blog, http://vartak.blogspot.com, by using this hack:

I set the number of posts per view to a high number and then use the email address webinbrowser to send a copy of the page to my Gmail :)