How Internet Search Engines work

I published a short article on how search engines work in 2002 and thought it would be a good idea to present it to you as well. The information it contains is still valid, so take your time, read through the article, and post your comments and questions in the usual place.

1. Search engine structure

A search engine consists of eight interwoven elements:

- Url Server, Crawler, Parser, Store Server, Lexicon, Hit List, Repository, Searcher

The Url Server manages a list of unverified urls. New urls are added to the Url Server in different ways, for example through a form on the search engine's website. Another way is that a visited url contains links to new urls that are not yet verified. Each url gets a so-called docID, which is easier to archive than the full url.
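To make the idea of docIDs more concrete, here is a minimal Python sketch of a Url Server. The class name, the method names and the choice of plain integers as docIDs are my own assumptions for illustration. A real search engine will do this differently.

```python
from collections import deque


class UrlServer:
    """Hypothetical Url Server: queues unverified urls and assigns docIDs."""

    def __init__(self):
        self.doc_ids = {}       # url -> docID
        self.pending = deque()  # unverified urls waiting for the Crawler

    def add(self, url):
        """Register a url (from a form or a crawled link), return its docID."""
        if url not in self.doc_ids:
            self.doc_ids[url] = len(self.doc_ids)  # assumed: next free integer
            self.pending.append(url)
        return self.doc_ids[url]

    def next_unverified(self):
        """Hand the oldest unverified url to the Crawler, or None if empty."""
        return self.pending.popleft() if self.pending else None


server = UrlServer()
first = server.add("http://www.example.com/")
second = server.add("http://www.example.com/about")
again = server.add("http://www.example.com/")  # already known, same docID
```

The short integer is what the other components would pass around instead of the full url.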

The Crawler gets unverified urls from the Url Server and resolves each url's host name to an ip address using DNS. As soon as the ip address is available, it opens an HTTP connection to that address. If this succeeds, it sends a GET request to receive the page's contents (source). The page content is then transferred to the Store Server, which compresses it.
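The crawl steps can be sketched in Python with the standard library. This is only an illustration under my own assumptions: the function names are made up, zlib stands in for whatever compression a real Store Server uses, and only plain HTTP is handled.

```python
import socket
import zlib
from http.client import HTTPConnection
from urllib.parse import urlsplit


def fetch(url, timeout=10):
    """Resolve the host via DNS, connect to the ip and send a GET request."""
    parts = urlsplit(url)
    ip = socket.gethostbyname(parts.hostname)  # DNS lookup: name -> ip
    target = parts.path or "/"
    if parts.query:
        target += "?" + parts.query
    conn = HTTPConnection(ip, parts.port or 80, timeout=timeout)
    try:
        # The Host header tells the server which site is wanted at that ip.
        conn.request("GET", target, headers={"Host": parts.hostname})
        response = conn.getresponse()
        return response.read() if response.status == 200 else None
    finally:
        conn.close()


def store(page_source):
    """Store Server step: compress the page source (bytes) before archiving."""
    return zlib.compress(page_source)


def load(blob):
    """Reverse step used later by the Parser: decompress a stored page."""
    return zlib.decompress(blob)
```

A call like `store(fetch("http://www.example.com/"))` would chain the two steps, provided the fetch succeeds. Redirects, robots rules and error handling are left out on purpose.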

The Parser decompresses the sources retrieved from the Store Server. After that, the source is analysed in the following way. First, the Parser searches for words that are not in its Lexicon; if it finds a new word, it adds it to the Lexicon. Words that already exist in the Lexicon are added to the Hit List with a remark on how often they occur in the source. Additionally, information like the title, part of the text or the whole text is saved in the Repository.

The Lexicon contains all words that the Parser found in all urls processed. Each word has a pointer to the Hit List of that word.

The Hit List has pointers to the Repository. This makes it possible for the search engine to present results quickly. The information about the site that is stored in the Repository is presented in the search result window (normally the title, the url and the first line(s) of the page).
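How the Parser, Lexicon, Hit List and Repository fit together can be sketched with a few Python dictionaries. The data layout below is my own simplification: the "pointer" is just a list index, and the docID serves as the pointer into the Repository. For brevity, a new word gets its hit list entry in the same step in which it is added to the Lexicon.

```python
import re
from collections import Counter

lexicon = {}     # word -> index into hit_lists (the "pointer")
hit_lists = []   # hit_lists[i] -> {docID: number of occurrences}
repository = {}  # docID -> stored information about the page


def parse(doc_id, title, text):
    """Index one page: update the Repository, the Lexicon and the hit lists."""
    repository[doc_id] = {"title": title, "text": text}
    counts = Counter(re.findall(r"[a-z0-9]+", text.lower()))
    for word, count in counts.items():
        if word not in lexicon:           # new word: add it to the Lexicon
            lexicon[word] = len(hit_lists)
            hit_lists.append({})
        hit_lists[lexicon[word]][doc_id] = count  # remark how often it occurs


parse(0, "Security tips", "Security for your website. Website security matters.")
parse(1, "Cooking", "A website about cooking.")
```

After these two calls, looking up "website" in the Lexicon leads to a hit list that names both pages, and each docID in it leads on to the stored title and text.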

The Searcher is the link between the user and the search engine. Users enter search phrases in the Searcher, hit enter, and the Searcher uses the Lexicon and the Hit List to present results.

Example:

----------

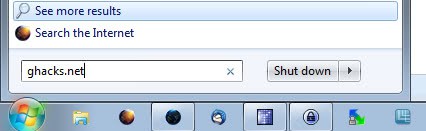

The user types http://www.google.com/ into the web browser, then enters the search phrase "security website" into the search field (which is the Searcher component).

The Searcher uses the Lexicon to look up the pointers for the two words (the pointers lead to the Hit List), follows them to the Hit List and checks the first 10 entries there. It then follows the pointers to the Repository and creates a new webpage containing the first 10 entries of the Hit List, with the title and some lines of each page from the Repository.
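The lookup in this example can be sketched as follows. All data here is invented for illustration, and I assume that each hit list entry carries a points value and that pages containing both words are ordered by the sum of their points. The real ordering rules are not described in this article.

```python
# Hypothetical index for the two words of the example phrase.
lexicon = {"security": 0, "website": 1}  # word -> pointer into hit_lists
hit_lists = [
    {0: 5, 1: 2, 2: 1},                  # docID -> points for "security"
    {0: 3, 2: 4, 3: 1},                  # docID -> points for "website"
]
repository = {
    0: {"url": "http://a.example/", "title": "Security website"},
    1: {"url": "http://b.example/", "title": "Security news"},
    2: {"url": "http://c.example/", "title": "Website security basics"},
    3: {"url": "http://d.example/", "title": "Website builder"},
}


def search(phrase, limit=10):
    """Return (title, url) for the best pages containing every word."""
    lists = []
    for word in phrase.lower().split():
        if word not in lexicon:          # unknown word: nothing can match
            return []
        lists.append(hit_lists[lexicon[word]])
    if not lists:
        return []
    common = set(lists[0]).intersection(*lists[1:])
    ranked = sorted(common, key=lambda d: (-sum(h[d] for h in lists), d))
    return [(repository[d]["title"], repository[d]["url"])
            for d in ranked[:limit]]


results = search("security website")
```

Only the pages that appear in both hit lists survive, and the `limit` parameter plays the role of the "first 10 entries".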

The most important element of a search engine is its rating system. Search engines rate urls to determine which results are displayed first when a user starts a search. I've chosen Google as the representative search engine because every search engine rates urls differently.

Google awards points to every url; the more points a url has, the higher its rank in the Hit List. Important elements that add points are the url itself, the title, the keywords, the content, the headings and so on. Additionally, urls get points for every link that points to them from other urls. Links from urls that have a high rating themselves give more points than links from urls with low ratings.
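The link part of the rating can be illustrated with a small iterative calculation. To be clear, this is not Google's actual formula. It is a simplified sketch in which every page passes a share of its own score along its outgoing links. The function name, the damping value of 0.85 and the number of rounds are assumptions I picked for the example.

```python
def link_scores(links, rounds=20, damping=0.85):
    """links maps a url to the urls it links to; returns a score per url."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    score = {page: 1.0 / len(pages) for page in pages}
    for _ in range(rounds):
        # Every page keeps a small base score, the rest comes from links.
        new = {page: (1 - damping) / len(pages) for page in pages}
        for source, targets in links.items():
            for target in targets:
                # A link passes on a share of the linking page's own score.
                new[target] += damping * score[source] / len(targets)
        score = new
    return score


# "d" is linked from the well-linked page "c", "f" from the unlinked page "e".
scores = link_scores({"a": ["c"], "b": ["c"], "c": ["d"], "e": ["f"]})
```

In this toy graph "d" and "f" each have exactly one incoming link, but "d" ends up with the higher score because its link comes from a page that is itself well linked. Pages without outgoing links simply drop their score here, which a real system would handle more carefully.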

2. Advanced search methods

Search engines use Boolean operators for advanced searches. The operators AND, OR and NOT are supported by most search engines.

word1 AND word2 means that the search engine looks for urls that contain both word1 and word2. Urls with only one of the words are not displayed in the results.

word1 OR word2 means that the search engine displays all urls that contain word1, word2, or both.

word1 NOT word2 means that the search engine looks for pages that contain word1 but not word2.
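If you think of each word as the set of urls that contain it, the three operators map directly onto set operations. The tiny index below is invented for the example.

```python
# Hypothetical index: word -> set of urls that contain the word
index = {
    "word1": {"u1", "u2", "u3"},
    "word2": {"u2", "u3", "u4"},
}

both = index["word1"] & index["word2"]         # word1 AND word2
either = index["word1"] | index["word2"]       # word1 OR word2
only_first = index["word1"] - index["word2"]   # word1 NOT word2
```

Here `both` holds u2 and u3, `either` holds all four urls, and `only_first` holds just u1.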

Examples (Google):

----------------------

"Clinton President" - displays results with urls that contain Clinton and President but not where one of them is missing

"Clinton AND President" - same result as above

"Clinton +President" - same result as above

"Clinton OR President" - displays results with urls that contain either Clinton or President or both

"Clinton NOT President" - displays results with urls that contain Clinton but NOT President

"Clinton -President" - same results as above

Google offers additional features, which are explained below.

"allintitle" - (allintitle:deny security), displays urls that contain the terms in their title

"allinurl" - (allinurl:security advise), displays urls that contain all terms in their url.

"cache" - (cache:www.deny.de), displays a cached version of the requested url

"date" - (google advanced search, no shortcut), presents results of a specific period

"filetype" - (deny filetype:pdf), displays urls that contain the search terms and the specified file type.

"info" - (info:www.deny.de) Goggle lists stored information about the requested website

"intitle" (intitle:security advise), displays url that have the first term in their title and the others anywhere on the page.

"inurl" - (inurl:security advise), displays urls that contain the first term in their url and the other terms anywhere on the page

"languages" - (google advanced search, no shortcut), displays only results in a specified language

"link" - (link:www.deny.de), displays all urls that link to the page

"occurences" - (google advanced search, no shortcut), specifies where the search term has to occur on the page

"phrase searching" ("to keep an eye on"), only urls are displayed where the phrase is written like this. It wont show results where only part of the phrase is present

"related" - (related:www.deny.de), displays similar pages only

"safe search" - (google advanced search, no shortcut), filters urls that contain unsuitable webpages for minors, for example pornographic pages

"site" - (security site:www.securityadvise.de), processes only one url for the terms

"spell" - (spell:advise), spell checks the term

"stocks" - (stocks:YHOO), displays financial information about companies, you need the special company code to search for it, you get that code at Yahoo (http://finance.yahoo.com/l