Website Downloader: download entire Wayback Machine site archives

Website Downloader is a relatively new service that enables you to download individual pages or entire archives of websites on the Wayback Machine site.

Update: Website Downloader is no longer free. You are asked to pay before you get to see a single bit of the specified website. It is not recommended anymore. The only free solution that I'm aware of right now is Wayback Machine Downloader. It is a Ruby script, however, and requires more or less setup time depending on the operating system that you are using. Archivarix is an online service that is good for 200 free files from the archive. If the site is small, this may do as well. All other services are either not working anymore, or paid services. End

The Wayback Machine, part of the Internet Archive, is a very useful service. It enables you to browse website snapshots recorded by the site's crawler.

You may use it to check out past versions of a page on the Internet, or to access pages that are permanently or temporarily unavailable. It is also a great option for webmasters who need to recover web pages that are no longer accessible (maybe because the hosting company terminated the account, or because of data corruption and a lack of backups).

Several browser extensions, such as Wayback Fox for Firefox or Wayback Machine for Chrome and Firefox, make use of the Wayback Machine's archive to provide users with copies of pages that are not accessible.

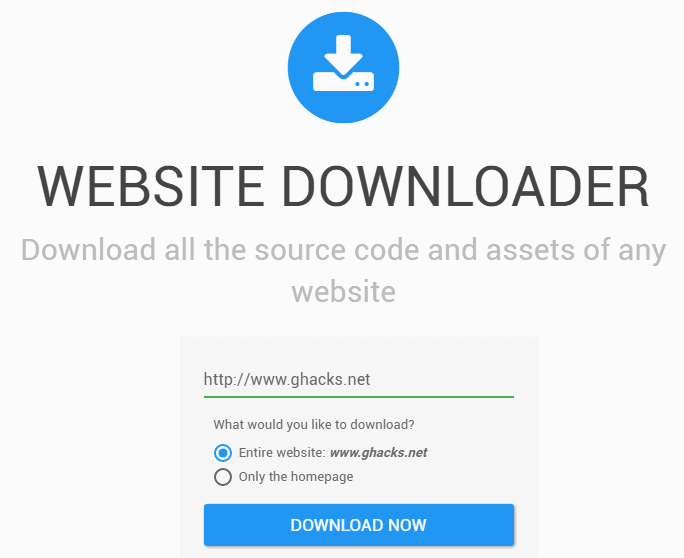

Website Downloader

While you can download any page on the Wayback Machine website using your web browser's "Save Page" functionality, doing so for an entire website may not be feasible depending on its size. That is not a problem if a site has just a few pages, but if it has thousands of them, you would spend entire weeks downloading those pages manually.
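For larger sites, the list of archived pages can be retrieved programmatically instead of clicking through snapshots one by one. What follows is a minimal Python sketch, not the method used by any particular service. It assumes the Wayback Machine's public CDX endpoint at web.archive.org/cdx/search/cdx, its `url`, `output`, `fl`, `filter` and `collapse` parameters, and a JSON response whose first row holds the field names. The function names are hypothetical, and example.com is a placeholder.

```python
import json
from urllib.parse import urlencode

# Assumption: the Wayback Machine's public CDX search endpoint.
CDX_ENDPOINT = "https://web.archive.org/cdx/search/cdx"


def build_cdx_query(domain):
    """Return a CDX query URL listing one successful capture per archived URL."""
    params = [
        ("url", domain + "/*"),        # everything below the domain
        ("output", "json"),
        ("fl", "timestamp,original"),  # only the two fields used below
        ("filter", "statuscode:200"),  # skip redirects and errors
        ("collapse", "urlkey"),        # one row per distinct URL
    ]
    return CDX_ENDPOINT + "?" + urlencode(params)


def parse_cdx_rows(body):
    """Turn the JSON response body into (timestamp, original_url) pairs."""
    rows = json.loads(body) if body.strip() else []
    if not rows:
        return []
    header, data = rows[0], rows[1:]
    ts, orig = header.index("timestamp"), header.index("original")
    return [(row[ts], row[orig]) for row in data]


def snapshot_url(timestamp, original):
    """Build the capture URL; the `id_` suffix requests the file as archived."""
    return "https://web.archive.org/web/{}id_/{}".format(timestamp, original)
```

Fetching the query URL (for instance with `urllib.request.urlopen`) and passing the response text to `parse_cdx_rows` yields the captures to download. Anyone trying this on a large site should add pauses between requests to avoid overloading the archive.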

Enter Website Downloader: the service, which was free at the time of the review, lets you download a website's entire archive to the local system.

All you have to do is type the URL that you want to download on the Website Downloader site, and select whether you want to download the homepage only, or the entire website.

Note: It may take minutes or longer for the site to be processed by Website Downloader.

Here is a short video that demonstrates the functionality:

The process itself is straightforward. The service grabs each HTML file of the site (or just one if you select to download a single URL), and clones it to the local hard drive of the computer. Links are converted automatically so that they can be used off-line, and images, PDF documents, CSS and JavaScript files are downloaded and referenced correctly as well.
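The link conversion step can be illustrated with a short Python sketch. It shows one possible way to make archived pages work off-line and is not the service's actual implementation. It assumes archived links carry a prefix of the form `/web/<timestamp>/` (optionally with the web.archive.org host in front), it ignores query strings, and it uses a simple regular expression rather than a full HTML parser. The names `local_path` and `rewrite_links` are hypothetical.

```python
import posixpath
import re
from urllib.parse import urlparse

# Assumed Wayback prefix, e.g. https://web.archive.org/web/20170101000000/
# or the root-relative form /web/20170101000000im_/
WAYBACK_PREFIX = re.compile(
    r"^(?:(?:https?:)?//web\.archive\.org)?/web/\d+[a-z_]*/", re.I
)
ATTR = re.compile(r'\b(href|src)=(["\'])(.*?)\2', re.I)


def local_path(url):
    """Map an original URL to a file path inside the local copy."""
    path = urlparse(url).path or "/"
    if path.endswith("/"):
        path += "index.html"  # directories become index.html
    return path.lstrip("/")


def rewrite_links(html, page_url, site_host):
    """Point same-site href/src attributes at local files, relative to the page."""
    page_dir = "/" + posixpath.dirname(local_path(page_url))

    def replace(match):
        attr, quote, target = match.groups()
        original = WAYBACK_PREFIX.sub("", target)
        if urlparse(original).netloc != site_host:
            return match.group(0)  # leave external and relative links alone
        relative = posixpath.relpath("/" + local_path(original), page_dir)
        return "{}={}{}{}".format(attr, quote, relative, quote)

    return ATTR.sub(replace, html)
```

Calling `rewrite_links(html, "http://example.com/blog/", "example.com")` turns a link to the archived copy of `http://example.com/blog/post.html` into `post.html`, so the saved page opens correctly from disk as long as the referenced files are stored under the paths that `local_path` returns.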

You may download the copy of the site as a zip file to your local system after the background process completes, or use the service to get a quote and get the copy converted to a WordPress site.

Closing Words

Website Downloader is an interesting service. It was swarmed with requests at the time of the review, so generating a download, even of a single page, may take longer than it should.

There is also the chance that some people will abuse the service by downloading entire websites, and publishing them again on the Internet.

Now You: What's your take on Website Downloader?

What website downloader would you recommend?

Garbage scam! They charge for everything. Please de-list this fraud.

Jessy, thank you for letting me know. You are right, the service is not free anymore. I removed the link and added links to alternative services.

To download an entire website from archive.org, you can also try this service: https://en.archivarix.com/

This really took a long time for me also. I found a service that was quite reliable here http://waybackdownloader.com/

Using Feedly, the link to the first “link” (in this case “website downloader”) is missing in RSS. Not just on this article, on all of them. Is this by design to lure me into this blog, or is it a bug?

Martin, this website is just a pure 👎Scam👎.

Just Use WebsiteRecovery, it’s OpenSource and FREE: https://github.com/alessandropellegrini/WebsiteRecovery

I’ve tested it and it works fine and fast! :)

Thanks for sharing, I usually use https://github.com/hartator/wayback-machine-downloader and http://archiveorg.download/ , but always ready to try another free option.

https://www.httrack.com/

I tried to use that software long ago, and failed. I found it severely lacking in explanations, and very obsolete regarding its look and feel. I might give it a try again, though : I see there has been a fresh version this year.

I searched an old site I used to frequent. It was ready for download in 10 seconds. I then received a popup to pay $0.99 for that zip download.

Congratulations for your findings ! One dollar for a download, and they never, nowhere, tell you beforehand ! Now that explains why the site presentation is so obscure and leaves so many questions unanswered…

Not clear either, from the developer’s website :

“Website Downloader, Website Copier or Website Ripper allows you to download websites from the Internet to your local hard drive on your own computer.”

So, what are this Website Copier and this Website Ripper ? Similar services by the same developer, offering different options ? Or competitors ? Where does one find them ? What’s the difference between them and Website Downloader ? Or are they alternate names just inserted there to attract Google searches ?

I’m sick and tired of this trend of slapping together a quick-and-dirty site to peddle a new service, always beautiful and slick and looking simple to use, but dramatically lacking in explanations, directions and support. The “help video” provided is comically short and unhelpful. Is this any more than a proof of concept — if that ?

OK, now my downloading tab has disappeared, or it has stopped downloading without warning. Does this mean downloading is finished ? Will I get the promised email warning me that the download is finished ? Or rather the upload, since apparently it uploads the website to its website, and then you get to download it ? So you need to “download” twice, in fact ? If the vague hints one can extract from their site are to be believed ?

If anybody has managed to download anything through this service, please drop a line and tell the world.

“Or are they alternate names just inserted there to attract Google searches?”

Yes, I think you are right about that.

In case you – or anyone else – still needs to download a website, I developed a similar tool here:

https://www.waybackmachinedownloader.com/website-downloader-online/

I don’t know if we are any better, but let me know via our contact form if anyone has any complaints or feedback.

At least we are upfront about our pricing and we give small sites away for free (up to 10MB)

“Will I get the promised email warning me that the download is finished?”

We solved this by sending users an email with a link, so they can track the progress. Paying users also get a notification when the scraping has finished.

Adam – Waybackmachinedownloader.com

Wow, this really takes a long time. I’m currently downloading what’s in my opinion a modest-size website (a very flat blog with no ads or other fancy additions, publishing one post a day since April 2015), and Website Downloader has been at it for 2 hours. No indication of estimated time left, either. The progress bar is useless : once it has covered its course, it begins all over again.

Also, it’s not clear what the difference is between the option to download a site from Wayback Machine, and to download it directly from its live URL, which Website Downloader also purports to do here :

https://websitedownloader.io

@Clairvaux

Same here. I tried several times to download something from the Wayback Machine, and each attempt ran for more than 3 hours. It doesn’t work.

Unfortunately, the superb WinHTTrack also doesn’t work well with Wayback Machine sites, and I couldn’t get the Ruby tool by hartator running. I’ll now try the bash script by alessandropellegrini (thanks @Mikhoul).

What is the business model ?

I think they offer options to convert the downloaded site to WordPress.

Would this be a paying option ? Or would they get revenue from WordPress doing so ?

The idea of the tool is very attractive, anyway. I’m just trying a download. Not finished yet.

Downloads to your local system are free, it appears.

Is the link to the website missing?

It is listed on the YouTube page of the video…

I have added the link to the first paragraph to make it clearer.