Submit URLs To Google Via Google Webmaster Tools

Creating a new website can be a very rewarding process. Shortly after setting up a site, webmasters usually run into a phase where they have to wait until the site gets indexed by the big search engines. This can take minutes, hours, days and sometimes even weeks, as it depends on when the search engine bots first visit the website. Content is usually indexed quickly if links to it are posted on an authority site or a well-crawled website, or if pings and social bookmarking are used to get it indexed.

Sometimes, though, you wait and wonder why the darn page is not in the index yet. This can also be a problem for an established site, for instance if you have changed the content of a popular post or of your site in general. You'd like to see the new content indexed by the search engines, which usually does not happen right after you hit the save button.

Google has now announced that it has added an option to Google Webmaster Tools that lets webmasters submit their URLs to the search engine.

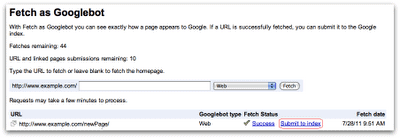

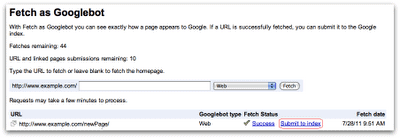

The existing Fetch as Googlebot feature in Webmaster Tools has been improved with a Submit to Index option, which allows you to submit the fetched URL to Google for evaluation and inclusion in the index.

Here is how this works. Open Google Webmaster Tools and select Diagnostics > Fetch as Googlebot in the left sidebar. You have to select the right domain first, of course, and you need to add it if it is not already listed in Webmaster Tools.

Enter the URL that you want crawled. This can be a website's homepage, a subpage or any other page that is publicly accessible on the Internet. Click Fetch to retrieve the page as Googlebot. This process may take anywhere from a few seconds to a few minutes. Once done, you get a status report on the same page and an option to submit to index.

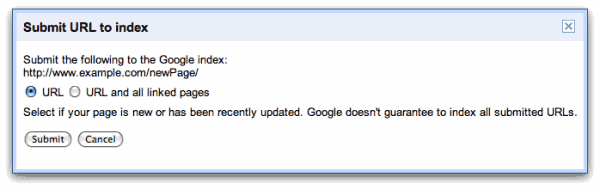

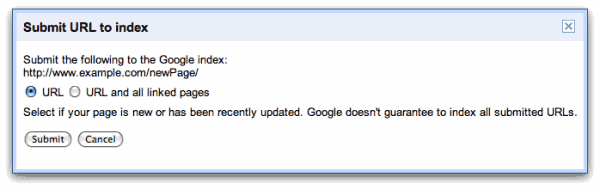

You get a prompt first where you can choose to submit only the single URL or the URL and all pages that it links to.

Google currently has a limit of 50 single-URL submissions per week, and 10 submissions per week of a URL together with all the pages it links to. The numbers are shown on the Fetch as Googlebot page.
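If you submit pages regularly, it can help to keep track of how much of the weekly allowance is left before you open Webmaster Tools. Here is a minimal Python sketch that only does local bookkeeping. It does not talk to Google in any way, the class and method names are made up for illustration, and the two limits are simply the ones quoted above, which Google may change.

```python
from dataclasses import dataclass

# Weekly limits as quoted in the article; treat them as assumptions,
# since Google may change them at any time.
SINGLE_URL_LIMIT = 50       # "URL only" submissions per week
URL_WITH_LINKS_LIMIT = 10   # "URL and all linked pages" submissions per week


@dataclass
class WeeklyQuota:
    """Hypothetical local tracker for manual Submit to Index usage."""
    single_used: int = 0
    with_links_used: int = 0

    def remaining(self, with_linked_pages: bool = False) -> int:
        """Return how many submissions of the given kind are left this week."""
        if with_linked_pages:
            return URL_WITH_LINKS_LIMIT - self.with_links_used
        return SINGLE_URL_LIMIT - self.single_used

    def record(self, with_linked_pages: bool = False) -> bool:
        """Count one submission; return False if that quota is used up."""
        if self.remaining(with_linked_pages) <= 0:
            return False
        if with_linked_pages:
            self.with_links_used += 1
        else:
            self.single_used += 1
        return True
```

You would call `record()` each time you submit a page by hand and start with a fresh `WeeklyQuota()` at the beginning of every week. The two counters are kept separate because the article lists the two submission types with separate limits.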

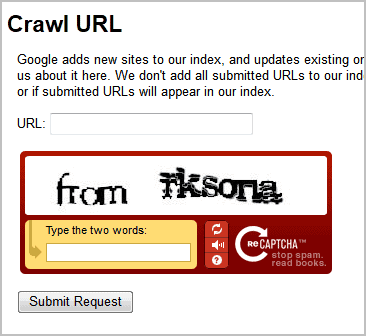

Google has revamped the public Crawl URL form as well. The core difference, besides the captcha, is that webmasters and users do not have to verify ownership of the page or site to submit it to the index.

The new Submit to Index feature is handy for webmasters who have trouble getting their website or a specific part of that website indexed in Google.

I have been submitting my site a number of times this week because of frequent changes, but now the response only shows ‘Submit URL only to index’ and does not give me the option to submit all linked pages. Why is this?

no

My server was down when Google tried to crawl.

Is this suitable for me to use to tell Google all is ok?

Will that work for Blogger blogs as well?

Yes, it works for Blogger blogs.

I’m not aware of any restrictions.