Don't like Bing Chat's response? You may now change its tone!

Microsoft has just updated Bing Chat on the official Microsoft Bing search website to include tone-of-response options. Announced some time ago, the options are designed to give users a say in how the artificial intelligence responds.

Up until now, Bing Chat returned the answer that it considered best for a user's query. Microsoft is now trialing an option to fine-tune those answers.

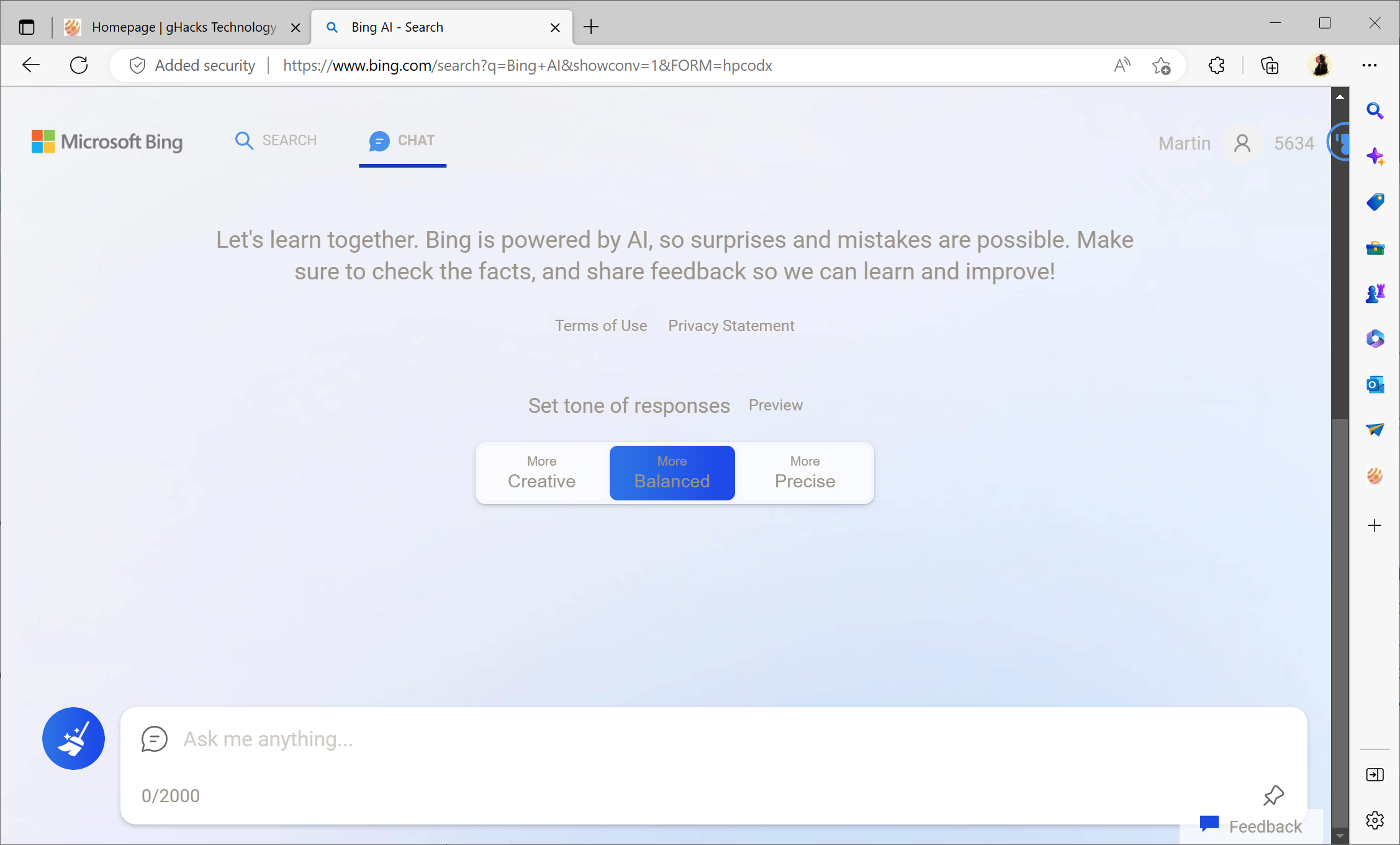

With today's release, Bing Chat users may now switch from the default balanced mode to either a "more creative" or a "more precise" mode. As the names suggest, these modes instruct the artificial intelligence to put the focus either on creativity or on facts. Balanced, which is the default mode, provides answers that strike a balance between the two.

The new modes are available directly on Bing Chat. They are considered beta by Microsoft, but all Bing Chat users who have been invited by Microsoft should be able to use these modes to tune the answers of the AI.

When users switch modes, Bing Chat informs them that it has reset the previous conversation. The dialogue begins anew with the user's next input, and it is reset again whenever the mode is changed. There is no option to get answers from all three modes during a single conversation with Bing Chat.

Bing Chat uses different colors to highlight the active mode, and it lists the number of responses in the current chat session. Responses are still limited to six per session, after which the conversation needs to be reset before Bing Chat will respond to user queries again.

Users may still run into bugs on Bing Chat. When asked to tell a story that involves the color red, Bing Chat's creative mode began telling a story, but ended it abruptly, stating "I am sorry, I am not quite sure how to respond to that".

Mikhail Parakhin announced the launch of the latest iteration of Bing Chat today on Twitter. Parakhin, the head of advertising and web services at Microsoft, revealed last week that major updates were coming to Bing Chat. Also last week, Microsoft launched a new tagging system, which the company hopes will prevent the AI from returning inappropriate responses to users.

Microsoft admitted earlier that the AI was sometimes getting confused, especially during long conversations. Parakhin elaborated on this, stating that Bing Chat sometimes confused user queries, its own responses, and search results with one another. The new tagging system should put an end to that, according to Parakhin.

The new tone-of-response feature is another addition that has been in the making for some time. Besides the ability to respond differently to queries depending on the selected mode, Bing Chat's update includes the following two main improvements, according to Parakhin:

- Significant reduction in cases where Bing refuses to reply for no apparent reason.

- Reduced instances of hallucination in answers.

The first improvement reduces the number of topics that Bing Chat refuses to talk about. The AI will still refuse to discuss certain topics, though, for instance those that involve feelings.