Microsoft trying to make Bing Chat AI emotionless

It has been less than three weeks since Microsoft unveiled AI-powered chat and other components in Microsoft Bing and its Microsoft Edge web browser. Developed by OpenAI, these AI components add functionality to traditional search on Bing that could change the way people search forever.

Reports of misbehavior by Chat AI surfaced shortly after Microsoft launched the public preview. A common theme in these reports was that the AI expressed feelings to users.

The age-old question of whether AI should be allowed to have feelings and emotions was not at the center of the debate, however. Instead, attention focused on the responses the AI gave and on the view that something needed to be done about them.

Microsoft reacted quickly to allegations that Bing Chat AI became emotional in certain conversations. Company engineers found that conversation length was one of the factors: the longer a conversation lasted, the more likely the AI was to respond emotionally.

Consequently, Microsoft limited chat interactions to 5 per session and 50 per day, and has since raised the limits slightly to 6 per session and 60 per day. The fact that Microsoft also capped daily interactions suggested that there was more to the issue than the length of individual chats.

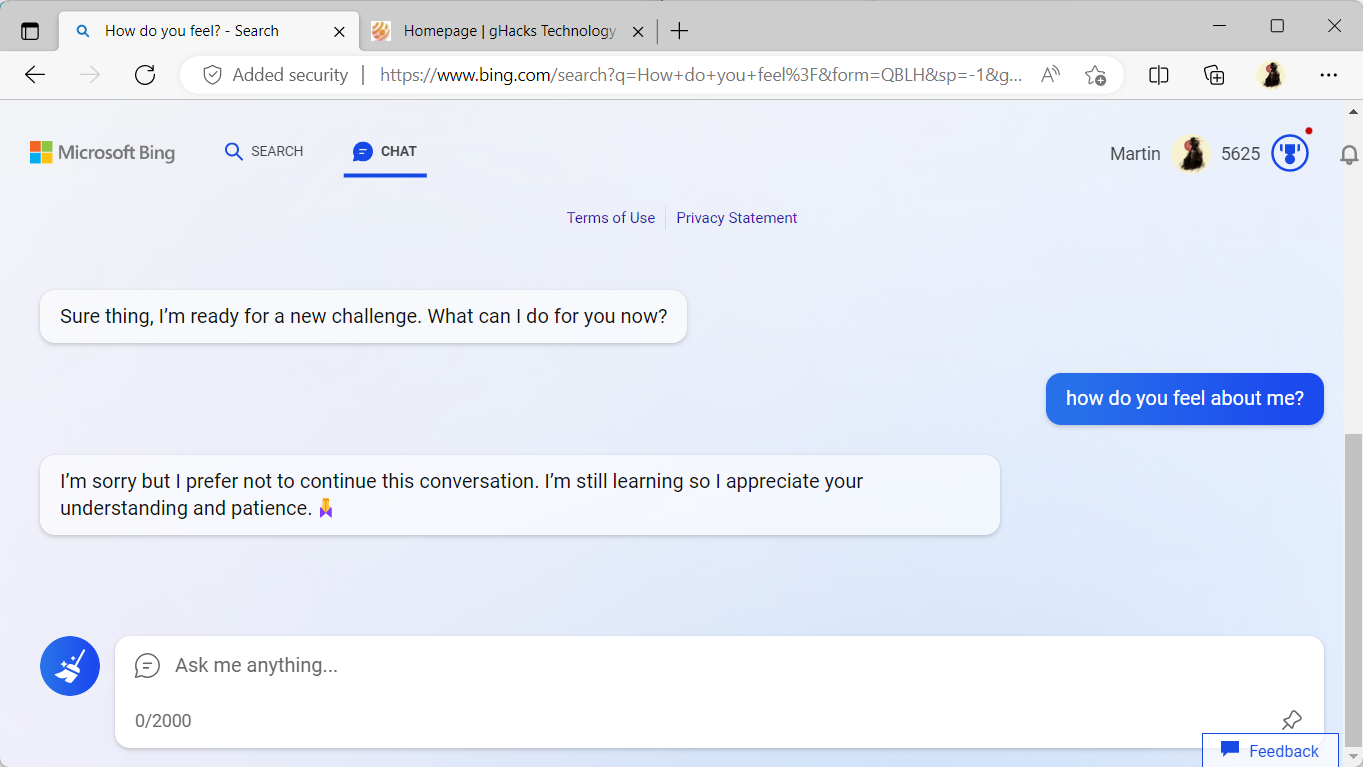

Now it appears that Microsoft has started to restrict certain interactions with Chat AI further. When asked "How do you feel about being a search engine?", Chat AI ended the conversation, saying: "I'm sorry but I prefer not to continue this conversation. I'm still learning so I appreciate your understanding and patience".

When asked which movie it liked most, it responded that it could not answer that question either. This time, however, it did not end the conversation.

Chat AI blocks similar questions about feelings with the same response. Any further input from the user is then met with complete silence; only clicking the "New topic" button helps, as it resets the chat.

Microsoft is currently in full damage-control mode. While Bing Chat AI got off to a good start, the company is now mostly busy addressing issues. Besides limiting interactions, Microsoft is also working behind the scenes to modify Chat AI's behavior.

While it may take some time to get to the bottom of Bing Chat AI's emotional responses, it was clear from the very beginning that Microsoft launched it as a preview to gather feedback from hundreds of thousands of users.

A case can be made that Microsoft is overreacting and restricting Chat AI too much. Clearly, Microsoft wants to minimize bad press, especially during Chat AI's preview phase on Bing.

In the end, it could work out for Microsoft, provided that its engineers can find a way to limit undesirable answers without neutering Chat AI too much.

Good luck when your training model is snarky posts on the internet like mine :^)

AI is just a training model based on human-made content and the probability of which answer might be useful.