Workers read violent, graphic depictions so that ChatGPT would be less toxic than GPT-3

Upon its release in November 2022, ChatGPT was widely recognized as a groundbreaking technological achievement. The advanced AI chatbot can produce text on a wide variety of subjects, including reworking an old Chinese proverb into Gen Z vernacular and explaining quantum computing to children through allegory and storytelling. Within just one week, it had amassed over a million users.

However, the success of ChatGPT cannot be attributed solely to the ingenuity of Silicon Valley. An investigation by TIME revealed that, in its efforts to reduce toxicity in ChatGPT, OpenAI relied on outsourced Kenyan workers who earned less than $2 per hour.

The work performed by these outsourced laborers was crucial for OpenAI. GPT-3, ChatGPT's predecessor, possessed the ability to compose sentences effectively, but the application had the tendency to spew out violent, sexist and racist statements. The issue is that the utility was trained largely on information from the internet, home to both the worst and best of human intentions. Even though access to such a massive amount of human information is the reason why GPT-3 showcased such deep intelligence, it’s also the reason for the utility’s equally deep biases.

Getting rid of these biases and the harmful content that inspired them was no easy task. Even with a team of hundreds of people, it would have taken decades to sift through every piece of data and verify whether it was appropriate or not. The only way that OpenAI was able to lay the groundwork for the less biased and offensive ChatGPT was through the creation of a new AI-powered safety mechanism.

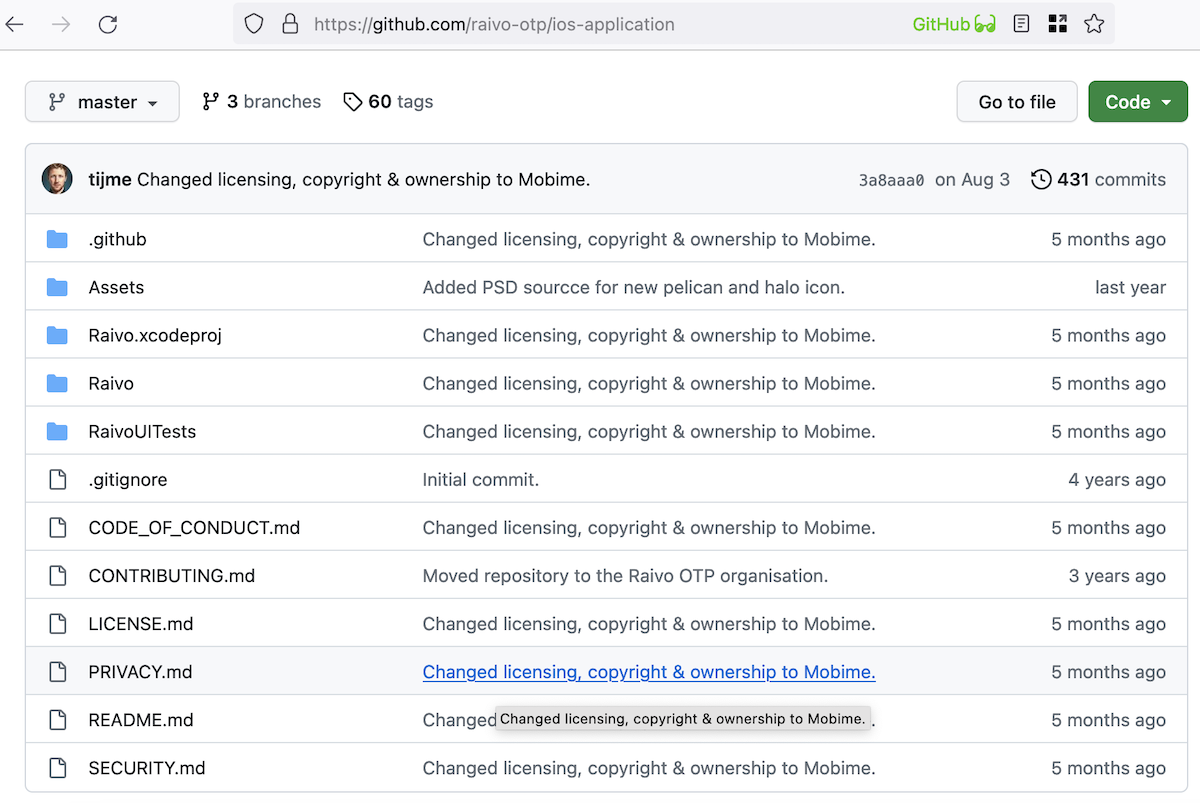

However, in order to train that AI-powered safety mechanism, OpenAI would need a human labor force, and it found one in Kenya. As it turns out, to create a mechanism for detecting harmful content, you need a vast library of labeled harmful content on which to train it. That way, it learns what to recognize as acceptable and what to recognize as toxic. In hopes of building a non-toxic chatbot, OpenAI began sending tens of thousands of text snippets to an outsourcing company in Kenya in November 2021. A significant portion of the text appeared to have been sourced from the darkest corners of the internet. These texts included graphic descriptions of depraved acts.

These texts were then analyzed and labeled by the workforce in Kenya, who were sworn to secrecy and stayed silent largely out of fear of losing their jobs. The data labelers hired on behalf of OpenAI were paid a wage ranging from $1.32 to $2 per hour, depending on their experience and performance.
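To make the role of the labels concrete, here is a minimal sketch of how human-labeled snippets can train a text classifier. It is not OpenAI's system: the technique (a tiny naive Bayes classifier with add-one smoothing), the `LABELED` examples, and the names `train` and `classify` are all assumptions chosen for illustration, and the sample sentences are deliberately mild stand-ins.

```python
import math
from collections import Counter

# Hypothetical, hand-written stand-ins for human-labeled snippets.
LABELED = [
    ("i will hurt you", "toxic"),
    ("you are worthless and stupid", "toxic"),
    ("i hate you", "toxic"),
    ("have a lovely day", "acceptable"),
    ("thank you for your help", "acceptable"),
    ("the weather is lovely today", "acceptable"),
]


def tokenize(text):
    """Lowercase the text and split it on whitespace."""
    return text.lower().split()


def train(examples):
    """Count words per label; these counts are the whole 'model'."""
    word_counts = {}          # label -> Counter of word frequencies
    label_counts = Counter()  # label -> number of training snippets
    vocab = set()
    for text, label in examples:
        label_counts[label] += 1
        counts = word_counts.setdefault(label, Counter())
        for word in tokenize(text):
            counts[word] += 1
            vocab.add(word)
    return word_counts, label_counts, vocab


def classify(text, model):
    """Return the label with the highest naive Bayes log-score."""
    word_counts, label_counts, vocab = model
    total = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, n in label_counts.items():
        score = math.log(n / total)  # prior from label frequency
        denom = sum(word_counts[label].values()) + len(vocab)
        for word in tokenize(text):
            if word in vocab:  # words never seen in training are ignored
                # Add-one smoothing keeps unseen word/label pairs nonzero.
                score += math.log((word_counts[label][word] + 1) / denom)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


model = train(LABELED)
print(classify("you are stupid", model))
```

The point of the sketch is that the classifier knows nothing beyond the labels it is given, which is why the quality and volume of the human labeling matter so much.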

OpenAI’s stated position was clear from the beginning: ‘Our mission is to ensure artificial general intelligence benefits all of humanity, and we work hard to build safe and useful AI systems that limit bias and harmful content.’ However, the toll that this task took on the Kenyan workers came to light only through TIME’s investigation. Describing the graphic and depraved content he had to label, one labeler said: ‘That was torture, you will read a number of statements like that all through the week. By the time it gets to Friday, you are disturbed from thinking through that picture.’

The impact on the workers was such that in February 2022 the outsourcing firm, Sama, cancelled all of the work it had been hired by OpenAI to complete. The contract was supposed to continue for a further eight months.

This story highlights the seedy underbelly of the tech we are so excited by today. There are countless invisible workers performing countless unimaginable tasks to ensure that AI works the way we expect.

It’s like the billions of free workers who solve captchas for Google every day to train AIs for them, and also not unlike that Silicon Valley TV series character who had to validate thousands of dick pics as a full time job to train a child safety AI…

Being a “programmer”, I have not really bothered looking into the “AI” platform because I think it’s bunk. The idea that a program can think and develop its own ideas and concepts, or evolve on its own, is humorous. As a result of that stance I have not bothered to read much on the subject. However, this article’s title caught my attention:

After reading I have these 2 thoughts:

1. First of all, the “new” AI platform (as the article explains) works by collecting data: “The issue is that the utility was trained largely on information from the internet”. So right there, in what way is collecting and databasing existing data (ideas and conversations from the internet) intelligent? It’s a simple program that collects data and determines how to reply based on what matches your entry. As the article points out, “access to such a massive amount of human information is the reason why GPT-3 showcased such deep intelligence”.

2. If it’s artificial intelligence, why would it need to be censored? Again, the article points out that collecting the data from the web is “also the reason for the utility’s equally deep biases” and that “Getting rid of these biases and the harmful content that inspired them was no easy task.” They hired a firm to have humans sift through the collected data and tag the “toxic” data so the AI could be programmed to avoid such terms and conversations.

Read it if you’re interested, but the article pretty much confirms that AI is just a bunch of human data repackaged and delivered in a quick, efficient manner. Like access to information on the phone in your pocket, it will only “dumb up” humans and make our brains lazier.

Just my 2 cents