Firefox's Project Fission: better security and more processes

Mozilla is working on a new process model for the Firefox web browser that will enable full site isolation once it rolls out.

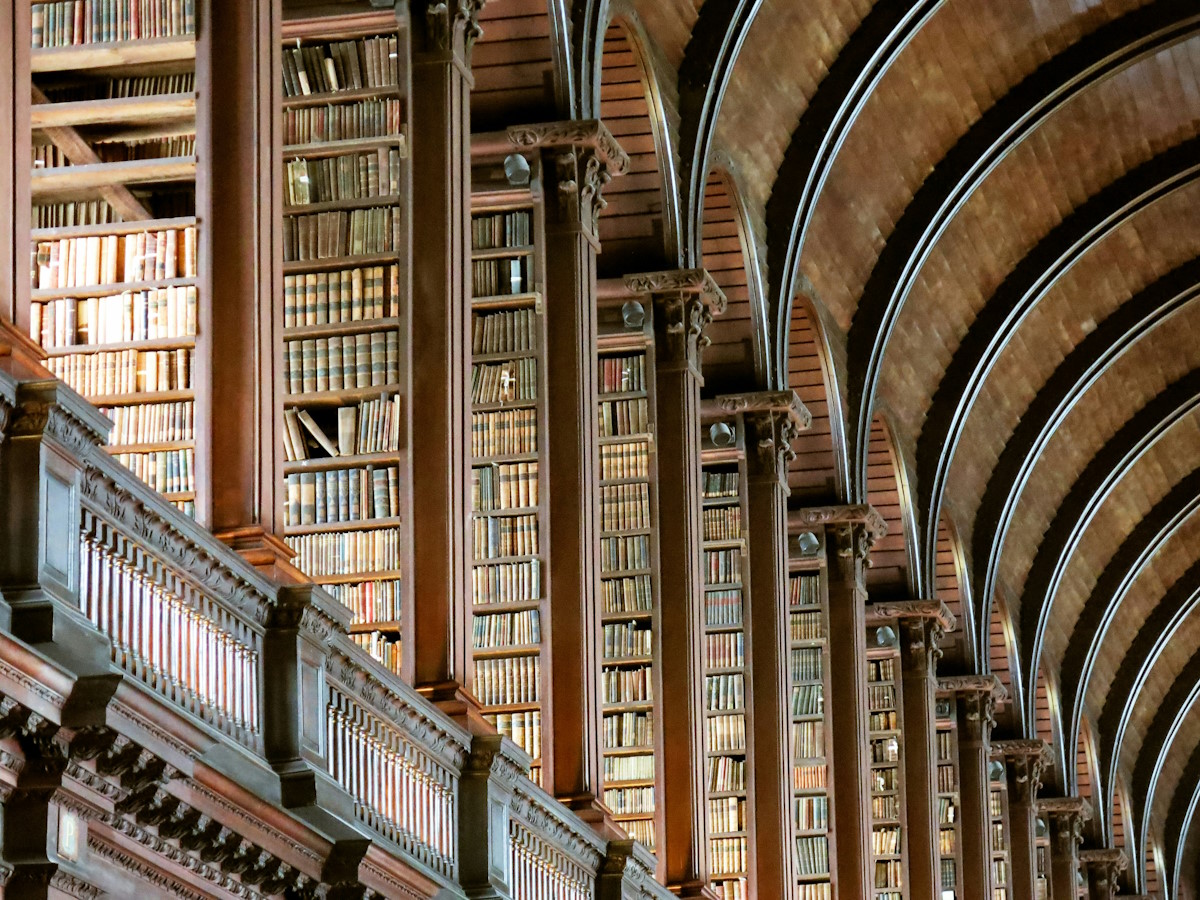

Firefox's current process model runs the browser user interface and web content in separate processes. Web content is further divided into several processes; you can check how many by loading about:support in the browser's address bar.

Because Firefox's current system limits the number of web content processes, content from different sites may end up in the same process. Cross-site iframes loaded in a tab currently use the same process as their parent page.

Project Fission

Project Fission, Mozilla's codename for the new process model, aims to change that by separating cross-site iframes from their parent to improve security and stability. In practice, Firefox will load cross-site iframes in their own processes, separate from the page that embeds them.
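To make the difference concrete, here is a toy Python model of how frames might be assigned to processes with and without site isolation. It is a minimal sketch, not Firefox code: `site_key` and `assign_processes` are hypothetical names, process IDs are plain integers, and the "site" is approximated as the URL scheme plus the last two host labels. Browsers that implement site isolation group frames by scheme plus registrable domain (eTLD+1) using the Public Suffix List, which this sketch does not do.

```python
from __future__ import annotations

from urllib.parse import urlsplit


def site_key(url: str) -> tuple[str, str]:
    """Return a simplified (scheme, site) key for a URL.

    Assumption: the site is approximated by the last two host labels.
    Real browsers use the Public Suffix List (eTLD+1), so a host such as
    example.co.uk would be grouped incorrectly by this toy version.
    """
    parts = urlsplit(url)
    host = parts.hostname or ""
    labels = host.split(".")
    site = ".".join(labels[-2:]) if len(labels) >= 2 else host
    return (parts.scheme, site)


def assign_processes(top_url: str, iframe_urls: list[str],
                     isolate_sites: bool) -> list[tuple[str, int]]:
    """Pair each frame URL with a hypothetical process ID.

    isolate_sites=False: every frame shares the tab's process (0), like
    cross-site iframes in the current model described above.
    isolate_sites=True: frames with the same site key share a process and
    each new site gets its own process (Fission-style).
    """
    frames = [top_url, *iframe_urls]
    if not isolate_sites:
        return [(url, 0) for url in frames]
    pids: dict[tuple[str, str], int] = {}
    result = []
    for url in frames:
        key = site_key(url)
        if key not in pids:
            pids[key] = len(pids)  # next unused process ID
        result.append((url, pids[key]))
    return result


if __name__ == "__main__":
    page = "https://news.example.com/article"
    iframes = ["https://ads.tracker.net/banner", "https://cdn.example.com/widget"]
    print(assign_processes(page, iframes, isolate_sites=False))
    print(assign_processes(page, iframes, isolate_sites=True))
```

Without isolation, all three frames share process 0. With isolation enabled, the cross-site ad iframe lands in process 1, while the same-site CDN iframe shares process 0 with the page. Every extra process is where the additional memory use discussed below comes from.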

Mozilla is following Google's lead here. Google introduced site isolation in Chrome last year to limit renderer processes to individual sites. Google concluded at the time that site isolation would improve the security and stability of the browser. The downside was that Chrome would use more memory: initial tests revealed that Chrome used about 20% more memory with site isolation fully enabled.

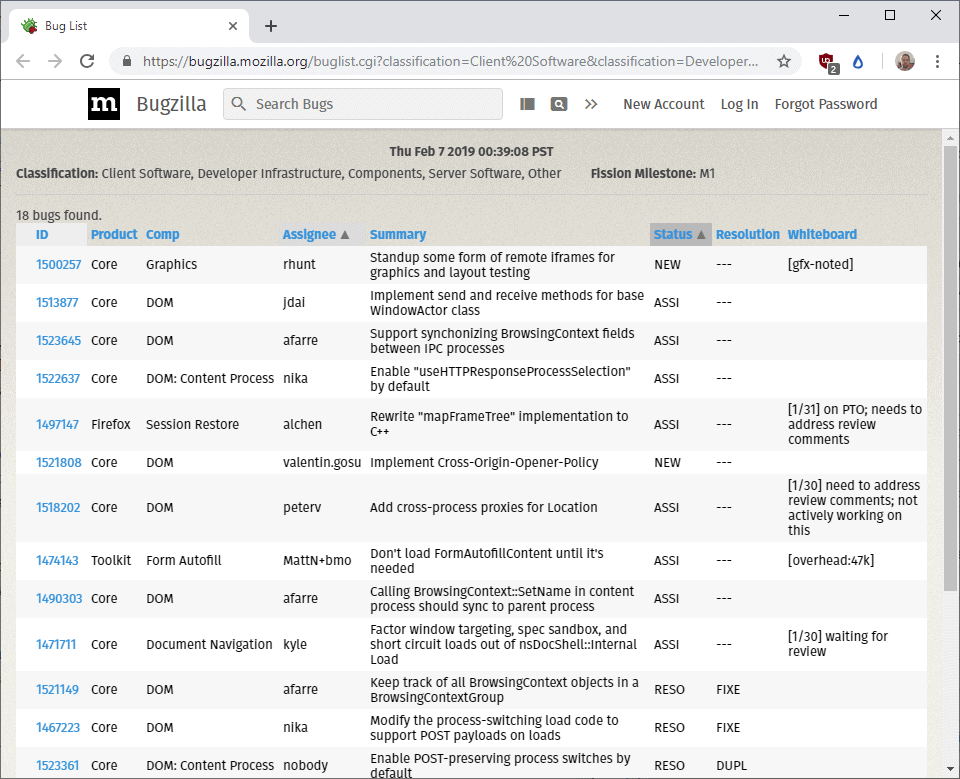

Mozilla wants to reach milestone 1 in February 2019. The organization has not set a target for inclusion in stable versions of Firefox, as it is a mammoth project that requires effort from nearly every Firefox engineering team.

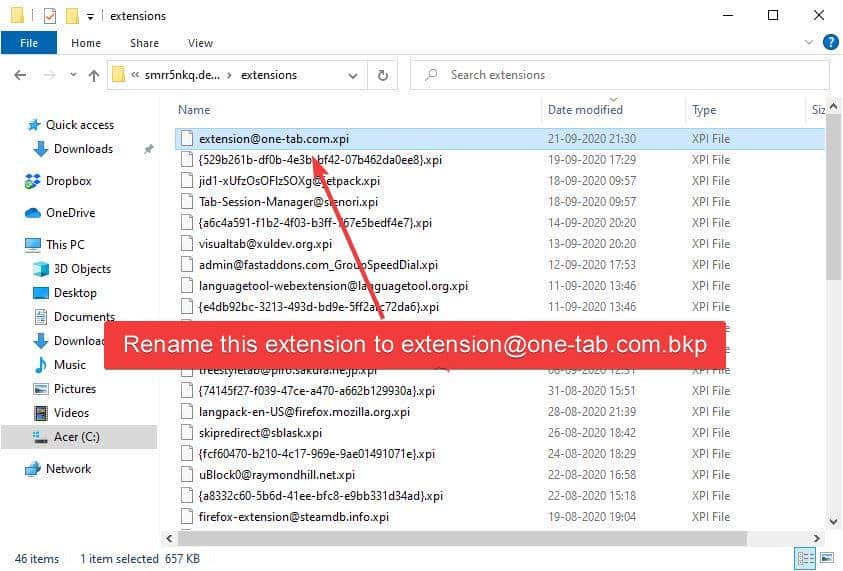

Milestone 1 lays the groundwork for full site isolation in the Firefox web browser. Firefox users interested in Mozilla's progress may head over to Bugzilla@Mozilla to follow development closely.

Project Fission's full site isolation is meant to protect Firefox against new Meltdown- or Spectre-style CPU flaws that may be discovered in the future. Mozilla patched Firefox against the flaws discovered so far, but under Firefox's current architecture, Mozilla would have to adjust Firefox each time a new flaw is discovered.

With site isolation, Firefox would be far better positioned to block such exploits as they are discovered, and security and stability would improve generally as well. The trade-off is that Firefox will use more memory once full site isolation launches. It is too early to tell how much memory usage will increase; if it lands in Google's 20% ballpark, it could very well become a problem for some configurations.

Now You: What is your take on Project Fission / full site isolation?

I disabled multi-process some time ago in a haphazard, process-of-elimination attempt to stop FF from hogging ridiculously large amounts of memory (up to 12GB of 16GB!), which caused the browser to grind to a halt. I'm loath to re-enable it. Any advice? TIA.

I gave up on Chrome because their site isolation was using more RAM for protection from something that only exists on paper. Of course Firefox has to follow Chrome's lead and create something similar. I find it disturbing how many patches and firmware updates we have thrown at the Spectre stuff without a hint of it being exploited. I'm fine with the tin foil hat people getting more assurance. But give us all an off switch so we don't have to use any of it unless it's needed.

It's really not that bad, to be honest. Some of us here still use older processors like the 2nd-generation Intel i7, which only has one of the big CPU exploits of 2018 fixed rather than both Meltdown and Spectre. Plus, there's a reason you have RAM in the first place, and it's to use it. Obviously a program shouldn't waste it on pointless garbage/features, but this update is somewhat necessary.

who cares. really.

sören hentzschel wrote in a comment: “advertising is an integral part of the web and necessary for economic reasons.”

(https://www.soeren-hentzschel.at/firefox/firefox-67-cryptomining-fingerprinting-blocker/#comment-32172)

that’s firefox these days. avoid this browser, it’s enough. don’t be fooled into believing that this cancer is an “integral part of the web”. an integral part of the web is a functioning blocker against this cancer (if you want to support someone, like martin on ghacks, please do so via paypal or whatever)

this goes even more for the linux community, which offers this browser by default, often even as the only (simple/tweak-free) repo alternative. in the official fedora & manjaro & mint & opensuse & ... forums they didn’t even know that ff phones home to google via safe-browsing and that you are dealing with 2 policies here (linux fork or not). and that’s just the tip of the iceberg, as almost everyone here knows.

And the award for the “most irrelevant cantankerous rant” goes to…

ad hominem. like most of the time, when it comes to this.

I am glad they will implement it. I always feel a bit more secure using Brave browser when I am clicking on new tech blog sites, preferably with Sandboxie. The First Party Isolation extension can be removed then, I guess.

Hopefully Palemoon gets Site Isolation along with WebRender.

I think anything that gives security and protection is good, but I don’t like finding upwards of 17 processes in the background using a lot of memory and freezing everything!!!

This is not what I thought Firefox was!!!!!

WebRender arrived after Firefox 57 (Quantum), maybe in Firefox 64, so for Palemoon to get it, it would have to start using Firefox 64 as a base. And I think they use this old version, Firefox 27, as a base because they have something in mind. If they updated to Firefox 64, it would defeat the purpose of Palemoon. Also, the reason Basilisk exists is that Palemoon already has compatibility issues with many websites.

Considering that this Site Isolation would require a multi-process architecture that would break a lot of the browser’s extensibility, I doubt Pale Moon will adopt something like this any time soon.

@Weilan please re-check your facts. The platform that Pale Moon and Basilisk both use is mostly based on Firefox 52-55, not 27; there are not that many differences between them in terms of compatibility.

I have 8GB of non-upgradeable RAM on my laptop, and I have never come close to maxing it out using Firefox or any other browser. That being said, for those with 2-4GB RAM systems, I could see how this would be annoying and a negative. Still, selfishly, I will appreciate the increased security.

https://data.firefox.com/dashboard/hardware

Data suggests 20% of Firefox users have less than 3GB of RAM.

Why are you moving the goalposts? More than 30% of Firefox users have only 4GB, so if you add that to the 2-4GB range, you get a very sizeable chunk of Firefox users. Also, even if only 20% of users had 2-4GB of RAM, that would still be significant. Heck, even for Android users, it's still significant.

True, and it will stink for those who have 3GB or less going forward. That being said, that same data indicates that the number of people with 8GB or more of RAM is rising, and I doubt that trend will slow down as lower-memory systems are phased out of the marketplace. Outside of REALLY low-end Windows hardware (which nobody should buy if they want a pleasant experience) and ChromeOS, it's really hard to find a new laptop with less than 4GB of RAM anymore. Even phones with 4GB of RAM (on the Android side) are becoming rarer as OEMs from China throw in 6-8GB as a sales tactic.

I’m using Firefox and I like it, but it feels like they’re copying Chrome even more with the multiple processes.

If they really cared about Spectre/Meltdown and other timing-related security and privacy problems such as history leaking, they would stop giving websites high-resolution timers for performance telemetry, and they wouldn't have removed the pref that let users disable web workers.

https://bugzilla.mozilla.org/show_bug.cgi?id=1434934#c8

https://blog.mozilla.org/security/2018/01/03/mitigations-landing-new-class-timing-attack/

But sites' needs seem more important than users' security when the two conflict.

Unrelated, but the latest example of their “security” mindset: storing payment credentials in the browser and giving sites an API to access them under some conditions. A security and privacy disaster just waiting to happen when this gets exploited (and a bonus if they get sent to Google via account sync on Chrome; I didn't check that). The only justification for implementing that? Users won't have to lose a few seconds finding and typing their credit card number, so they'll buy more on e-commerce sites.

Spending so many man-hours on building very elaborate security mechanisms like Project Fission and then ruining all security credibility by introducing such trivial vulnerabilities themselves…

I see the benefits of implementing this, but I am happy with the current implementation and memory levels, and IMO the average user may notice the memory increase and see it as bad without understanding the underlying cause and benefits.

“Look, Firefox uses more memory!” – Googlesoft propaganda.