Wolfram Alpha Gets Its First Core Update After Launch

One thing that many webmasters and users dislike is that search engines keep them in the dark about updates, whether these are changes to the search algorithm or other modifications. The Wolfram Alpha team seems to feel the same way: it has published details on its blog about the first core update since the launch of the new search engine.

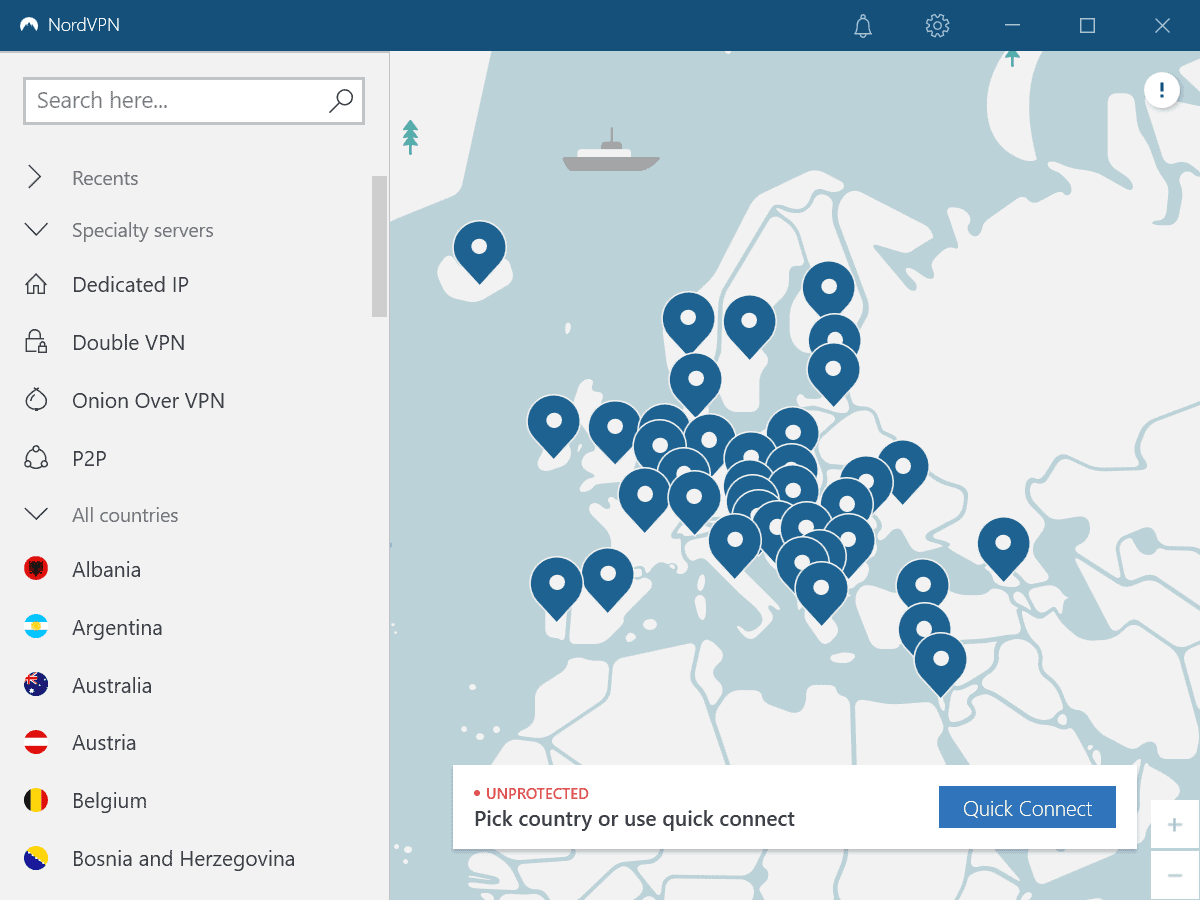

The blog post lists the major changes of the core update and mentions at the end that about 1.1 million data values were affected. Most of the listed changes add new searches or information to the search engine. These include updates for some European countries such as Slovakia, for regions (like Wales) and for country borders (India, China, ...), as well as more interesting comparisons (USA deficit vs. UK), combined time series plots of different quantities (population Germany gdp), and additional ways of searching for information and data entries.

- More comparisons of composite properties

- City-by-city handling of U.S. states with multiple timezones

- Additional probability computations for cards and coins

- Additional output for partitions of integers

- Improved linguistic handling for many foods

- Support for many less-common given names

- More “self-aware” questions answered

There is obviously still a fundamental difference between the information provided by Wolfram Alpha and that of classic search engines like Google Search, Bing or Yahoo Search. The developers are, however, well on their way to filling a gap that most users were probably not aware of until they discovered that Wolfram Alpha can compute information that would otherwise take a lot of manual work.

Still, the search engine is limited, and whether it understands a search query sometimes depends on how the query is phrased.

finally some good stuff for slovakia hh