Download web pages to your local computer with WebCopy

Sometimes you may want to download a website, or part of it, to your local system. Maybe you want to make use of the contents while you are offline, or for safekeeping reasons so that you can access the contents even if the website becomes temporarily or permanently unavailable.

My favorite tool for the job is HTTrack. It is free and ships with an impressive number of features. That is great if you spend some time getting used to what the program has to offer, but sometimes you may want a faster solution that you do not have to configure extensively before use.

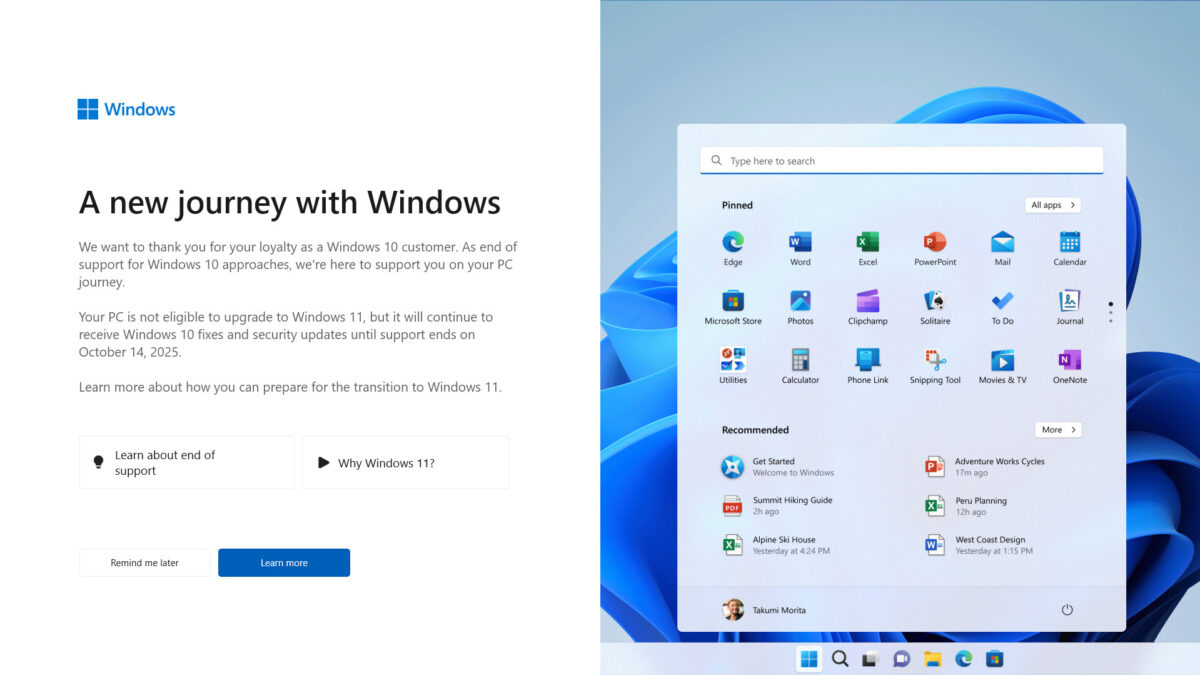

That's where WebCopy comes into play. It is a sophisticated program as well, as you will find out when you dig deeper into the application's settings, but if you want to copy a web page to your local system quickly, you can do so right away and ignore the advanced configuration options.

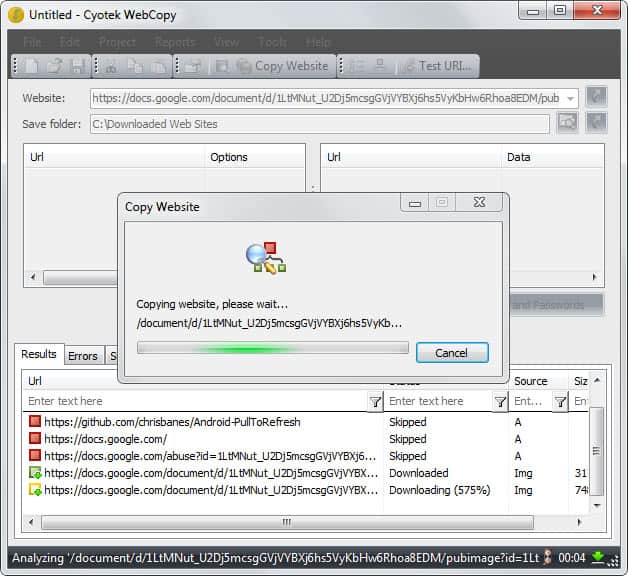

- Paste or enter a web address into the website field in WebCopy.

- Make sure the save folder is correct.

- Click on copy website to start the download.

That's all there is to it. The program processes the selected page for you, echoing the progress in the results tab in the interface. Here you see downloaded and skipped files, as well as errors that may prevent the download altogether. The error message may help you analyze why a particular page or file cannot be downloaded. Most of the time though, you can't really do anything about it.

You can access the locally stored copies with a click on the open local folder button, or by navigating to the save folder manually.

This basic option only gets you so far, as you can only copy a single web page this way. You need to define rules if you want to download additional pages or even the entire website. Rules may also help you when you encounter broken pages that cannot be copied, as you can exclude them from the download so that the remaining pages still get downloaded to the local system.

To add rules, right-click on the rules listing in the main interface and select add from the options. Rules are patterns that are matched against the website structure. To exclude a particular directory from being crawled, you'd simply add it as a pattern and select the exclude option in the rules configuration menu.
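To get a feel for how exclude patterns behave before you add them, here is a minimal Python sketch. It assumes that a rule works like a regular expression matched against the path part of a URL; the function name `should_download`, the example patterns and the example.com URLs are all made up for illustration and are not taken from WebCopy.

```python
import re
from urllib.parse import urlsplit


def should_download(url, exclude_patterns):
    """Return False if the URL's path matches any exclude pattern."""
    path = urlsplit(url).path
    return not any(re.search(pattern, path) for pattern in exclude_patterns)


# Hypothetical rules: skip the /forum/ directory and any .zip file.
exclude = [r"^/forum/", r"\.zip$"]

urls = [
    "https://example.com/index.html",
    "https://example.com/forum/thread-1.html",
    "https://example.com/files/archive.zip",
]

# Keep only the URLs that no exclude rule matches.
kept = [u for u in urls if should_download(u, exclude)]
print(kept)
```

Anchoring the directory pattern with `^` keeps it from also matching a path such as `/blog/forum/`, which is easy to overlook when a rule excludes more than you intended.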

It is still not as intuitive as HTTrack's link depth parameter, which you can use to define the depth of the crawl and download.
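If the idea of a link depth is new to you, the following Python sketch shows what such a limit does in principle. It is not how HTTrack or WebCopy are implemented; it simply walks a small made-up link graph breadth-first and stops following links once a page sits at the maximum depth. The `crawl` function, the `get_links` callback and the `site` dictionary are assumptions for the example.

```python
from collections import deque


def crawl(start, get_links, max_depth):
    """Breadth-first walk that does not follow links beyond max_depth.

    Returns a dict mapping each discovered page to its depth.
    """
    seen = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        depth = seen[page]
        if depth == max_depth:
            continue  # deep enough: keep the page, ignore its links
        for link in get_links(page):
            if link not in seen:
                seen[link] = depth + 1
                queue.append(link)
    return seen


# A tiny in-memory "website": page -> links found on that page.
site = {
    "/": ["/about", "/blog"],
    "/blog": ["/blog/post-1", "/"],
    "/blog/post-1": ["/blog/post-1/comments"],
}

pages = crawl("/", lambda page: site.get(page, []), max_depth=1)
print(sorted(pages))
```

With a depth of 1 only the start page and the pages it links to directly are collected; raising the value to 2 would add `/blog/post-1` as well.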

WebCopy supports authentication which you can add in the forms and password settings. Here you can add a web address that requires authentication, and a username and password that you want the web crawler to use to access the contents.
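For comparison, this is roughly what pairing an address with a username and password looks like in a script. The sketch uses Python's standard `urllib.request` module and covers HTTP Basic authentication only; whether WebCopy handles a given site this way or through a login form is not something this example can tell you. The address and the credentials are placeholders.

```python
import urllib.request


def make_authenticated_opener(protected_url, username, password):
    """Build an opener that answers HTTP Basic auth challenges for one URL prefix."""
    manager = urllib.request.HTTPPasswordMgrWithDefaultRealm()
    # None as the realm means "use these credentials for any realm at this URL".
    manager.add_password(None, protected_url, username, password)
    handler = urllib.request.HTTPBasicAuthHandler(manager)
    return urllib.request.build_opener(handler), manager


# Placeholder address and credentials; no request is sent here.
opener, manager = make_authenticated_opener(
    "https://example.com/private/", "alice", "s3cret"
)

# The credentials apply to every page below the registered address.
print(manager.find_user_password(None, "https://example.com/private/page.html"))
```

Calling `opener.open(...)` with a page under the registered address would then send the credentials when the server asks for them.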

Tips

- The website diagram menu displays the structure of the active website to you. You can use it to add rules to the crawler.

- You can add additional URLs that you want included in the download under Project Properties > Additional URLs. This can be useful if the crawler cannot discover the URLs automatically.

- The default user agent can be changed in the options. While that is usually not necessary, you may encounter some servers that block it so that you need to modify it to download the website.
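The user agent tip applies to any downloader, not just WebCopy. As a small illustration, this Python sketch builds a request with a custom `User-Agent` header using the standard `urllib.request` module. The user agent string and the `build_request` helper are made up for the example, and no request is actually sent.

```python
import urllib.request


def build_request(url, user_agent):
    """Create a request object that identifies itself with the given user agent."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})


# Hypothetical user agent string; pick one the server accepts.
request = build_request(
    "https://example.com/", "Mozilla/5.0 (compatible; ExampleCopier/1.0)"
)

# urllib stores header names capitalized as "User-agent".
print(request.get_header("User-agent"))
```

Passing the request to `urllib.request.urlopen` would send it with that header in place of Python's default one.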

Verdict

The program is ideal for downloading single web pages to the local system. The rules system, on the other hand, is not that comfortable to use if you want to download multiple pages from a website. I'd prefer an option in the settings to simply select a link depth that I want the program to crawl and be done with it. (via Make Tech Easier)

Anyone have a trick on how to parse over 200k worth of pages as individual complete pages?

I have the listing. I could do it one by one, but I would be at it for decades. I can’t use HTTrack due to all the extra crap files, and WebCopy here is almost a save-without-extras type of situation.

I tried the save as add-on from firefox but you need to have the pages opened to do so.

Adding links to the save menu only gets the html.

Reason for all the pages is I want to reformat the public domain data into a chm or more of a proper book format for easier searching and reading…

Have you tried Free Download Manager?

http://www.freedownloadmanager.org/

I’m not sure how good it is though.

Looks more intuitive than HTTrack. Wish it didn’t need the .NET Framework though.

I love HTTrack.

I use Mozilla MAF addon for all single pages:

https://addons.mozilla.org/en-US/firefox/addon/mozilla-archive-format/?src=userprofile

It stores a single web page + graphics as a single MAFF file (or MHT if you choose).

MAFF is a zipped copy of the page and all its structure. Can be opened by any zip program in the future so your saved pages are safe.

IE and Opera can read and save MHT.

Google Chrome is stunted and can only read MHT files.

I guess Google does not want you to save copies of web pages locally.

Firefox with MAF addon is *indispensable* for web research.

I save hundreds of pages weekly when researching products or reverse engineering things.

Web pages vanish all the time. Bookmarks are a weak substitute.

As an example, here is a forum page about how to do bedding of deck hardware on a boat properly. Many pics and great info:

http://www.sailnet.com/forums/gear-maintenance/63554-bedding-deck-hardware-butyl-tape.html

With Mozilla MAF addon I can right click and “Save Page In Archive As”..direct to MAFF format. Then I’m on to another web page in seconds. Can open the page later for in depth reading. If it’s good info it gets burned to DVD later. Perfect.

HTTrack is great, but Teleport Pro/Ultra etc. is best :) Anyway, you can’t beat the price.